Yes, in theory this should work at any scale. The key subjective term, of course, is “eventually”: the convergence would need to happen quickly enough for the encoder to be useful to the specific application relying on it. The best way to determine this would be to actually write an implementation and apply it to increasingly complex systems to see how the convergence time is affected.
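One way to start that comparison is a small harness that grows the system and measures something convergence-like at each size. The sketch below is only an illustration under stated assumptions: the environments are hypothetical random graphs (a ring plus random extra edges, so every state stays reachable), and "convergence" is replaced by a crude proxy, the number of random steps needed before every transition has been seen at least once. A real test would measure the encoder's own stability instead.

```python
import random


def make_environment(n_states, extra_edges=2, seed=0):
    """Hypothetical test environment: a ring (so every state stays reachable)
    plus `extra_edges` random outgoing edges per state."""
    rng = random.Random(seed)
    edges = {s: {(s + 1) % n_states} for s in range(n_states)}
    for s in range(n_states):
        for _ in range(extra_edges):
            edges[s].add(rng.randrange(n_states))
    return {s: sorted(targets) for s, targets in edges.items()}


def steps_to_cover(edges, seed=0, max_steps=10_000_000):
    """Random-walk from state 0 until every transition has been observed once.
    This coverage count is only a crude proxy for 'convergence'."""
    rng = random.Random(seed)
    unseen = {(s, t) for s, targets in edges.items() for t in targets}
    state = 0
    for step in range(1, max_steps + 1):
        nxt = rng.choice(edges[state])
        unseen.discard((state, nxt))
        state = nxt
        if not unseen:
            return step
    return None  # did not converge within the step budget


# Compare the proxy across increasingly large systems.
for n in (8, 32, 128):
    print(n, steps_to_cover(make_environment(n)))
```

Swapping `steps_to_cover` for a measurement of how long the encoder's SDRs take to stop changing would turn this into the experiment described above.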

Indeed, this is something you’d use if you didn’t already have an adequate encoder for the environment. Maybe this is what you’d use for AGI. Ideally, though, if you had a new environment you wanted to build an encoder for, this could be preprocessed: you could explore the environment at random very quickly without any HTM learning, just a rapid succession of random movements. That would give you a baseline of the environment’s dense representation space and the probability of occurrence of every part of each representation (if you didn’t have that information already). With this probabilistic baseline, and the encoder created from it, you could then start your HTM system, and theoretically the encoder would already have done most of its heavy lifting.
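The random-exploration preprocessing step could look roughly like the sketch below. It assumes the environment is exposed as a hypothetical `step_fn(state, action)` function and simply counts how often each state and each transition occurs while taking random actions, with no learning involved. Counting individual parts of each dense representation (for example, each element of a tuple-valued state) would follow the same pattern.

```python
import random
from collections import Counter


def explore(step_fn, start, actions, n_steps, seed=0):
    """Random exploration with no learning: apply random actions and count how often
    each state and each (state, next_state) transition is observed."""
    rng = random.Random(seed)
    state_counts, transition_counts = Counter([start]), Counter()
    state = start
    for _ in range(n_steps):
        nxt = step_fn(state, rng.choice(actions))
        state_counts[nxt] += 1
        transition_counts[(state, nxt)] += 1
        state = nxt
    total = sum(state_counts.values())
    state_probs = {s: c / total for s, c in state_counts.items()}
    return state_probs, transition_counts


# Toy environment (hypothetical): a 5-cell corridor, actions move left or right.
def corridor(state, action):
    return min(4, max(0, state + action))


probs, transitions = explore(corridor, start=2, actions=[-1, +1], n_steps=10_000)
print(sorted(probs.items()))
print(transitions.most_common(3))
```

The resulting state probabilities and transition counts are the kind of baseline an encoder could be built from before any HTM learning starts.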

Whether or not we have knowledge of the environment beforehand, we are essentially just trying to automate the ‘encoder creation’ process. To automate it, we need to know all the relevant data our brains use to create an encoder, and we need to know how to use that data to build an algorithm that reduces dense representations into semantic sparse representations.

Thinking about this some more, a Semantic Folding strategy like the one used by cortical.io would be well suited to cause/effect eligibility traces (that is essentially what they are doing with words: associating them based on how close they appear to other words over many input sentences). So rather than modifying the bits of the encoding directly as I described above, you would place and move the inputs around in an evolving “Semantic 2D Map”, and use the map to produce SDRs on the fly.
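Here is one possible reading of the “Semantic 2D Map” idea, as a minimal sketch rather than cortical.io's algorithm. Assumptions: each input sits at a point on a grid, two inputs seen in cause/effect succession are pulled toward each other by a fixed fraction (`step`), and an input's SDR is produced on the fly as every grid cell within `radius` of its current position. Inputs that end up close together on the map therefore share bits. The class name, parameters, and update rule are all hypothetical.

```python
import math
import random


class SemanticMap:
    """Hypothetical 'Semantic 2D Map': each input sits at a point on a grid, inputs
    seen in cause/effect succession are pulled toward each other, and an input's SDR
    is read off the map as the grid cells near its current position."""

    def __init__(self, width=32, height=32, radius=3.0, step=0.25, seed=0):
        self.width, self.height = width, height
        self.radius = radius  # cells within this distance of an input become its active bits
        self.step = step      # fraction of the gap each input closes per observation
        self.rng = random.Random(seed)
        self.pos = {}         # input -> (x, y) position on the map

    def _place(self, item):
        if item not in self.pos:
            self.pos[item] = (self.rng.uniform(0, self.width - 1),
                              self.rng.uniform(0, self.height - 1))

    def observe(self, cause, effect):
        """Move two inputs seen in succession closer together on the map."""
        self._place(cause)
        self._place(effect)
        (x1, y1), (x2, y2) = self.pos[cause], self.pos[effect]
        dx, dy = x2 - x1, y2 - y1
        self.pos[cause] = (x1 + self.step * dx, y1 + self.step * dy)
        self.pos[effect] = (x2 - self.step * dx, y2 - self.step * dy)

    def sdr(self, item):
        """Produce the SDR on the fly: indices of all cells within `radius` of the input."""
        self._place(item)
        x, y = self.pos[item]
        return {gy * self.width + gx
                for gy in range(self.height)
                for gx in range(self.width)
                if math.hypot(gx - x, gy - y) <= self.radius}


m = SemanticMap()
for _ in range(20):
    m.observe("A", "B")
print(len(m.sdr("A") & m.sdr("B")), len(m.sdr("A")))
```

With attraction alone, anything that ever co-occurs eventually collapses to the same spot, so a usable version would need some counter-force (repulsion between unrelated inputs, decay, or a fixed layout). As far as I understand it, cortical.io's published approach instead lays out text contexts on the map and gives each word the bits of the contexts it appears in.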

Of course. I should have thought of that a long time ago: you can do with environments what you do with a corpus of text. Mmm. I’ll think about this some more.

So I ran a thought experiment for this.

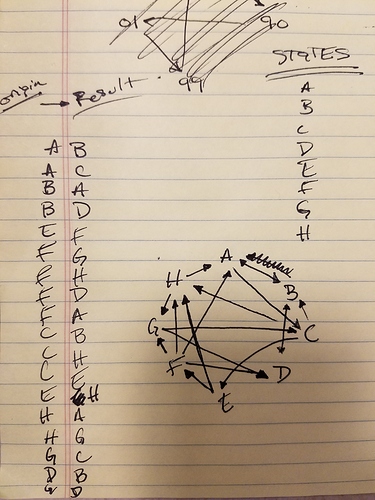

I imagined an environment with only 8 states, represented by the letters A through H.

Then I connected them at random, as you can see in the image above.

Then I wrote out all the states, what they lead to, and what leads to them.

Lastly, I produced an SDR for each state that encodes the semantics of this system’s causality, but of course not the likelihood of seeing any particular state, since I hadn’t generated that information first.
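The SDR construction can be sketched as three equal sections: an identity bit, a “to X” bit for every state X this state leads to, and a “from X” bit for every state X that leads to it (the layout discussed in the comments on the second image). The edge list is hypothetical: only “E, F and C lead to H” and “C leads to E, H and B” come from the post; every other edge is invented and is not read from the image.

```python
STATES = "ABCDEFGH"

# Hypothetical edge list. Only "E, F and C lead to H" and "C leads to E, H and B"
# come from the post; the other edges are invented and not read from the image.
EDGES = {
    "A": "BD", "B": "C", "C": "BEH", "D": "G",
    "E": "H", "F": "H", "G": "AF", "H": "A",
}


def causal_sdr(state, states=STATES, edges=EDGES):
    """Three sections of len(states) bits each: identity, 'to X', and 'from X'."""
    n = len(states)
    index = {s: i for i, s in enumerate(states)}
    bits = {index[state]}                                   # identity section
    bits |= {n + index[t] for t in edges[state]}            # "to X": state leads to X
    bits |= {2 * n + index[s] for s in states if state in edges[s]}  # "from X": X leads to state
    return bits


for s in STATES:
    on = causal_sdr(s)
    print(s, "".join("1" if i in on else "0" for i in range(3 * len(STATES))))
```

With this layout, E and F overlap only on the “to H” bit, and E, H and B overlap on the “from C” bit, which is the kind of shared-bit semantics described for the thought experiment.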

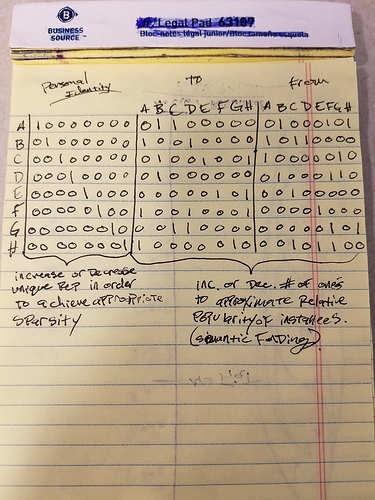

The first portion in this image, the “personal identity” bits, is created in the same manner as described in my previous post above. Actually, the “personal identity” portion isn’t sustainable: you can’t have one guaranteed unique bit for every state of a system, because the number of identity bits would have to grow with the number of states.

The “to” and “from” portions are simply a mapping of what leads to what. State E shares a bit with F and C because all three lead to H, and it shares a bit with H and B because C leads to all three of those states. So we’ve semantically encoded, in a generic way, the causal map of the system. All that’s left, as the notes below describe, is to increase or decrease the number of shared bits depending on how often one state leads to another in your particular application. For instance, if E leads to H constantly but F rarely does, perhaps E’s representation can have several 1s that correspond to “to H”, whereas F has only one of those bits on. This is where my hunch came from that we might want the encoder to be able to move columnar connections around. Let’s say you discover H is a very popular node, so you give it more bits in the “to” portions of the representations. The columns that were connected to the single bit for “to H” should, once you add another bit for “to H”, be connected to that bit as well.
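Both ideas, frequency-weighted “to” bits and carrying column connections over to newly added bits, can be sketched together. Assumptions, all hypothetical: each “to X” feature owns a growable list of bits; a state turns on a share of those bits proportional to P(X | state), estimated from transition counts, with at least one bit on; and when a feature gains a bit, any column already wired to that feature's existing bits is wired to the new one too. The transition counts and the column id are made up for illustration.

```python
from collections import defaultdict


class WeightedCausalEncoder:
    """Hypothetical sketch: each 'to X' feature owns a growable list of bits, a state
    turns on a share of those bits proportional to P(X | state), and columns already
    wired to a feature are wired to any bit the feature gains later."""

    def __init__(self):
        self.feature_bits = defaultdict(list)  # e.g. "to H" -> [0, 1, 2]
        self.column_inputs = defaultdict(set)  # column id -> input bits it connects to
        self.next_bit = 0

    def add_bit(self, feature):
        """Give `feature` one more bit and copy existing column connections onto it."""
        new_bit = self.next_bit
        self.next_bit += 1
        old_bits = set(self.feature_bits[feature])
        for inputs in self.column_inputs.values():
            if inputs & old_bits:
                inputs.add(new_bit)
        self.feature_bits[feature].append(new_bit)
        return new_bit

    def connect(self, column, feature):
        """Wire a column to every bit the feature currently owns."""
        self.column_inputs[column].update(self.feature_bits[feature])

    def encode_to_section(self, state, transition_counts):
        """More 'to X' bits are on the more often `state` was seen to lead to X."""
        out = {t: c for (s, t), c in transition_counts.items() if s == state}
        total = sum(out.values())
        bits = set()
        for target, count in out.items():
            feature = "to " + target
            if not self.feature_bits[feature]:
                self.add_bit(feature)
            owned = self.feature_bits[feature]
            k = max(1, round(len(owned) * count / total))
            bits.update(owned[:k])
        return bits


# Hypothetical counts: E almost always leads to H, F only rarely does.
counts = {("E", "H"): 9, ("E", "A"): 1, ("F", "H"): 1, ("F", "G"): 9}
enc = WeightedCausalEncoder()
enc.add_bit("to H")
enc.connect("column-7", "to H")  # hypothetical column wired to the single "to H" bit
for _ in range(3):               # H turns out to be popular: give it three more bits
    enc.add_bit("to H")
print(enc.column_inputs["column-7"])          # now wired to all four "to H" bits
print(enc.encode_to_section("E", counts))     # all four "to H" bits on, plus "to A"
print(enc.encode_to_section("F", counts))     # only one "to H" bit on, plus "to G"
```

Copying connections when a bit is added keeps previously learned column responses valid as the encoder grows, at the cost of scanning every column on each addition.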

So this is Semantic Folding for causality, nothing fancy. What if the states represented by single letters in this example were actually several characters long? Then the features of the dense spatial representation could be correlated and included in the semantic encoding process. I think that in any environment that can be traversed, causal maps may be a good guide for part of the semantic encoding, but I don’t think they’re a one-size-fits-all solution. There’s probably other information, from the HTM model itself or elsewhere, that could help the encoder become better suited to the environment as the intelligence explores it.

Also, this is a ‘universal encoder’ but not a ‘universal encoding algorithm’ that can produce highly fine-tuned feature and semantic encoders, the way evolution shaped our organs to better serve our neocortex. So this is not the end, but I think it’s a clue pointing in the right direction. Thanks for the Semantic Folding suggestion, Paul_Lamb!

From this thought experiment, you are comparing inputs to each other, correct? (Just clarifying what you are referring to as “states”.) I’m somewhat doubtful that causality can be deduced from inputs alone, though (although this is technically what cortical.io does with words, so I could be wrong). It is also quite possible that I misinterpreted what you are thinking.

My thought is that a better strategy would be for the environment to provide some feedback to the algorithm, so that “states” (effects) in the system are kept separate from the “inputs” (causes) into the system. Ultimately, the inputs are what we are trying to encode, not the states. An eligibility trace would then associate inputs with each other based on the states that result from them, rather than based on the inputs that follow them.
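A minimal sketch of that eligibility-trace idea, under assumptions that are mine rather than the post's: each input leaves a trace that decays by a fixed factor (`decay`) on every step, whenever the environment reports a state every input is credited with that state in proportion to its current trace, and two inputs are compared by the cosine similarity of their state-credit profiles. The class and method names are hypothetical.

```python
import math
from collections import defaultdict


class StateAssociator:
    """Hypothetical sketch: inputs (causes) leave a decaying eligibility trace, and
    whenever the environment reports a state (effect), every eligible input is
    credited with that state. Inputs are then compared by their state profiles."""

    def __init__(self, decay=0.1):
        self.decay = decay                 # per-step multiplier on each input's eligibility
        self.trace = defaultdict(float)    # input -> current eligibility
        self.credit = defaultdict(lambda: defaultdict(float))  # input -> state -> credit

    def step(self, inp, state=None):
        for k in self.trace:
            self.trace[k] *= self.decay
        self.trace[inp] += 1.0
        if state is not None:
            for k, e in self.trace.items():
                self.credit[k][state] += e

    def similarity(self, a, b):
        """Cosine similarity of two inputs' state-credit profiles."""
        va, vb = self.credit[a], self.credit[b]
        dot = sum(v * vb.get(s, 0.0) for s, v in va.items())
        na = math.sqrt(sum(v * v for v in va.values()))
        nb = math.sqrt(sum(v * v for v in vb.values()))
        return dot / (na * nb) if na and nb else 0.0


# "x" and "y" never occur next to each other, but both are followed by state "S1";
# "z" sits between them in the stream but is followed by "S2".
sa = StateAssociator()
for _ in range(10):
    sa.step("x", "S1")
    sa.step("z", "S2")
    sa.step("y", "S1")
    sa.step("z", "S2")
print(round(sa.similarity("x", "y"), 3), round(sa.similarity("x", "z"), 3))
```

Associating inputs by adjacency alone would pair x with z here; associating them through the resulting states pairs x with y instead.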

The way I word things may be confusing. Allow me to clarify.

An environment is a set of “states” that the environment can be in, regardless of their dense or sparse representations.

I always see HTM as an organism in an environment: a sensorimotor inference engine. I know NuPIC isn’t set up that way yet, but that’s how I think of it, as an organism in charge of its movements, able to affect its own sensory input.

So I don’t know how you’re separating “states” from “inputs”, but hopefully you now know what I mean when I say “state”. I’m not talking about the state of the HTM or anything like that; I’m talking about its sensory input from the environment, whether that’s something static like server data that it can’t control, or sensory input from a camera it can move around a room.

I see. In a sensorimotor system, what is sensed next is at least partially an effect of behavior (which is itself derived from what is sensed), so I see where you are coming from. In that case, deriving causality from the sensory input alone makes more sense.

Where I am doubtful is in an implementation where the system does not interact with the environment but just analyzes an input data stream (current HTM applications like prediction, anomaly detection, etc.). I am doubtful that semantics can really be derived just from the inputs and their proximity to each other (although that is what cortical.io does with words, so I could be wrong). It would make more sense if there were some feedback about the state of the system the data stream comes from (like “booting up”, “installing updates”, “shutting down”, etc.), and that feedback were used to associate the inputs (“CPU temperature”, “memory utilization”, etc.) based on their proximity to similar states rather than their proximity to each other.

Another way to frame my concern: a “state” really contains much more information than can be derived from the sensory input alone. Of course, in the real world it is impossible to know everything about a particular state. Would access to more state information improve the encoding of semantics, or would the extra complexity just add noise or increase the time to reach stability? Maybe there is a “sweet spot” where a certain level of complexity tends to work best. These are some interesting things to explore if this leads to an actual implementation.