Yeah! Great art, like beautiful scientific discoveries, comes to you according to no one’s plan. Comforting enough, at least.

Well, the “similarity” used by a “stacking” mechanism could in principle be whatever you want, yes. That then generates the “feeling-of-similarity”, so you might be right in that sense. But that’s not the same as saying we humans get to choose what “feeling-of-similarity” we’re going to perceive. I think we’re locked in to this shared context/prediction grouping property. That’s something specific. We will only perceive objects in the world if they share some kind of predictive destiny, like the different atoms in a football, say. Both physically and perceptually, for our visual system, what makes them an object is that the different parts of a football tend to share contexts and predictive effects.

But that’s not the only way it could be done. Another one of my favourite references on this is some work on “reverse engineering” I saw in a talk some years back. In it, the presenter proposes dynamic, generative, relational principles to generate new perceptual “objects” (as I recall… locality preservation, and… some kind of fractal transform… or maybe it’s the fractal transform which is the locality-preserving one!). It’s only because he determines “objects” through a dynamic, generative property, like the grouping principles I am proposing, that I see what he is doing as an analogue to human perception. These “objects”, and the relational principles by which they are generated, are totally non-human. Humans cannot see them. But he finds them useful for other purposes, purposes humans have not evolved to carry out effectively. For instance, he uses them to find images in binary data, or cryptographic keys. Stuff humans are completely blind to, at best learning a few common patterns over time. It’s a completely inhuman relational principle. Like the mechanism I’m suggesting for human perception, it also constantly generates new “objects”, but these are non-human objects.

I think of it as an example of how an alien intelligence might differ from ours (at least in the way it might resolve the world into perceptual objects).

Christopher Domas, “The future of RE: Dynamic Binary Visualization”

Just a comment on this, @cezar_t. What you say about reservoir computing needing to be below the level of chaos may be true because reservoir computing is just a hash. But the “enhancement” I suggest developing on top of it would not just be hashing. It would actually be “stacking” past events in new ways. I think these ways could be different attractors of a chaotic system. There’s no reason why they shouldn’t be, anyway, because you do want new structure with these new “stackings”. With reservoir computing you probably don’t want actual new structure; you just want a maximally sensitive hash of known structure. But with this enhancement, it might (occasionally?) step over the line and generate new structure. And this new structure could be at the same time new, chaotic, and meaningful: meaningful because the way the novel combinations are generated would itself carry meaning.

Sounds like this guy is putting a lot of effort into making hacking/reverse engineering actually look like what we see in movies… I love it.

Yeah. Very cool. And I love thinking about the objects he generates as perceptual objects for some potential alien being.

But the really cool thing will be when we implement this for a system which works like human vision!

Something which generates “objects” that correspond to objects that people see. Generating them. Novel objects. The way the objects Domas generates are novel, but still meaningful.

Something that doesn’t depend on trying to label every last speck of dust on the planet so it can be “learned” beforehand, the way we do now.

I see what Tesla is doing: heaps of money thrown at artificial situation generators to generate every conceivable thing that might happen beforehand, so every conceivable thing can be “learned” before it happens.

Or language transformers, only novel by interpolation over stuff that’s been said before.

So much money and effort being wasted.

Similarity in this context is about metrics, i.e. a measurable distance between two arbitrary data points.

Define some metrics for inputs, some (same or different) metrics for outputs. Then “similarity is preserved” simply means that when

Din(A,B) < Din(C,D)

then

Dout(f(A),f(B)) < Dout(f(C), f(D))

Where

- A,B,C,D are arbitrary input data points,

- f() is a function that propagates similarity, i.e. f(X) is the output for input X,

- Din(X,Y) is the distance between points X and Y in the input space,

- Dout(U,W) is the distance between points U and W in the output space.

Sure, the actual metrics can be arbitrary, but when you apply them consistently and f() really preserves similarity, you might be surprised to notice that it does so for many distance functions.
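The definition above can be checked mechanically on a finite sample. A minimal sketch, assuming one-dimensional points with absolute difference as both Din and Dout; `preserves_similarity` and `absdist` are illustrative names, not from any library. It only constrains pairs whose input distances differ strictly.

```python
from itertools import combinations

def preserves_similarity(points, f, d_in, d_out):
    """True if d_in(A,B) < d_in(C,D) implies d_out(f(A),f(B)) < d_out(f(C),f(D))
    for every two pairs (A,B), (C,D) drawn from `points`."""
    pairs = list(combinations(points, 2))
    for a, b in pairs:
        for c, d in pairs:
            if d_in(a, b) < d_in(c, d) and not d_out(f(a), f(b)) < d_out(f(c), f(d)):
                return False
    return True

def absdist(x, y):
    return abs(x - y)

pts = [0.0, 1.0, 3.0, 7.0]
print(preserves_similarity(pts, lambda x: 2 * x + 5, absdist, absdist))  # True
print(preserves_similarity(pts, lambda x: x % 4, absdist, absdist))      # False
```

A linear map keeps every ordering of distances; a wrap-around map like x mod 4 (a crude stand-in for a hash) puts 3 and 7 on top of each other and breaks it.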

PS: hash functions in general do not preserve similarity; cryptographic hashes, for example, are explicitly designed to do the opposite. The ones that do are locality-sensitive hashes.
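To make the PS concrete: a cryptographic hash scrambles even a tiny change, while a locality-sensitive hash keeps nearby inputs nearby. A sketch assuming random-hyperplane LSH (one well-known LSH family, picked here for illustration) over small real vectors; the vectors, seed and bit count are arbitrary.

```python
import hashlib
import random

def sha256_bits(vec):
    """Cryptographic hash of the vector's text form, as a string of 256 bits."""
    digest = hashlib.sha256(repr(vec).encode()).digest()
    return "".join(f"{byte:08b}" for byte in digest)

def make_hyperplane_lsh(dim, n_bits, seed=0):
    """Random-hyperplane LSH: bit i records which side of random hyperplane i the vector is on."""
    rng = random.Random(seed)
    planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_bits)]
    def lsh(vec):
        return "".join("1" if sum(p * v for p, v in zip(plane, vec)) >= 0 else "0"
                       for plane in planes)
    return lsh

def hamming(s, t):
    return sum(x != y for x, y in zip(s, t))

a = [1.0, 2.0, 3.0, 4.0]
b = [1.0, 2.0, 3.0, 4.1]      # a small change to a
c = [-4.0, 3.0, -2.0, 1.0]    # unrelated to a (orthogonal, in fact)
lsh = make_hyperplane_lsh(dim=4, n_bits=64)

print("SHA-256 bits changed, a vs b:", hamming(sha256_bits(a), sha256_bits(b)), "of 256")
print("LSH bits changed,     a vs b:", hamming(lsh(a), lsh(b)), "of 64")
print("LSH bits changed,     a vs c:", hamming(lsh(a), lsh(c)), "of 64")
```

For SHA-256 roughly half the bits flip on the tiny change; for the LSH, the number of differing bits tracks the angle between the inputs.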

Also, there is a bit more to reservoirs than just hashes. They can be used for prediction and for generating sequences that look like the inputs. Which means that, when paired with simple learners, they are able to model the “outside world”.

Let’s say we have a time series with a single scalar value, e.g. temperature, which is fed into a reservoir, and the reservoir’s state (or output) is used to train a simple regression ML model that predicts the next temperature reading. So here we have prediction. And we can feed the predicted temperature back as input, predict the temperature at the next time step, and so on. Of course, with each new loop the predicted temperature drifts further from the “real” one, but if we compare plots of the self-predicted loop (the imagined future) with real data, we often see that they look similar. That means the reservoir-predictor pair (not the reservoir alone) is modelling (mimicking) the outer process that produces the fluctuations in temperature.
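The temperature example can be sketched as a small echo state network in plain Python. Assumptions, all illustrative: a hypothetical, noise-free sinusoidal “temperature” series (not real data), a 50-unit sparse random reservoir rescaled by its largest absolute row sum (a conservative stand-in for the usual spectral-radius scaling), and a ridge-regression readout from reservoir state to the next reading.

```python
import math
import random

def make_reservoir(n, density=0.2, scale=0.9, in_scale=0.5, seed=1):
    """Sparse random recurrent weights W and input weights W_in (fixed, never trained)."""
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) if rng.random() < density else 0.0 for _ in range(n)]
         for _ in range(n)]
    # Largest absolute row sum -> `scale` < 1: bounds the spectral radius and makes
    # the tanh update a contraction, so old inputs fade out.
    norm = max(sum(abs(w) for w in row) for row in W)
    W = [[w * scale / norm for w in row] for row in W]
    W_in = [rng.uniform(-in_scale, in_scale) for _ in range(n)]
    return W, W_in

def step(W, W_in, x, u):
    return [math.tanh(sum(a * b for a, b in zip(row, x)) + w_in * u)
            for row, w_in in zip(W, W_in)]

def ridge_fit(X, y, lam=1e-4):
    """Solve (X^T X + lam*I) w = X^T y by Gaussian elimination with partial pivoting."""
    d = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) + (lam if i == j else 0.0) for j in range(d)]
         + [sum(r[i] * t for r, t in zip(X, y))] for i in range(d)]
    for c in range(d):
        p = max(range(c, d), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, d):
            factor = A[r][c] / A[c][c]
            A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    w = [0.0] * d
    for i in reversed(range(d)):
        w[i] = (A[i][d] - sum(A[i][j] * w[j] for j in range(i + 1, d))) / A[i][i]
    return w

# Hypothetical series: a clean daily cycle in degrees C, one reading per hour.
temps = [15 + 5 * math.sin(2 * math.pi * t / 24) for t in range(300)]
u = [(T - 15) / 5 for T in temps]           # scale to [-1, 1]

W, W_in = make_reservoir(50)
x, features = [0.0] * 50, []
for value in u:
    x = step(W, W_in, x, value)
    features.append([1.0] + x)               # bias term + reservoir state

washout, split = 50, 250                     # drop start-up transient; hold out the tail
w = ridge_fit(features[washout:split], u[washout + 1:split + 1])  # state at t -> u[t+1]

def readout(f):
    return sum(wi * fi for wi, fi in zip(w, f))

errors = [readout(features[t]) - u[t + 1] for t in range(split, len(u) - 1)]
rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
print(f"one-step test RMSE (scaled units): {rmse:.4f}")

# Closed loop: feed each prediction back in as the next input (the "imagined future").
imagined = []
for _ in range(48):
    nxt = readout([1.0] + x)
    imagined.append(15 + 5 * nxt)
    x = step(W, W_in, x, nxt)
print([round(T, 1) for T in imagined[:6]])
```

Only `w` is trained; `W` and `W_in` stay random and fixed. Plotting `imagined` against the true continuation is the comparison described above.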

Not quite true for what I’m proposing, @cezar_t. For most of what is done for training conventional neural nets, yes. But not for the similarity I’m proposing.

The similarity I’m proposing is one of shared context.

I don’t think shared context (connectivity) can be plotted as distance between points in a space.

I might be wrong. Perhaps you can show me how.

Like the clusters in the Brazilian paper. Can the clusters in the Brazilian paper be characterized in terms of the distance between two points?

I think the difference is something like that between a cosine or dot-product and a cross-product. The cross-product doesn’t project dimensions on each other, it generates new dimensions. Things are similar, but similar in a new way. Not similar by sharing some old way.
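For readers who want the geometric picture behind that analogy, in ordinary 3-D vectors: the dot product collapses two vectors into one number measuring their overlap, while the cross product returns a vector perpendicular to both inputs. This only illustrates the analogy; it is not a model of the similarity being proposed.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

a = [1.0, 0.0, 0.0]
b = [1.0, 1.0, 0.0]
print(dot(a, b))             # 1.0: how much b lies along a, as a single number
c = cross(a, b)
print(c)                     # [0.0, 0.0, 1.0]: along an axis neither a nor b uses
print(dot(c, a), dot(c, b))  # 0.0 0.0: perpendicular to both inputs
```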

I just clarified what a function that preserves similarity from inputs to outputs means.

I also wanted to keep people informed about (my understanding of) how reservoir computing is used in many, if not most, research projects.

This meaning isn’t limited to neural nets. E.g. it is used in dimensionality reduction, reverse image search and word embeddings (GloVe, word2vec).

Why not? E.g. GloVe explicitly defines the similarity between two words in terms of how often they’re found in the same or nearby contexts. The resulting vectors (aka embeddings) of synonyms are very close to each other.
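A toy version of “similarity as shared context”, in the spirit of the GloVe example though not GloVe itself (GloVe fits vectors to the logarithms of global co-occurrence counts): represent each word by counts of its neighbours and compare with cosine similarity. The six-sentence corpus and the window of 2 are made up for illustration.

```python
import math
from collections import Counter, defaultdict

def context_vectors(sentences, window=2):
    """For each word, count the words appearing within `window` positions of it."""
    vecs = defaultdict(Counter)
    for sent in sentences:
        words = sent.split()
        for i, w in enumerate(words):
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    vecs[w][words[j]] += 1
    return vecs

def cosine(u, v):
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

corpus = ["the cat sat on the mat", "the dog sat on the mat",
          "a cat chased a mouse", "a dog chased a ball",
          "stocks fell on monday", "stocks rose on friday"]
vecs = context_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 3))     # 1.0: identical contexts
print(round(cosine(vecs["cat"], vecs["stocks"]), 3))  # 0.289: only "on" is shared
```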

I’ll have to check the Brazilian paper you mentioned.

Anyway however you define similarity, if you want that definition to be useful, you’ll have to be very specific so it can be measured / computed.

PS: I guess the Wikipedia page on reservoir computing is more useful for illustrating the core ideas and the various approaches to implementation.

Mark

What is reservoir computing?

Reservoir computing is a type of machine learning technique that is used for processing sequential data. Unlike traditional neural networks, which require significant amounts of training data and time to adjust their weights, reservoir computing uses a fixed, randomly generated set of connections between input and hidden layers called the “reservoir.”

The input data is fed into the reservoir and allowed to reverberate through the network, resulting in a complex pattern of activations that can be read out by a linear output layer to produce the final output. The output weights are the only part of the network that are trained, making it much faster and easier to train than traditional neural networks.

Reservoir computing has been used successfully in a wide range of applications, including speech recognition, time-series prediction, and control systems. Its ability to process sequential data in real-time with low computational cost has made it a popular choice for tasks such as signal processing and robotics.

Mark

Can the reservoir be sparse?

Yes, the reservoir in reservoir computing can be sparse. In fact, the sparsity of the reservoir is one of its key features that makes it computationally efficient and easier to implement.

A sparse reservoir is one in which only a small fraction of the possible connections between neurons in the input and hidden layers are active. This sparsity can be achieved by randomly pruning connections in the network or by using specific algorithms to generate sparse connections.
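One simple way to build and run a sparse reservoir, sketched under assumptions: every unit gets a fixed number k of random incoming connections (rather than pruning a dense matrix), weights are stored as adjacency lists, and rows are rescaled so the largest absolute row sum is 0.9, a conservative bound that keeps the spectral radius below 1. Sizes and seeds are arbitrary.

```python
import math
import random

def sparse_reservoir(n, k, seed=0):
    """Each unit gets exactly k random incoming connections; row i -> [(j, weight), ...]."""
    rng = random.Random(seed)
    rows = [[(j, rng.uniform(-1, 1)) for j in rng.sample(range(n), k)] for _ in range(n)]
    # Rescale so no row's absolute weight sum exceeds 0.9 (spectral radius < 1).
    worst = max(sum(abs(w) for _, w in row) for row in rows)
    return [[(j, w * 0.9 / worst) for j, w in row] for row in rows]

def sparse_step(rows, x, u, w_in):
    # n * k multiply-adds per step, instead of n * n for a dense reservoir.
    return [math.tanh(sum(w * x[j] for j, w in row) + w_in[i] * u)
            for i, row in enumerate(rows)]

n, k = 1000, 10
rows = sparse_reservoir(n, k)
rng = random.Random(1)
w_in = [rng.uniform(-0.5, 0.5) for _ in range(n)]
x = [0.0] * n
for u in [1.0, 0.0, -1.0, 0.5]:
    x = sparse_step(rows, x, u, w_in)
nonzeros = sum(len(r) for r in rows)
print(f"density = {nonzeros / (n * n):.3f}")  # density = 0.010
```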

Sparsity has been shown to have several advantages in reservoir computing. First, it reduces the computational complexity of the network, making it easier to implement and faster to train. Second, it allows for a better separation of input patterns, which can improve the network’s ability to classify or predict different input sequences. Finally, it can improve the robustness of the network to noise and other types of input variability.

Overall, sparsity is an important design feature in reservoir computing, and researchers continue to explore the best ways to generate and optimize sparse reservoirs for different applications.

Mark

What do you mean when you say reverberate?

In the context of reservoir computing, “reverberate” refers to the way that input signals propagate through the network and create a complex pattern of activations that can be used to generate the final output.

When the input data is fed into the reservoir, it interacts with the randomly generated connections between the input and hidden layers. These interactions cause the input signals to be transformed in a nonlinear way, resulting in a complex pattern of activations that reverberate through the network.

This reverberation allows the network to integrate information from multiple time steps of the input sequence and create a rich representation of the input data. The output layer of the network then reads out this representation and uses it to generate the final output.

The term “reverberation” is often used because the pattern of activations in the network can persist for a short period of time after the input signal has been removed, similar to the way that sound waves can reverberate in a physical space. This persistence of activity allows the network to process sequential data in real-time and make predictions or classifications based on the current and past inputs.
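The persistence described above is easy to see numerically. A sketch, assuming a dense 100-unit tanh reservoir rescaled so its largest absolute row sum is 0.9: drive it with a short pulse, then feed zeros, and track the largest unit activation. Activity continues after the input stops, and the row-sum bound guarantees it then shrinks by at least a factor of 0.9 per step.

```python
import math
import random

def reservoir(n=100, bound=0.9, seed=2):
    rng = random.Random(seed)
    W = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(n)]
    norm = max(sum(abs(w) for w in row) for row in W)
    W = [[w * bound / norm for w in row] for row in W]   # largest abs row sum = bound
    w_in = [rng.uniform(-1, 1) for _ in range(n)]
    return W, w_in

def step(W, w_in, x, u):
    return [math.tanh(sum(a * b for a, b in zip(row, x)) + wi * u)
            for row, wi in zip(W, w_in)]

W, w_in = reservoir()
x = [0.0] * len(W)
inputs = [1.0] * 5 + [0.0] * 25      # a 5-step pulse, then silence
activity = []
for u in inputs:
    x = step(W, w_in, x, u)
    activity.append(max(abs(v) for v in x))
for t in (4, 5, 10, 20, 29):
    print(t, round(activity[t], 4))   # still active at t=5, fading afterwards
```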

Mark

Does this imply that it has to be used with sequential data?

Reservoir computing was originally designed to process sequential data, but it can also be used for other types of data. In fact, one of the advantages of reservoir computing is its flexibility and ability to handle a wide range of input types and modalities.

While the original application of reservoir computing was focused on time-series data, researchers have also explored its use for other types of data, such as images, graphs, and text. For example, reservoir computing has been applied to image classification tasks by using the input pixels as a sequence of inputs, and to natural language processing tasks by using the words in a sentence as a sequence of inputs.

In general, reservoir computing can be used for any task that requires the processing of complex input data, regardless of whether the data is sequential or not. However, the specific design of the reservoir and the readout layer may need to be modified to accommodate the particular characteristics of the input data.

Mark

What is the best method to train the output layer?

The output layer of a reservoir computing network is typically trained using a linear regression method. The goal of training the output layer is to learn the weights that map the pattern of activations in the reservoir to the desired output.

The training process involves presenting a set of input sequences to the network and computing the corresponding reservoir activations. These activations are then used to train the output layer using linear regression or a similar method, such as ridge regression or Lasso regression. The trained output layer can then be used to generate the final output for new input sequences.
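The ridge-regression option has a closed form, w = (XᵀX + λI)⁻¹Xᵀy, where each row of X holds the collected reservoir activations for one time step. A plain-Python sketch; the “activations” here are made-up random numbers with a known linear target, only to show that a tiny λ recovers the true weights and a large λ shrinks them.

```python
import random

def ridge(X, y, lam):
    """Solve (X^T X + lam*I) w = X^T y for the readout weights w."""
    d = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) + (lam if i == j else 0.0) for j in range(d)]
         + [sum(r[i] * t for r, t in zip(X, y))] for i in range(d)]
    for c in range(d):                       # Gauss-Jordan with partial pivoting
        p = max(range(c, d), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        pivot = A[c][c]
        A[c] = [v / pivot for v in A[c]]
        for r in range(d):
            if r != c:
                factor = A[r][c]
                A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    return [row[d] for row in A]

rng = random.Random(0)
# Stand-in "reservoir activations": 100 time steps x 3 units (made-up numbers).
X = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(100)]
true_w = [2.0, -1.0, 0.5]
y = [sum(w * x for w, x in zip(true_w, row)) for row in X]   # noise-free targets

w_small = ridge(X, y, lam=1e-9)    # essentially least squares
w_large = ridge(X, y, lam=100.0)   # strong penalty pulls weights towards zero
print([round(v, 4) for v in w_small])   # [2.0, -1.0, 0.5]
print([round(v, 4) for v in w_large])
```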

Linear regression is a popular choice for training the output layer because it is computationally efficient and can be easily implemented. It also allows for a straightforward interpretation of the learned weights, which can provide insights into the underlying relationship between the input and output variables.

However, other methods for training the output layer have also been explored, such as support vector regression and neural networks. The choice of method may depend on the specific characteristics of the task and the amount and complexity of the training data available.

Overall, linear regression is often considered to be the best method for training the output layer of a reservoir computing network due to its simplicity, efficiency, and interpretability.

Mark

Can I use hebbian learning?

Yes, Hebbian learning can be used to train the connections within the reservoir of a reservoir computing network. Hebbian learning is a type of unsupervised learning that modifies the weights between neurons based on their activity patterns. The idea behind Hebbian learning is that when two neurons are activated at the same time, the connection between them should be strengthened, and when they are not activated together, the connection should be weakened.
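A toy version of the rule as described, assuming bipolar (+1/-1) activity so that “fire together” strengthens a connection and “fire apart” weakens it (with 0/1 activity, plain Hebb only ever strengthens). The clipping bound `w_max` is an added assumption, since plain Hebbian growth is unbounded; `hebbian_update` is a hypothetical helper, not tied to any reservoir library.

```python
def hebbian_update(W, x, eta=0.1, w_max=1.0):
    """One Hebbian step on recurrent weights W for bipolar activity x (+1 / -1)."""
    n = len(x)
    for i in range(n):
        for j in range(n):
            if i != j:
                W[i][j] += eta * x[i] * x[j]                # same sign: stronger; opposite: weaker
                W[i][j] = max(-w_max, min(w_max, W[i][j]))  # clip to keep weights bounded
    return W

# Hypothetical activity: units 0 and 1 always fire together, unit 2 fires when they are silent.
patterns = [[1, 1, -1], [-1, -1, 1]] * 10
W = [[0.0] * 3 for _ in range(3)]
for x in patterns:
    hebbian_update(W, x)
print(W[0][1], W[0][2], W[1][2])   # 1.0 -1.0 -1.0
```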

In reservoir computing, Hebbian learning can be used to adjust the weights between the neurons in the input and hidden layers of the network. This can allow the network to adapt to the statistical properties of the input data and generate more effective reservoir activations for a given task.

However, it’s important to note that Hebbian learning can be slower and less stable than other training methods, such as linear regression. It can also be more difficult to control and interpret the resulting weights. Therefore, the choice of training method may depend on the specific task requirements and the available training data.

Overall, while Hebbian learning is a valid option for training the connections within the reservoir of a reservoir computing network, it may not always be the best choice depending on the specific needs of the task at hand.

Mark

How about back propagation?

Backpropagation is typically not used to train the connections within the reservoir of a reservoir computing network.

The reason for this is that backpropagation requires a feedforward neural network architecture with a fixed number of layers and connections, whereas reservoir computing is based on a recurrent neural network architecture with randomly generated connections. In a reservoir computing network, the connections between the input and hidden layers are fixed and randomly generated, and only the weights between the hidden layer and the output layer are trained.

Therefore, instead of backpropagation, the connections within the reservoir are typically trained using unsupervised learning methods, such as Hebbian learning, or they are randomly generated and kept fixed throughout the training process. The output layer of the network is then trained using a linear regression method, as described in the previous answer.

However, there are some variations of reservoir computing that incorporate feedback connections and allow for a form of backpropagation-like training, such as echo state networks with feedback and liquid state machines. These variations may use feedback connections to allow the network to learn more complex dynamics and patterns in the input data, and may use a form of backpropagation to train these feedback connections. However, the overall architecture and training methods used in these variations of reservoir computing are still fundamentally different from standard backpropagation-based neural networks.

Mark

Are there any methods based on one shot or even zero shot learning?

Reservoir computing is typically not associated with one-shot or zero-shot learning, as it is a form of supervised learning that requires labeled training data to learn the mapping between inputs and outputs. However, there are some variations of reservoir computing that can be adapted for few-shot learning and zero-shot learning tasks.

Few-shot learning involves training a model to generalize to new classes with only a few examples, while zero-shot learning involves training a model to recognize new classes without any examples. These types of learning can be challenging for traditional supervised learning methods, but they have been explored in recent years using techniques such as meta-learning and transfer learning.

One approach for adapting reservoir computing for few-shot learning is to use a small subset of the labeled training data to initialize the reservoir weights, and then fine-tune the output weights using the remaining data. This approach has been explored in some studies and has shown promise for few-shot learning tasks.

Another approach for adapting reservoir computing for zero-shot learning is to use a semantic representation of the output classes, such as word embeddings or attribute vectors, to train the output layer. This allows the network to generalize to new classes based on their semantic similarity to the training classes, rather than relying solely on the input data.
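The attribute-vector idea can be sketched as a nearest-neighbour lookup in the semantic space: the trained readout outputs an attribute vector, and the input is assigned to whichever class description is closest, including classes never seen in training. The attributes, class names and “readout output” below are invented for illustration.

```python
import math

# Hypothetical attribute vectors: [has_stripes, has_hooves, is_large]
class_attributes = {
    "horse": [0.0, 1.0, 1.0],
    "tiger": [1.0, 0.0, 1.0],
    "zebra": [1.0, 1.0, 1.0],   # assume no zebra examples were used in training
}

def nearest_class(predicted, classes):
    """Pick the class whose attribute vector is closest (Euclidean) to the readout output."""
    def dist(a, b):
        return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))
    return min(classes, key=lambda name: dist(predicted, classes[name]))

predicted = [0.8, 0.9, 0.7]     # suppose the trained readout produced this
print(nearest_class(predicted, class_attributes))   # zebra
```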

Overall, while reservoir computing is not traditionally associated with one-shot or zero-shot learning, there are some adaptations and variations that can be used for these types of tasks. However, the effectiveness of these methods may depend on the specific task and the available data.

Mark

Are there any similarities to transformer architecture?

While reservoir computing and transformer architectures are both based on neural network models that can process sequential data, they have some fundamental differences in their design and training methods.

Reservoir computing is based on a recurrent neural network architecture with randomly generated connections between the input and hidden layers. The reservoir is a dynamic system that generates complex temporal patterns in response to the input data, which are then fed into a linear output layer for classification or regression.

Transformers, on the other hand, are based on a self-attention mechanism that allows the network to attend to different parts of the input sequence and capture long-range dependencies. Transformers have been shown to be highly effective for tasks such as natural language processing, where the input data consists of sequences of words or tokens.

However, there are some similarities between the two architectures. Both reservoir computing and transformers can handle input sequences of varying lengths, and both can capture complex temporal patterns and dependencies within the input data. Additionally, both architectures can be adapted for tasks such as classification, regression, and sequence-to-sequence prediction.

Moreover, recent research has explored the possibility of combining elements of both architectures, such as using a learned attention mechanism in the reservoir of a reservoir computing network, or incorporating a reservoir-like structure within a transformer architecture. These hybrid models have shown promise in certain tasks and are an area of active research.

Overall, while there are some similarities between reservoir computing and transformer architectures, they have different underlying principles and design choices, and each approach may be better suited for different types of problems and data.

Mark

Is there a possible cross-over to transformers?

Yes, there is a possible cross-over between reservoir computing and transformers. Both approaches are based on neural network architectures that can handle sequential data, although they differ in their specific design and training methods.

Reservoir computing is based on a recurrent neural network architecture with randomly generated connections between the input and hidden layers. The reservoir is a dynamic system that generates complex temporal patterns in response to the input data, which are then fed into a linear output layer for classification or regression.

Transformers, on the other hand, are based on a self-attention mechanism that allows the network to attend to different parts of the input sequence and capture long-range dependencies. Transformers have been shown to be highly effective for tasks such as natural language processing, where the input data consists of sequences of words or tokens.

While the specific design and training methods of reservoir computing and transformers are different, there are some potential cross-overs between the two approaches. For example, the random connections in reservoir computing could be replaced with a learned attention mechanism inspired by transformers, allowing the network to attend to different parts of the input sequence and generate more informative reservoir activations. Similarly, the self-attention mechanism in transformers could be combined with the linear output layer of reservoir computing to generate more effective output predictions.

Overall, while there are some potential cross-overs between reservoir computing and transformers, it is an area of active research and more work is needed to explore the potential benefits and drawbacks of combining these approaches.

Is this ChatGPT answering?

Yes.

I am using it almost daily to develop a feel for what it can and cannot do.

I try to ask it about things that I think I know so I can get a feel for how well it does in coming up with answers.

In general it knows “about” things; it is just very iffy about the exact details. Considering that it is abstracting a statistical choice of the words that appear in a subject area, it will be able to offer the central tendency in general terms. For this to work, there will have to be a lot of similar things said about the thing I am asking.

For obscure things where there are not a lot of words said about the thing I am asking, it tends to be “off the wall” and occasionally, a total confabulation.

I double check any reference it offers and a surprising fraction of named references are totally bogus. If I am not too specific on the search terms I usually find that there are a lot of papers “like” the reference offered, just not the named author or the exact named paper. It feels a bit like when I am searching for a paper that I have saved on my hard drive where I can’t remember the exact name or who the author was; “I remember reading a paper about that!”

Most of the people here know about the reservoir computing method. Feel free to judge for yourself, but I am in agreement with the answers it offered. I pushed it at the edges to see what it would do - I am satisfied that the answers it offered were appropriate.

I particularly like how it keeps the context of the conversation. I have not really seen that in prior chatbots I have played with. That is one of the features I try to push in conversations, and so far it has done a really good job of following the focus of a discussion. Notice that I used the word “reservoir” directly only twice, at the start of the entire exchange. It did not jump to transformers when I introduced that.

Look at how well it answered the “crossover” question. It knew that the main topic was reservoir and did a fair job of identifying the key elements that corresponded and what part of “this one” would work in “that one.” Color me impressed.

You’re right. I used a similarity metric for my first attempt at this “amplification” of predictive coding. The “vectors” I tried to use to condense the network connections had values based on similarity metrics. I used some variations of my own, and then settled on one by Dekang Lin:

Dekang Lin. “An Information-Theoretic Definition of Similarity.” In Proceedings of the 15th International Conference on Machine Learning, 1998, pp. 296–304. Morgan Kaufmann.

How that’s applied is described in my old Vector Parser paper, earlier pre-print here:

Parsing using a grammar of word association vectors
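For readers unfamiliar with it, the word-similarity form of Lin’s measure divides the information in the contexts two words share by the information in all of their contexts. A sketch, assuming each word is already described by context features (e.g. a dependency relation plus the neighbouring word) with positive mutual-information weights; the two feature sets and their weights are made up. Note that the result is a single number.

```python
def lin_similarity(f1, f2):
    """f1, f2: dicts mapping context feature -> positive information weight I(word, feature)."""
    shared = set(f1) & set(f2)
    numerator = sum(f1[f] + f2[f] for f in shared)
    denominator = sum(f1.values()) + sum(f2.values())
    return numerator / denominator if denominator else 0.0

# Hypothetical weighted features: (relation, other word) -> weight
beer = {("obj-of", "drink"): 3.0, ("mod", "cold"): 1.5, ("obj-of", "brew"): 2.0}
wine = {("obj-of", "drink"): 2.5, ("mod", "red"): 2.0, ("obj-of", "brew"): 1.0}
print(round(lin_similarity(beer, wine), 3))   # 0.708, i.e. (3+2.5+2+1) / (6.5+5.5)
```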

But, obvious in hindsight, I later realized that a single similarity value washed out a lot of structure. I thought I was capturing different dimensions which could be constructed by combining two vectors. But two words can be similar in some contexts and different in others. I finally came to the conclusion it is not possible to abstract the context information more compactly than the network itself.

Each projection you make from that network can be a kind of similarity metric on it. But it is the number of… dimensions of such similarity which can be projected out that really interests me.
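A toy illustration of a word being similar in some contexts and different in others, assuming Jaccard overlap of context sets as the similarity (a stand-in chosen for simplicity, not the method described here) and two hand-picked “projections” of the context space. The contexts are invented.

```python
def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# Hypothetical contexts: (word before, word after) pairs observed around each word.
contexts = {
    "bank":   {("river", "erodes"), ("muddy", "slope"), ("the", "lends"), ("the", "charges")},
    "shore":  {("river", "erodes"), ("muddy", "slope"), ("the", "line")},
    "lender": {("the", "lends"), ("the", "charges"), ("a", "offers")},
}

def projected_similarity(w1, w2, keep):
    """Similarity measured only over the contexts in `keep` (one 'projection')."""
    return jaccard(contexts[w1] & keep, contexts[w2] & keep)

geography = {("river", "erodes"), ("muddy", "slope"), ("the", "line")}
finance = {("the", "lends"), ("the", "charges"), ("a", "offers")}

print(jaccard(contexts["bank"], contexts["shore"]),
      jaccard(contexts["bank"], contexts["lender"]))      # 0.4 0.4
for name, keep in (("geography", geography), ("finance", finance)):
    print(name,
          round(projected_similarity("bank", "shore", keep), 3),
          round(projected_similarity("bank", "lender", keep), 3))
# geography 0.667 0.0
# finance 0.0 0.667
```

Over all contexts, “bank” looks equally similar to both words; each projection tells a different story.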

So, yes, you’re right, similarity of context will define a kind of metric on sub-sequences. But that similarity won’t be fixed. I think the dimensions possible may be infinite. Or at least as big as all different possible combinations of contexts of said sub-sequences.

I don’t know. Is that the same as talking about the distance between two points? Perhaps. It is just that the actual distance measures will vary wildly, and, unlike with ANNs and “learning”, I think that wild variation is the interesting bit, not the similarity itself.

Reference again:

A Network of Integrate and Fire Neurons for Community Detection in Complex Networks. Marcos G. Quiles, Liang Zhao, Fabricio A. Breve, Roseli A. F. Romero. [https://www.fabriciobreve.com/artigos/dincon10_69194_published.pdf]

I agree that the big problem with these transformer chatbots is that, completely deadpan, they jam absolute fictions in with a lot of facts.

In the experiments I did, it seemed like more work to pick apart what might be false than to use a conventional search engine.

Why deliberately mix your search results with random falsehood? It means you have to laboriously check everything. And some falsehood might slip through.

Anyway, on the relationship between transformers and reservoir computing.

From memory:

The parallel of reservoir computing as a kind of RNN seems valid.

I’m not sure there is any “learning” taking place in reservoir computers though. Is there? No deliberate structuring, anyway? The whole idea of a reservoir in my understanding is that it is unstructured, and dynamic.

(The main difference I recall between HTM and traditional ANNs was also this focus on a “learning” mechanism. HTM rejected back-propagation specifically(?) as biologically implausible. Maybe HTM has also drifted in the direction of internally structuring in favour of “learning” recently. I haven’t kept up. But originally it benefited from not having the same constraints for “learning” as ANNs.)

Anyway, from my point of view, it was the “learning” constraint which crippled RNNs. Originally the problem was that RNNs could not be learned. This was how I remember them being conceived through the 1990s: a solution to model language in principle, but in practice, unlearnable.

As I understand it, the history through RNN, to LSTM, to now transformers, was basically one of reducing an infinite context. To make RNNs learnable.

LSTM (Hochreiter and Schmidhuber) was the first to do this by reducing the infinite context to a single… vector(?) carried forward.

But with LSTM you were forced to carry the context forward all the time. And that context would get overwritten.

The big advance of transformers, as I understand it, was that the “attention” mechanism essentially put the focus fully on arbitrary context, and removed the whole “carry it forward” burden. So transformers kept the advantage LSTM had over RNNs, that the system did not need to keep an infinite context. But they lost LSTM’s disadvantage, that context was constantly overwritten as it was carried forward.
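A rough sketch of that contrast, assuming single-head scaled dot-product attention with no learned projections, and a toy leaky accumulator (not a real gated LSTM) for the “carry it forward” case: attention can weight the relevant position directly wherever it sits, while the carried state halves the first item’s contribution at every step.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention: weight every stored position by its query-key match."""
    scale = math.sqrt(len(query))
    weights = softmax([sum(q * k for q, k in zip(query, key)) / scale for key in keys])
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return out, weights

# Five positions; only the first carries the information (value 1.0).
keys = [[4.0, 0.0]] + [[0.0, 1.0]] * 4
values = [[1.0]] + [[0.0]] * 4
out, weights = attention([4.0, 0.0], keys, values)

# Toy "carry it forward" alternative: one state, partly overwritten at every step.
carried = 0.0
for v in values:
    carried = 0.5 * carried + v[0]

print(round(weights[0], 4), round(out[0], 4))   # 1.0 1.0
print(carried)                                   # 0.0625
```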

So the historical progression from RNN, to LSTM, to transformers, was all about innovations in ways to deal with “learning” over what is an essentially infinite context of a whole sequence.

If you don’t try to “learn” though, you can pare it back to the metal. You can have things dynamic as in reservoir computing. You can keep that infinite context. And you can structure over it, and structure dynamically too.

Which I think is necessary, because all the different ways of structuring may be chaotic.

All you need is a meaningful prior to do the structuring.

Notice also though that it is the way they handle novelty which is the big issue with transformers.

This is also my focus. How to deal with novelty. How can we constrain novelty to be “meaningful”? What makes novelty “meaningful”?

I think novelty is at the core of it. Transformers just interpolate between learned forms. At the limit (“not a lot of words said about the thing I am asking”, not enough data points to get a good similarity overlap?) their interpolation is random.

By contrast, I make novelty central. It’s not the point at which similarity metrics start to fade and become random anymore. “Meaning” is no longer only by reference to what is already known, equated to a similarity metric with a standard/label. The essential operation isn’t comparison, but recombination. I don’t need a lot of existing data points to specify a concept. I actively generate new “concepts” as new combinations of contexts. I replace “learning” with the construction of novel (even chaotic) groupings. And I do that by dynamically projecting out different groupings of contexts.

The fact that shared context is in itself meaningful makes all these new combinations of contexts inherently meaningful, even though they are new.

I observed that as well, but it quickly shifts context as it makes things up. Still impressive though.

I find this very inspiring! Yet the problem definition may/shall shift to “making the meaning of machine models converge to human-compatible comprehension”?

Novel utilities are great things so long as they serve human purposes, or are at least comprehensible/controllable by humans.

Apparently we can label things according to our knowledge, though I’m afraid we don’t really know “how” such knowledge is represented/possessed in our minds, and so we don’t know how to grant nonhuman mechanisms the same ability “to know” the “label” for specific inputs.

Numeric methods (of which back-propagation is the classic example) can make machines mimic our reasoning by training on the labels we give them. Nevertheless, it seems “similarity” should be inherently multi-dimensional, with each “scalar numeric distance metric” reflecting one particular aspect of similarity (as far as an observing subject is concerned). So can I say:

“higher intelligence” can discover/know the “similarity-metric-dimensions”, while “lower intelligence” just computes each dimension’s “scalar-metric-function”?

And would “what to measure” w.r.t. similarity ultimately come down to “purpose making”? I.e. what are real concerns and what are not, e.g. survival, pleasure, joy.

True. But dealing with language helps here. Because with language you are driven to find groupings which are meaningful for humans. I would say that’s because language is the data which is (most directly?) generated by the brain itself. So it makes sense that its structure matches the way the brain likes to structure data.

Also, the brain is structuring this stuff, language, with the express purpose of making it meaningful to another individual. Another reason for it to structure language in the way the brain finds meaningful.

I don’t think there is anything particularly mysterious about how the brain finds meaning in things, anyway. Or perhaps it seems that way to me because I’m used to the way language manifests structure. Either way, it seems obvious to me that the brain structures the world in ways which help it predict cause and effect: grouping things which share contexts/predictions.

We don’t know. But I’m guessing “sets of things that share cause effect predictions.”

It works for language anyway. The sets it generates are quite meaningful. Lots of words with the same or similar meaning, etc.

The only trick that has foxed us historically is that those sets turn out to contradict one another! That observation broke linguistics in the 1950s! It’s still in pieces.

Cause-effect prediction seems, to my mind, to fit quite well as a plausible high-priority form of “purpose making”.

Anyway, it works as a principle to structure language in ways that match “meaningful” groupings that humans make over the same data.

Yes. I agree. Mere mimicry is a low level of intelligence, if it is intelligence at all. And that is what our tech is doing at the moment. Intelligence proper must be connected with novelty, multi-dimensionality, and especially finding new dimensions. It is my feeling that this multi-dimensionality is somehow connected to the generation of contradictions. I think these contradictions will turn out to be a feature, not a bug. If we didn’t have them, then at some point all meaning might be complete, and there would be nothing more to know. But you can’t “learn” this, and in particular you can’t discover/know new “dimensions”, unless you have a relational principle. Well… I don’t know. Perhaps you could “learn” the relational principle. But if it generates contradictions, then “learning” is going to meet a problem. Things will wash out. Unless you keep enormous amounts of context information to separate all the contradictions. And you certainly couldn’t “learn” all such sets, if you just kept on finding new ones!

The foundation of language is grounded in the brain’s structures that are most closely associated with motor-sensory aspects of objects. The Chomsky “built-in” language features are certainly built around this grounding. While you are at it, look at figure two of the linked Pulvermüller paper and reflect on how it suggests an RNN architecture.

Very nice paper, Mark. Thanks for that. That’s the most interesting paper I’ve read in a long time! :-) I’ve seen very few (if any!) which map language mechanisms to neural connectivity.

I wouldn’t argue with where semantic meaning is stored, especially in the sense of qualia, and the “feeling of being there” which @complyue described as the subjectively intuitive quality of elements we “recombine” when we “think”.

It’s how they recombine which interests me.

Actually, what I’m arguing has a very close analogue in that paper: exactly figure 2, which you cite below as resembling an RNN:

Very nice. That figure 2 is work taken from exactly what I identified as the most interesting paper for me cited in that review.

Discrete combinatorial circuits emerging in neural networks: A mechanism for rules of grammar in the human brain? Friedemann Pulvermüller, Andreas Knoblauch

Difficult to find without a paywall; everywhere has scumbag “publishers” trying to clip a ticket… but I finally found it in… Russia! (Bless them for this less violent example of international delinquency!)

https://sci-hub.ru/10.1016/j.neunet.2009.01.009

They say:

“In this present work, we demonstrate that brain-inspired networks of artificial neurons with strong auto-associative links can learn, by Hebbian learning, discrete neuronal representations that can function as a basis of syntactic rule application and generalization.”

Similar to what I’m saying.

And how do they do that?

“Rule generalization: Given that $a$, $b$ are lexical categories and $A_i$, $B_j$ lexical atoms

$a = \{A_1, A_2, \ldots, A_i, \ldots, A_m\}$

$b = \{B_1, B_2, \ldots, B_j, \ldots, B_n\}$,

the rule that a sequence $ab$ is acceptable can be generalized from a set of $l$ encountered strings $A_iB_j$ even if the input is sparse”

So I understand this to say, working from the other direction, that given an observed set of encountered strings $A_iB_j$, even if it is “sparse” (not observed for all $i$ and $j$), you might support other combinations $A_iB_j$ that have not been observed.

This appears to be just my AX from {AB, CX, CB}, from my discussion in this thread above (oh, actually not in this thread? In @JarvisGoBrr's “elaborator stack machine” thread: The elaborator stack machine - #5 by robf)
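Read symbolically, both the quoted rule and the AX example can be sketched in a few lines. This is a minimal Python sketch under stated assumptions: observed strings are (first word, second word) pairs; `supported` does the one-step substitution behind AX from {AB, CX, CB} (A and C share the context B, so the observed CX lends support to AX); `generalize` is a hypothetical stand-in for the paper’s category abstraction, using union-find over observed pairs rather than Hebbian sequence detector neurons. Neither function is taken from the paper.

```python
from collections import defaultdict


def supported(pair, observed):
    """One substitution step: (x, y) is supported if it was observed, or if
    some other word w shares a right context with x and (w, y) was observed."""
    if pair in observed:
        return True
    x, y = pair
    right = defaultdict(set)  # first word -> words seen after it
    for first, second in observed:
        right[first].add(second)
    x_ctx = right.get(x, set())  # .get avoids inserting x into the defaultdict
    return any(
        w != x and bool(x_ctx & w_ctx) and y in w_ctx
        for w, w_ctx in right.items()
    )


def generalize(observed):
    """Sparse rule generalization (hypothetical sketch): link first-position
    and second-position words through observed pairs with union-find, then
    accept (x, y) when both fall in the same connected component, i.e. in
    the same pair of inferred categories a and b."""
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for first, second in observed:
        # Tag positions so a word seen first and a word seen second stay distinct.
        parent[find(("L", first))] = find(("R", second))

    return lambda x, y: find(("L", x)) == find(("R", y))
```

For example, `supported(("A", "X"), {("A", "B"), ("C", "X"), ("C", "B")})` returns `True`, while an unrelated pair such as `("A", "Y")` is rejected. Because `generalize` takes the full transitive closure, any chain of shared contexts merges categories; if overlapping sets contradict, as argued here, fixed categories of this kind would tend to collapse together.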

It’s all very nice. I hadn’t seen a neural mechanism conjectured on this basis before. And it is very similar to mine. (Which is actually not surprising, because the whole distributional semantics idea has been the basis of attempts to learn grammar for years. It’s in their glossary.)

The difference from me seems to be that they conjecture a neural coding mechanism for abstracted categories based on sequence detector neurons. Observed sequences are explicitly coded in sequence detector neurons between word representations, and then categories are abstracted by forming interconnections between the sequence detector neurons. So an abstract syntactic category is represented physically in interconnected sequence detector neurons. This might indeed have a close RNN analogue.

By contrast, while I agree that syntactic categories can be generated from overlapping sets of their $A_iB_j$ type (this is just distributional semantics), I think those overlapping sets will contradict one another. That’s the big difference. So it will not be possible to represent them using fixed sets of sequence detector neurons. Instead, I say the “rule” will need to be continually rebuilt anew by projecting out different overlaps between the sequence sets, which vary according to context (plausibly using oscillations to identify closely overlapping sets).

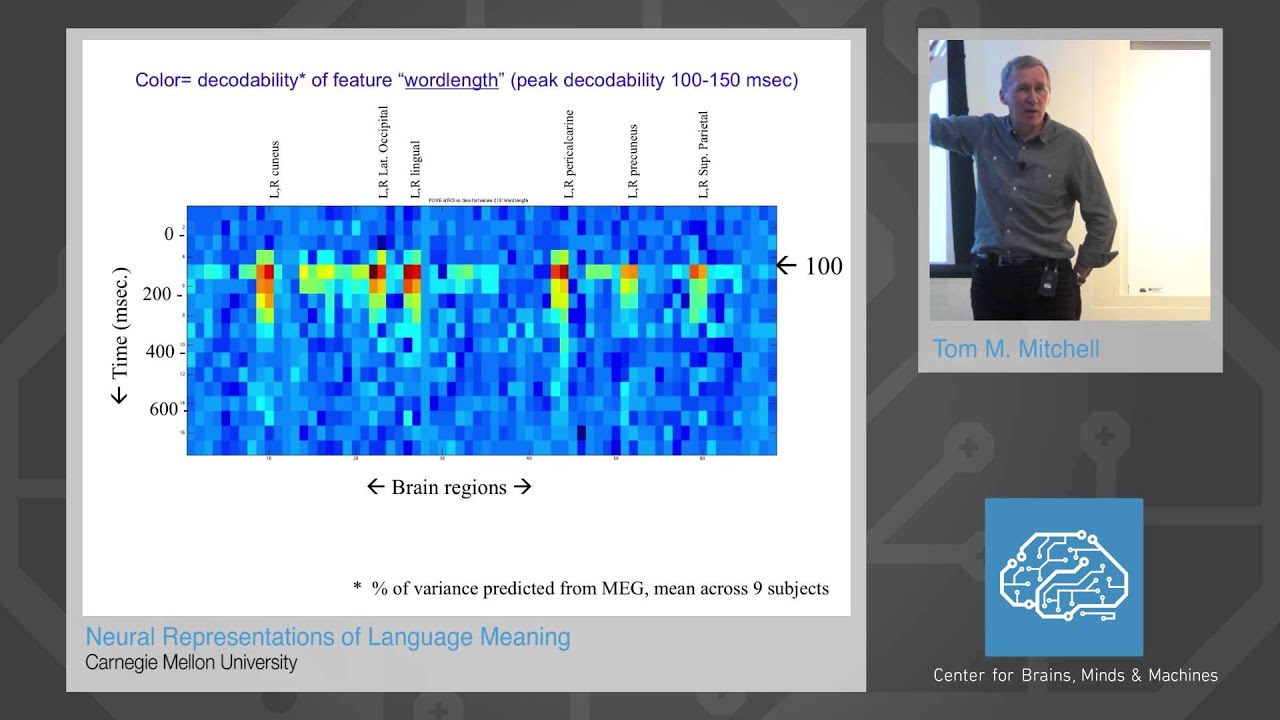

I would justify that constant reorganization idea by citing evidence that neural activations for sequences of words are not static combinations of their individual word activations plus some mutual activation among a static set of sequence detector neurons. My favourite example is the work discussed by Tom Mitchell here, which shows a complete reorganization of activation patterns. Surprisingly, the patterns of neural activation for word combinations seem to completely reorganize the individual word activation patterns:

Prof. Tom Mitchell - Neural Representations of Language Meaning

But the basic idea of a separate “combinatorial semantics” area, where new categories are synthesized by combinations of sets of observed sequences is the same in my thesis and the RNN like mechanism described in this paper. Very nice. Good to see we’re on the same track.

To repeat, the only difference between what I am suggesting and what is happening in this paper (and probably in transformers) is that I say the sets will contradict. So we must model them by finding context-appropriate set associations at run time. Perhaps by as simple a mechanism as setting the sequence network oscillating, and seeing which sets of observed sequences synchronize.
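A toy illustration of what “contradicting sets” could mean, under my own assumptions: group words by a shared right context, and flag pairs of sets that overlap without either containing the other. Such pairs cannot both be classes in one fixed partition, so the grouping has to be chosen per context at run time. The word pairs are invented for the example, and plain set lookups stand in for the oscillation/synchronization mechanism suggested above, which is not modelled.

```python
from collections import defaultdict
from itertools import combinations


def context_sets(pairs):
    """Map each right-context word to the set of words observed before it."""
    sets = defaultdict(set)
    for first, second in pairs:
        sets[second].add(first)
    return dict(sets)


def conflicts(sets):
    """Context pairs whose word sets overlap without one containing the other.

    Such sets cannot both be classes in a single fixed partition of the words.
    """
    out = []
    for (c1, s1), (c2, s2) in combinations(sorted(sets.items()), 2):
        if s1 & s2 and not (s1 <= s2 or s2 <= s1):
            out.append((c1, c2))
    return out


def project(pairs, context):
    """Run-time grouping: the words that share the given context."""
    return context_sets(pairs).get(context, set())


if __name__ == "__main__":
    # Invented data: "bank" shares a context with "loan" and another with "river".
    pairs = [("bank", "account"), ("loan", "account"),
             ("bank", "erosion"), ("river", "erosion")]
    print(conflicts(context_sets(pairs)))
    print(sorted(project(pairs, "account")))
    print(sorted(project(pairs, "erosion")))
```

Here “bank” groups with “loan” in one context and with “river” in another, while “loan” and “river” share nothing, so no single assignment of “bank” to one class captures both groupings.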

Nobody has imagined contradictory meaning sets before, and people still don’t. Which is holding us back. But trying to abstract language structure drives you to it, if you have eyes to see it. And once you’ve seen these contradictions appear in language structure, you start finding them all over the place. (For instance, there’s an analogue in maths. Chaitin: “Incompleteness is the first step toward a mathematical theory of creativity…” An Algorithmic God | Gregory Chaitin | Inference)

I don’t think there is anything particularly mysterious about how the brain finds meaning in things, anyway. Or perhaps it seems that way to me because I’m used to the way language manifests structure.

It’s still rather mysterious to me. I know language DOES manifest structure, but those structures feel quite concealed to me. Looking forward to hearing more about “the way” from you!

the brain structures the world in ways which help it predict cause and effect: grouping things which share contexts/predictions.

I think Buddhism takes an extreme position in its belief in cause and effect (karma). Since the relationship does not appear perfectly absolute and exact when seen within one person’s entire lifetime, Buddhists turn to samsara for an explanation, which has no scientific proof to date.

Besides the relief such a belief brings to certain mental pains, I wonder whether Buddhists are actually correct that cause and effect is an essential primitive of the conceivable world, leaving science to catch up, maybe after hundreds or thousands of years?

At the very least, Buddhism is very good at settling contradictions that arise in the mind.

… this multi-dimensionality is somehow connected to the generation of contradictions. I think these contradictions will turn out to be a feature not a bug.

…

Perhaps you could “learn” the relational principle. But if it generates contradictions, then “learning” is going to meet a problem. Things will wash out. Unless you keep enormous amounts of context information to separate all the contradictions. And you certainly couldn’t “learn” all such sets, if you just kept on finding new ones!

I see this as a really insightful description of how our AIs work today. They are kind of free of the mathematically strict semantics implemented by digital computers, which are in turn based on contradiction-averse math, yet they still rely on such computer systems to prepare, store, and process their input, label, and output data.

Maybe contradictions can only be a “feature” when represented by data/structures other than digital forms?