Thanks for the quick feedback.

To students of HTM School, and to others here even slightly interested in the theory, it may be obvious that I am trying to replicate the mouse grid cell experiment of Moser and Moser. If such a… simple (heh) experimental result can be reproduced in some sense using htm.core, I would feel much more confident in the architecture everyone discusses here.

----------------------------------------------------------------------------------------------------------

The (two-dimensional) experiment so far:

A single cortical column

Feedforward input to L4 and L6a:

The ‘mouse’ casts rays to the walls of a ‘box.’ Each ray returns the distance to the wall it hits. That distance and the ray’s egocentric angle are both encoded. The encodings of all rays are concatenated into a single ‘sensory SDR’, which is pooled into layer 4.
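For anyone wanting to try something similar, here is a minimal sketch of the sensory side. It assumes a 10 by 10 axis-aligned box, 8 evenly spaced rays, and a simple bucket encoder (40 bits, 5 active) for both distance and egocentric angle. Plain Python sets of active bit indices stand in for htm.core `SDR` objects, and `ray_distance`, `encode_scalar` and `sensory_sdr` are hypothetical helpers, not the code used in this experiment.

```python
import math

BOX_W, BOX_H = 10.0, 10.0     # assumed box size
N_RAYS = 8                    # assumed number of rays, evenly spaced around the head
ENC_SIZE, ENC_ACTIVE = 40, 5  # assumed bits per encoding / active bits per encoding


def ray_distance(x, y, angle):
    """Distance from (x, y) inside the box to the wall hit by a ray at `angle` (world frame, radians)."""
    dx, dy = math.cos(angle), math.sin(angle)
    hits = []
    if dx > 0:
        hits.append((BOX_W - x) / dx)
    elif dx < 0:
        hits.append(-x / dx)
    if dy > 0:
        hits.append((BOX_H - y) / dy)
    elif dy < 0:
        hits.append(-y / dy)
    return min(hits)  # the nearest wall along the ray


def encode_scalar(value, lo, hi, size=ENC_SIZE, active=ENC_ACTIVE):
    """Bucket encoder: `active` contiguous bits whose start position tracks `value`."""
    value = min(max(value, lo), hi)
    start = round((value - lo) / (hi - lo) * (size - active))
    return set(range(start, start + active))


def sensory_sdr(x, y, heading):
    """Concatenate the distance and egocentric-angle encodings of every ray.
    Returns (active bit indices, total SDR width)."""
    max_dist = math.hypot(BOX_W, BOX_H)
    bits, offset = set(), 0
    for i in range(N_RAYS):
        ego_angle = 2 * math.pi * i / N_RAYS            # ray angle relative to the head
        dist = ray_distance(x, y, heading + ego_angle)  # cast in the world frame
        for enc in (encode_scalar(dist, 0.0, max_dist),
                    encode_scalar(ego_angle, 0.0, 2 * math.pi)):
            bits |= {b + offset for b in enc}  # shift into this field's slot
            offset += ENC_SIZE
    return bits, offset
```

Since each ray’s egocentric angle is fixed, its encoding never changes in this sketch; only the distance bits move as the mouse moves or turns. A periodic (wrap-around) encoder is arguably a better fit for angles, which the sketch skips for brevity.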

The mouse also moves forward at a constant speed. Its xy direction of motion is generated pseudo-randomly using Perlin noise. Every ten timesteps, the egocentric distance travelled and the change in ‘head direction’ are encoded and pooled into layer 6a.

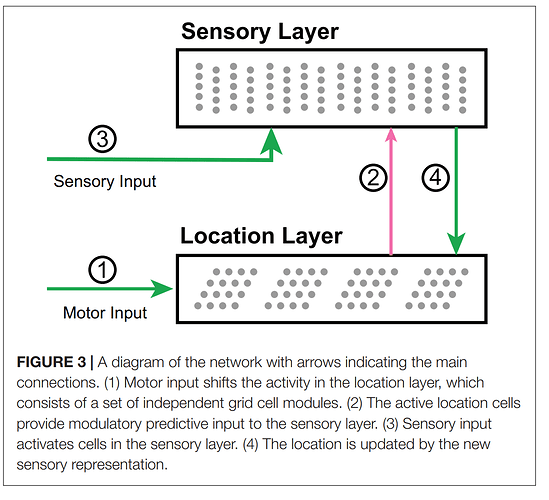
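The motion input can be sketched in the same spirit. This assumes the heading is read directly from 1-D Perlin (gradient) noise, a speed of 0.1 per step, and a simple turn-around rule at the walls; `Perlin1D` and `simulate` are hypothetical names, and the wall handling is a guess rather than something described in the setup above.

```python
import math
import random


class Perlin1D:
    """Minimal 1-D Perlin (gradient) noise: a random slope at each integer
    lattice point, blended with the quintic fade curve 6f^5 - 15f^4 + 10f^3."""

    def __init__(self, seed=0, n=256):
        rng = random.Random(seed)
        self.grad = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        self.n = n

    def __call__(self, t):
        i = math.floor(t)
        f = t - i
        g0, g1 = self.grad[i % self.n], self.grad[(i + 1) % self.n]
        u = f * f * f * (f * (f * 6 - 15) + 10)
        return (1 - u) * (g0 * f) + u * (g1 * (f - 1))


def wrap(a):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def simulate(steps, speed=0.1, box=10.0, window=10, freq=0.05, seed=0):
    """Constant-speed walk whose heading follows Perlin noise. Every `window`
    steps, yield (distance travelled, change in head direction)."""
    noise = Perlin1D(seed)
    x = y = box / 2
    bounce = 0.0  # accumulated half-turns from wall hits (assumed wall rule)
    heading = start_heading = 2 * math.pi * noise(0.0)
    dist = 0.0
    for t in range(1, steps + 1):
        heading = bounce + 2 * math.pi * noise(t * freq)
        nx, ny = x + speed * math.cos(heading), y + speed * math.sin(heading)
        if not (0.0 <= nx <= box and 0.0 <= ny <= box):
            bounce += math.pi  # turn around instead of leaving the box
            heading += math.pi
            # Clamp in case a corner still pushes the step outside.
            nx = min(max(x + speed * math.cos(heading), 0.0), box)
            ny = min(max(y + speed * math.sin(heading), 0.0), box)
        dist += math.hypot(nx - x, ny - y)
        x, y = nx, ny
        if t % window == 0:
            yield dist, wrap(heading - start_heading)
            dist, start_heading = 0.0, heading
```

Each yielded pair is what would then be encoded and pooled into layer 6a. Away from the walls the distance per window is simply `window * speed`, so in this sketch most of the information sits in the head-direction change.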

Contextual input between L4 and L6a:

In intermediate steps, the active cells and winner cells of L6a are passed to L4 as external input, and the activation of L4’s temporal memory cells is computed on L4’s active columns. The same is done in the other direction, from L4 to L6a.
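To make the L4/L6a exchange concrete, here is a toy sketch. It is not htm.core’s `TemporalMemory`: `ToyContextLayer` and `step` are hypothetical, each cell has a single distal segment with binary synapses, and it assumes both layers use the other layer’s active and winner cells from the previous step as their context.

```python
from dataclasses import dataclass, field


@dataclass
class ToyContextLayer:
    """Toy temporal-memory layer whose distal context comes only from another
    layer. Simplifications (not htm.core): one segment per cell, binary
    synapses, no permanences, no decay."""
    cells_per_column: int = 4
    threshold: int = 2  # external active cells needed to make a cell predictive
    segments: dict = field(default_factory=dict)  # cell -> presynaptic external cells
    active_cells: set = field(default_factory=set)
    winner_cells: set = field(default_factory=set)

    def compute(self, active_columns, ext_active, ext_winners, learn=True):
        predictive = {c for c, seg in self.segments.items()
                      if len(seg & ext_active) >= self.threshold}
        self.active_cells, self.winner_cells = set(), set()
        for col in sorted(active_columns):
            cells = range(col * self.cells_per_column, (col + 1) * self.cells_per_column)
            hits = [c for c in cells if c in predictive]
            if hits:  # predicted by context: only those cells fire
                self.active_cells.update(hits)
                self.winner_cells.update(hits)
            else:  # unpredicted: burst, and let the least-used cell learn the context
                self.active_cells.update(cells)
                winner = min(cells, key=lambda c: len(self.segments.get(c, ())))
                self.winner_cells.add(winner)
                if learn:
                    self.segments.setdefault(winner, set()).update(ext_winners)
        return self.active_cells


def step(l4, l6a, l4_columns, l6a_columns, learn=True):
    """One intermediate step: each layer gets the other's previous active and winner cells."""
    prev_l4 = (set(l4.active_cells), set(l4.winner_cells))
    prev_l6a = (set(l6a.active_cells), set(l6a.winner_cells))
    l4.compute(l4_columns, *prev_l6a, learn=learn)   # L6a -> L4
    l6a.compute(l6a_columns, *prev_l4, learn=learn)  # L4 -> L6a
```

With two alternating inputs, both layers burst for the first few steps and then settle on one cell per active column, because each winner cell’s segment now matches the context supplied by the other layer.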

---------------------------------------------------------------------------------------------------

I had been hoping to get some semblance of grid cells in L6a with this architecture. The results are interesting, but I haven’t yet achieved the main goal.

At this point I am considering extending the model with an object layer, L2/3. I am unsure, though, which active columns to use when computing L2/3 temporal memory cell activation. Those from L4 (i.e. L4’s spatial pooler)? Perhaps I should spatially and temporally pool the active cells of L4…
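One possible reading of temporally pooling L4’s active cells is a union-style pooler: keep a decaying score per L4 cell, add to it whenever the cell is active (more if it was also predicted), and report the top-k cells as the input to L2/3. The sketch assumes exactly that; `UnionPooler`, its parameters and the weighting are hypothetical, not an htm.core class.

```python
from collections import defaultdict


class UnionPooler:
    """Union-style temporal pooler (hypothetical sketch): each input cell keeps
    a decaying score, boosted when the cell is active and boosted more when it
    was also predicted. The output is the k highest-scoring cells."""

    def __init__(self, k=40, decay=0.9, predicted_boost=2.0, unpredicted_boost=1.0):
        self.k, self.decay = k, decay
        self.predicted_boost, self.unpredicted_boost = predicted_boost, unpredicted_boost
        self.scores = defaultdict(float)

    def compute(self, active_cells, predicted_cells=frozenset()):
        for c in self.scores:  # forget slowly
            self.scores[c] *= self.decay
        for c in active_cells:
            self.scores[c] += (self.predicted_boost if c in predicted_cells
                               else self.unpredicted_boost)
        # Highest score first; ties broken by cell index for determinism.
        ranked = sorted(self.scores, key=lambda c: (-self.scores[c], c))
        return set(ranked[: self.k])
```

Because scores decay slowly, the output changes much less from step to step than L4’s own activity, which is the kind of stability an object layer would need. The pooled cell set could then serve as the input to an L2/3 spatial pooler.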

Another option is to begin tweaking the parameters of my current L4/L6a layers, and perhaps play with topology a bit.

A third idea is to introduce some form of ‘motivation’ or ‘incentive’ for the mouse, rather than random motion. I suspect adding this concept to the simulation may introduce, let’s say, local maxima in cell activation.
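A simple way to add ‘motivation’ without removing the randomness is to rotate the noise-driven heading part of the way toward a goal location. `blend_heading`, `goal` and `weight` are hypothetical names; a reward-driven scheme would be another option.

```python
import math


def blend_heading(noise_heading, x, y, goal, weight):
    """Rotate a noise-driven heading toward `goal` by fraction `weight`
    (0 = pure random walk, 1 = head straight for the goal)."""
    goal_angle = math.atan2(goal[1] - y, goal[0] - x)
    diff = goal_angle - noise_heading
    diff = math.atan2(math.sin(diff), math.cos(diff))  # shortest signed turn
    return noise_heading + weight * diff
```

With a small `weight`, the path stays noisy but the mouse spends more time near the goal, which is one way such local maxima in cell activation might show up.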

…but I don’t know, so I figured I would run it by the community and see what you all think.