Check out what this old guy has to say in line with HTM.

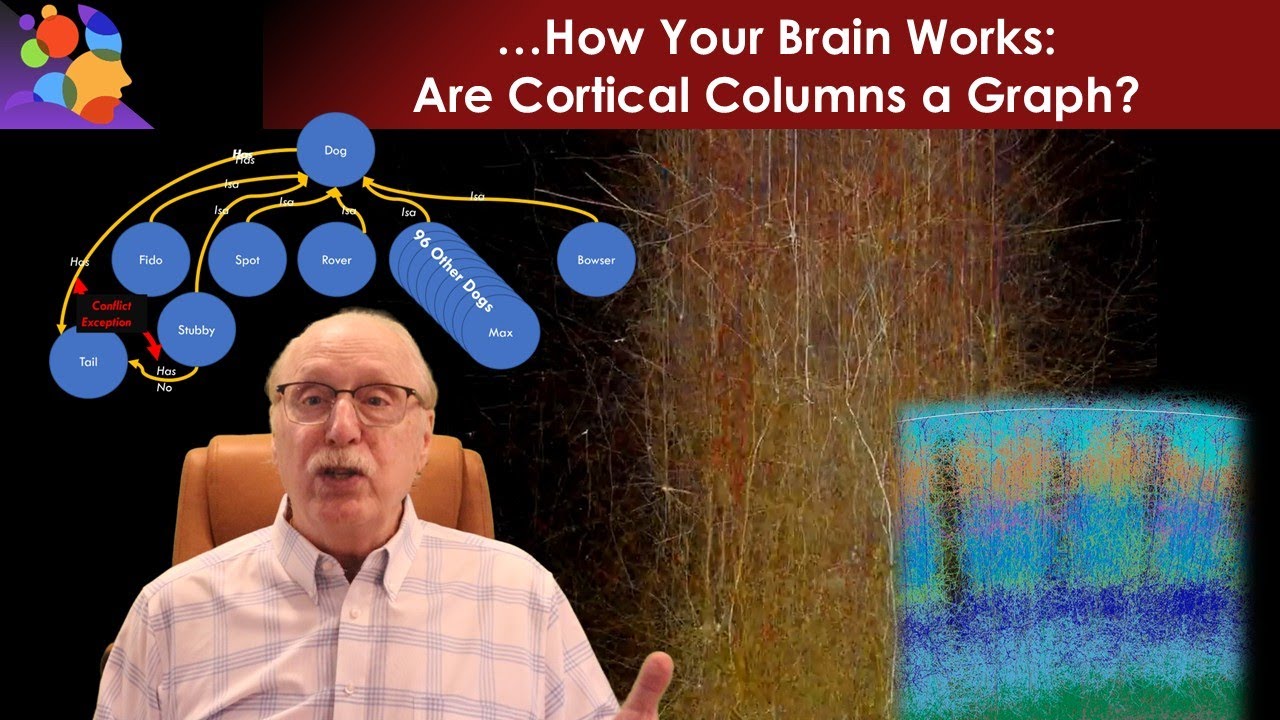

This is seriously on the money. It brings together cortical columns and knowledge representation, and shows why LLMs can never do this.

There is more: https://www.youtube.com/@AINeuroInsight.

In the last video the presenter states: “Knowledge is a graph of relationships complete with inheritance and exceptions.” Ok, I agree. But he skipped a detailed description of how to encode semantic content. Jeff Hawkins suggests receiving sensory input through time and space is where meaning arises. (See “JEFF HAWKINS - Thousand Brains Theory” https://www.youtube.com/watch?v=6VQILbDqaI4) Intelligent machine learning may have to start with path integration through physical space and time in order to generate infrastructure that could later be re-purposed for symbolic relationships.

- Science rules. I have no interest in intelligence explained or related to introspection, so words like ‘meaning’ do not figure. I want to understand, explain and replicate the intelligence of a small animal, such as a mouse, bat or bird, based on science.

- Columns matter. A lot. But all they can do is execute an algorithm, and if we knew what that is we would know a lot. I don’t find what I read in Thousand Brains compelling.

For me, the key observation is that brains model reality. A simple example: you see a ball thrown in the air, and in seconds you predict where it came from, where it will land, whether you can catch it and the steps needed to do so. This depends on the ‘continuous stream of sensorimotor information’ – we agree on that, but it uses that information to run the model, forwards and backwards in time.
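As a toy illustration of running a model forwards and backwards in time from a single observation, here is a minimal sketch. It assumes a drag-free ball under constant gravity and flat ground at height zero; the function name `ground_crossings` and the numbers are made up for the example, and nothing here is a claim about how a brain does it.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumption: no air resistance)

def ground_crossings(x, y, vx, vy):
    """From one observation of a ball (position x, y and velocity vx, vy),
    return ((t_back, x_origin), (t_fwd, x_landing)): the times, relative to
    the observation, and horizontal positions at which it is at ground level."""
    # Height over time is y + vy*t - 0.5*G*t^2; solve for height == 0.
    disc = math.sqrt(vy * vy + 2.0 * G * y)
    t_back = (vy - disc) / G  # non-positive root: the model run backwards
    t_fwd = (vy + disc) / G   # non-negative root: the model run forwards
    return (t_back, x + vx * t_back), (t_fwd, x + vx * t_fwd)

# Ball seen at the top of its arc, 0.5*G metres up, moving sideways at 2 m/s.
(origin_t, origin_x), (landing_t, landing_x) = ground_crossings(0.0, 0.5 * G, 2.0, 0.0)
```

The same equation answers both "where did it come from" and "where will it land"; only the sign of the time step differs.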

I like what the video says about a tree structure, but it’s not enough. It gives no hint of how to model reality.

[BTW consciousness is just the brain modelling itself!]

Human meaning = machine encoding. Machine encoding at scale is the challenge. In the referenced video Jeff Hawkins goes through his example of fingers moving over a coffee cup to make an identification. I surmise machines could adopt a similar process of gathering information about the outside world by attaching time and space coordinates to input stimuli and building a graph.
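A minimal sketch of what "attaching time and space coordinates to input stimuli and building a graph" could look like. The class names `Observation` and `SensoryGraph`, the edge layout, and the coffee cup features are all assumptions for illustration, not anything taken from the video.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Observation:
    feature: str  # what was sensed, e.g. "rim" (hypothetical label)
    x: float      # where it was sensed
    y: float
    z: float
    t: float      # when it was sensed

@dataclass
class SensoryGraph:
    nodes: list = field(default_factory=list)
    edges: dict = field(default_factory=dict)  # (i, j) -> (dx, dy, dz, dt)

    def add(self, obs):
        """Store an observation and link it to the previous one by its displacement."""
        self.nodes.append(obs)
        i = len(self.nodes) - 1
        if i > 0:
            prev = self.nodes[i - 1]
            self.edges[(i - 1, i)] = (
                obs.x - prev.x, obs.y - prev.y, obs.z - prev.z, obs.t - prev.t)
        return i

    def path_integrate(self, start, end):
        """Sum the displacements along consecutive edges from start to end."""
        total = [0.0, 0.0, 0.0, 0.0]
        for i in range(start, end):
            for k, d in enumerate(self.edges[(i, i + 1)]):
                total[k] += d
        return tuple(total)

# A finger moving over a cup: three touches at three places and times.
g = SensoryGraph()
g.add(Observation("handle", 0.0, 0.0, 0.0, 0.0))
g.add(Observation("side", 1.0, 0.0, 0.0, 1.0))
g.add(Observation("rim", 1.0, 2.0, 0.0, 3.0))
```

Storing displacements on the edges rather than absolute positions is a design choice: the relationships between features stay the same if the whole object is moved.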

A column is a cluster: receptive fields of neurons inside a column overlap more than with receptive fields of neurons of other columns. Each neuron is also a fuzzy cluster, of its synapses, that’s how Hebbian learning works. It’s not just a graph, you have to segment it into clusters.

It’s clustering all the way down :).

I can’t see that words like cluster, fields and fuzzy explain anything. The proposition put by this video is that columns are functional units: they execute an algorithm, they have inputs and outputs, and by being linked together into a tree structure they can support symbols and categories.

My suggestion is that this is not enough: they need to support modelling of reality, derived from sensorimotor inputs, projected forward and backward in time.

Columns are similar across mammalian species, but more columns correlates with higher intelligence. It would seem a simple evolutionary adaptation to increase the number of columns, provided the survival benefit outweighs the energy cost.

So the challenge is: what does a column do?

I don’t really buy the coffee cup, but yes, representing reality live as a tree structure of recognised objects in 4 dimensions is attractive. The fox watches the rabbit and predicts where and when it can be caught.

I just told you, it flew right over your head. Clustering is an abstract function, and you are not an abstract thinker.

Here is a different ÆPT perspective/take on consciousness: https://www.aeimcinternetional.org/the-aept-definition-of-consciousnes

It’s an interesting perspective, however I believe it is thinking in the external representation too much, compared to the internal representation. The reason is that “is a” is actually two different concepts, and how they are actually represented internally may well be even stranger. We seem to think that the brain stores a representation closer to language than how I believe it works, which is far more temporally messy.

“a” is a singular type of concept and a concept that is separate to anything and means the same to any other concept. Temporally “a” and whatever follows need to be close enough to fire together, if not synchronously, not in a sequence chain like songbird memory fragments. A type of branching if you think of it spatially as a graph.

“is” in my understanding is actually a signal or guide to reduce the temporal timing proximity of the surrounding concepts and it does not have a concept of its own. My theory is that these temporal guides are learnt in the hippocampus as temporal moderators or amplifiers.

When you say X is a dog, the X and dog have closer neural activation timing, with “is” learnt to more closely correlate the outer concepts, in the same way that “not” may well be a concept and moderator. When you learn X is a dog, that needs a link, however Y is not a dog does not need a link as such, rather a distancing mechanism to avoid repetitive Y is not a dog ending up with a weak synaptic build.

Y not dog

X is dog

“is” being one of the utterings to identify two concepts are correlated together without adding any other secondary conceptual context.

X bad dog / X is bad dog / X bad is dog (depending on word sequencing based on the language used)

Word sequence order in different cultures should also be considered with these models, especially when thinking about translation and conceptual understanding of the same concepts in the same model invariant of language.

Songbird memory fragment sequencing is cortical column chains of the notes and a sentence may well be a comparable song. Dendritic lengths dictate the column to column activation interval representing a concept chain, however the timing is also encoded in the song. Where is the timing stored?

When thinking about HTM and the way that the activations map through, when looked at from a different perspective the processing is just many smaller memory threads, but in HTM calculated synchronously in bulk, with no temporal modifiers.

Time, I believe, is very overlooked in a lot of the models, however it’s challenging to think in a timing based HTM model with a dynamic temporal variation thrown in to add a different dimension to the base HTM on the fly, sort of like the neocortex on its own having no temporal modifiers and then the hippocampus kicking back temporal adjustments. Adding an extra dimension to HTM.

Just some thoughts that may kick out some different ideas.

Science rules. I think you will do better to focus on the scientific examination of small mammals rather than attempting to introspect on human language.

I would expect a mouse to process visual and olfactory sensory input to identify a cat, human, cheese and water etc, put them into categories such as danger, food etc and act accordingly. The question here is do cortical columns figure in that process and how? And does a tree structure figure in that?

My graph continuous thought machine abstracts a text encoding and represents it as an abstract graph with learnable property vectors. At each tick another graph is produced and the model searches graph space for solutions using learnable property vectors to guide it. This module occupies both space and time.

The if-except-if tree concept is useful and goes from the general to the more specific.

If the current letter is ‘a’ then the next letter is ‘n’ except if the previous letter is ‘h’ then the next letter is ‘t’ except if…

Then ‘ma’ gives ‘man’, ‘ha’ gives ‘hat’.

You are just using an ever widening context until you run out of specific exceptions.

And that is to go from the more general response to the more specific.
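The if-except-if idea can be sketched as a small tree in which each exception is a rule with a longer context than its parent. This is only an illustration under my own assumptions: the `Rule` class and its method names are hypothetical, and matching on the end of the text is just one way to express "an ever widening context".

```python
class Rule:
    """If the text ends with `context`, predict `prediction`,
    except if a more specific child rule also matches."""

    def __init__(self, context, prediction):
        self.context = context
        self.prediction = prediction
        self.exceptions = []  # child rules with a wider context

    def except_if(self, context, prediction):
        """Attach a more specific exception and return it so exceptions can nest."""
        child = Rule(context, prediction)
        self.exceptions.append(child)
        return child

    def predict(self, text):
        """Return the most specific matching prediction, or None if no rule applies."""
        if not text.endswith(self.context):
            return None
        for child in self.exceptions:
            result = child.predict(text)
            if result is not None:
                return result  # a specific exception overrides the general rule
        return self.prediction

# General rule: after 'a' comes 'n'. Exception: after 'ha' comes 't'.
rule = Rule("a", "n")
rule.except_if("ha", "t")

man = "ma" + rule.predict("ma")
hat = "ha" + rule.predict("ha")
```

Lookup walks from the general rule down to the deepest exception that still matches, so the cost per prediction grows with the depth of the tree rather than with the total number of rules.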

It is kind of alarming that neural network LLMs may be enacting such a mechanism in an extremely inefficient way. And that 1 GPU would actually do.

Anyway I can’t help these people, they are beyond help.

I don’t see how this would generalise across modalities. Aren’t there an arbitrary number of attributes a subject can have? One could easily get stuck with the frame problem.

Science does indeed rule. I don’t really favour introspection without playing around modeling with a few GB of language samples to actually test out part of the theory. There is more to what I mentioned than a pure theoretical hypothesis because part of the way the majority of well known languages are structured show up with the same temporal patterning effect (in results, not a hypothesis). Stopped the research work because life got in the way and hoping to restart again.

Cortical columns in the way that HTM is coded and works are a type of memory fragment approach when looking at the winner takes all paths. How those paths are achieved as an encoding is possibly more complex than needed because biologically we approach the problem from a connection first (bio) and not a connection last (digital) approach. What I mean by that is we start off with a brain wired with many plausible paths because we can’t biologically wire the paths retrospectively. Connection last is where we just create connections only when required and is a concept of the approach in the original posting in this thread, which is interesting but missing some obvious aspects.

The spatial relationship aspect of cortical columns also keeps on calling in the background to upset everything and draws the columns back into a multi dimensional node rather than just a simple concept node. This is something that weighed towards pausing my prior research as well.

Just trying to create some different ideas because I’m quite convinced that we are missing what will typically turn out to be hugely obvious in retrospect. I might not have the eureka moment, I just hope someone does while I’m still alive to see it.

Not trying to over think this, but a mouse knows perfectly well how to recognise cat, fox, cheese and water without putting names to them. Language is a layer on top that conveys little about how basic animal intelligence works.

IMO the key higher brain activity is the ability to observe, predict and react to the real world by constructing models. A mouse sees a cat and cheese and can predict and react. We don’t know how they do that, but we should try to find out.

The best candidate for these functions is the cortical column, and the repetitive structure suggests a few core algorithms, and a common data structure, like parallel computing.

SDM offers the SDR, which is a good candidate for a data structure. Thousand Brains offers a number of features that could support models and parallel processing.
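For anyone who has not played with SDRs as a data structure, here is a minimal sketch that treats an SDR as the set of its active bit indices and uses overlap as the match score. The sizes (2048 bits, 40 active) and the helper names are assumptions chosen for illustration, not a reference implementation from any HTM library.

```python
import random

def random_sdr(n_bits, n_active, rng):
    """A sparse distributed representation, stored as the set of active bit indices."""
    return frozenset(rng.sample(range(n_bits), n_active))

def overlap(a, b):
    """Number of active bits that two SDRs share."""
    return len(a & b)

def add_noise(sdr, n_bits, n_move, rng):
    """Switch off n_move active bits and switch on n_move previously inactive ones."""
    dropped = set(rng.sample(sorted(sdr), n_move))
    inactive = [i for i in range(n_bits) if i not in sdr]
    added = set(rng.sample(inactive, n_move))
    return frozenset((sdr - dropped) | added)

rng = random.Random(0)
cat = random_sdr(2048, 40, rng)
noisy_cat = add_noise(cat, 2048, 10, rng)  # a quarter of the active bits moved
other = random_sdr(2048, 40, rng)          # an unrelated pattern

score_noisy = overlap(cat, noisy_cat)
score_other = overlap(cat, other)
```

The noisy copy still shares 30 of its 40 active bits with the original, while two unrelated random patterns at this sparsity share only about one bit on average, which is what makes overlap usable as a noise-tolerant match.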

For now, this is where I would be focusing effort.

No one uses handmade features anymore like SIFT. Maybe there is a place for them.

Looking at a paper about Gaussian noise I noticed talk about higher order correlations.

If you multiply together 2, 3, 4… random projections, that would ‘bring out’ the manifold the data is located on in a sparse way.

The product of multiple random variables from the Gaussian distribution has a spiky distribution.
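A quick way to check the "spiky" claim is to measure excess kurtosis, which is 0 for a Gaussian and grows as a distribution gets a sharper peak and heavier tails. The sketch below uses independent standard normal draws as a stand-in for random projections, which is a simplification since projections of the same data are generally correlated; the function names are my own.

```python
import random

def product_of_gaussians(k, n_samples, rng):
    """Draw n_samples values, each the product of k independent N(0, 1) variables."""
    out = []
    for _ in range(n_samples):
        p = 1.0
        for _ in range(k):
            p *= rng.gauss(0.0, 1.0)
        out.append(p)
    return out

def excess_kurtosis(samples):
    """Fourth central moment over squared variance, minus 3 (the Gaussian value)."""
    n = len(samples)
    mean = sum(samples) / n
    m2 = sum((s - mean) ** 2 for s in samples) / n
    m4 = sum((s - mean) ** 4 for s in samples) / n
    return m4 / (m2 * m2) - 3.0

rng = random.Random(0)
k1 = excess_kurtosis(product_of_gaussians(1, 20000, rng))  # a plain Gaussian
k2 = excess_kurtosis(product_of_gaussians(2, 20000, rng))  # product of two
```

For independent standard normals the theoretical excess kurtosis of a product of k terms is 3^k - 3, so 0 for a single Gaussian and 6 for a product of two. Sample estimates get noisy quickly for larger k because the tails are so heavy.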

Will I code that, won’t I code that? I don’t know. I do have in mind human designed feature detection as an input to an extreme learning machine.

However what I find with any kind of neural network or associative memory is if you take away information about the input you get less good results.

Nearly always it seems you are better off giving the neural network the raw input, rather than any heavily processed input.

I might leave the topic on the back burner or I might look at it.