Maybe you don’t have to be so detailed in these simulations. The agent (to start with) only needs input in the form of semantically encoded representations of the environment and some semantically encoded behaviors to learn how to manipulate the environment. To start out, so that it’s simple, the environment can be fully observable.

My theory is - constrain the environment and interface as much as possible to be simple and generic. We can think of the behaviors available to the agent to be ‘movement through the space of all possible configurations of the fully observable environment.’ If we constrain the environment to fit that mold, and therefore the interface to fit that mold, we can quickly iterate over SUI-mind-structures to find the ones that naturally learn to control the environment.

Once we find a generic structure that manages all types of fully observable environments we can then move to partially observable environments and grow minds that have added capabilities for layers of abstraction and inference. Hopefully that ends up meaning we must only refine the SUI model we have to allow it to form minds of many more layers, rather than find an entirely new SUI structure.

I have started a project to express this framework in code. Once it is good enough, I’ll share it.

A word about rewards. I don’t actually think rewards are necessary to worry about, at least, not in the beginning. (Can you tell I love dropping requirements? I want to reduce complexity.)

The framework I have in mind would allow the agent to explore the environment at random, just as a newborn baby flings their arms about at random, and by so doing, by feeling what occurs and learning what behaviors have been performed, begins to learn how to control them.

The SUI should at least naturally build a mind that associates behaviors with similar and consistent input patterns. (If we can’t get a structure that produces a brain that does that, then this idea is too immature and the state space isn’t manually constrained enough).

After randomly exploring the environment for some short period of time we (or the deep learning supervisor) should be able to give it a task. Now, what does it mean to give it a task? It means implanting an idea of the desired input representations at the top of its hierarchy; it means activating a pattern of neurons across its highest layer that we would like it to produce.

The hierarchical-sensorimotor nature of the SUI-produced brain should cascade that pattern down the layers of the mind producing predictions of seeing that pattern in the input data. These predictions necessarily imply seeing the learned behavior that would bring that input pattern about. In other words, communicating goals to the agent is merely a matter of manipulating the top of the hierarchy.

And the only reason we’d do this is to test its knowledge and ability. This is how we gauge how well it knows the environment, and therefore how we score it and know which structures ought to live on.

This means the supervising narrow intelligence must watch the brain closely, identify the top layers, and associate the patterns manifested in them with particular locations in the environment space. Only then can it choose locations the agent has been to before and test the agent’s ability to find its way back to those locations again. (There may actually be an easier way than this, one where all you must give it is the actual sensory input patterns while the network is in a special state like a dream, but I’ll talk about that later when I think I’ve figured it out.)
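Here is a minimal sketch of that scoring idea, under heavy assumptions. The "mind" is swapped for a plain lookup table of observed transitions plus a breadth-first search, which is not a hierarchical network at all; it only illustrates "explore at random, then test whether the agent can get back to places it has been". The ring environment, `explore`, `plan` and `score` are all hypothetical names.

```python
import random
from collections import deque

SIZE = 6              # hypothetical ring of 6 environment configurations
BEHAVIORS = (-1, +1)  # step backward or forward around the ring


def env_step(state, behavior):
    """Environment simulator: apply a behavior, return the new state."""
    return (state + behavior) % SIZE


def explore(steps, seed=0):
    """Random exploration that records which behavior led where."""
    rng = random.Random(seed)
    model, visited, state = {}, {0}, 0
    for _ in range(steps):
        behavior = rng.choice(BEHAVIORS)
        nxt = env_step(state, behavior)
        model[(state, behavior)] = nxt  # learned association
        visited.add(nxt)
        state = nxt
    return model, visited, state


def plan(model, start, goal):
    """Breadth-first search over learned transitions only."""
    queue, seen = deque([(start, [])]), {start}
    while queue:
        state, path = queue.popleft()
        if state == goal:
            return path
        for behavior in BEHAVIORS:
            nxt = model.get((state, behavior))
            if nxt is not None and nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [behavior]))
    return None  # goal not reachable with what was learned


def score(model, visited, start):
    """Fraction of previously visited states the agent can return to."""
    reached = 0
    for goal in visited:
        path = plan(model, start, goal)
        if path is None:
            continue
        state = start
        for behavior in path:
            state = env_step(state, behavior)
        reached += state == goal
    return reached / len(visited)


model, visited, state = explore(steps=200)
result = score(model, visited, state)
```

The supervisor would keep the structures with the highest `result`. Nothing here addresses the hard part, which is reading goal locations out of the top layer of a real network.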

In this way, I believe that engineering rewards, somehow integrating reward networks into the mind of the SUI-generated brain, and managing action selection are all unnecessary concerns. Especially since, just as in real life, each possible action, from the environment’s point of view, lies along a semantically encoded spectrum.

This seems to me to be a necessary initial capability. There has to be something to drive the system to choose one action over another, or to take any action at all for that matter.

That said, it probably wouldn’t be necessary when creating the initial building blocks (you could hard code a lot of the functions when testing a given component outside of a fully connected “critter”).

BTW, it isn’t overly complicated to create something basic. I have a test which combines encoded emotion as part of an “object” representation. Inputting a given emotional state will predict matching objects. I’ll post a demo of this. I think it could be expanded into a basic system where emotional flavoring of models can be matched with needs for action selection.

Somehow its reward function, then, needs to incentivize the building of a holistic world view. It should find a balance somewhere between curiosity (novel input) and boredom (perfectly predictable input). It just seems to me to be something that should naturally flow out of the mind structure itself.

I have a half-baked idea for a reward function for curiosity. I will admit it’s kind of sketchy but I imagine that the agent will have some sort of mental space. You could have some need to fill or extend this space with items orthogonal to the current contents - a desire to learn something new.

When I say orthogonal, I am referring to the 2D nature of how memory is mapped in the cortex. Along whatever axes a particular map holds, areas that are not stimulated by a new memory (surprise) will want stimulation.

I have stated elsewhere that perception is active recall and that you learn what is surprising - the reward is proportional to both the amount of surprise and the amount of memory engaged in the recall.
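As a rough sketch of "proportional to both", one could simply multiply the two quantities. Everything below is an assumption made for illustration: representations are sets of active bit indices, surprise is the fraction of the actual input that was not predicted, and engagement is the fraction of stored memory items touched by the recall. The function name and the constant `k` are hypothetical.

```python
def curiosity_reward(predicted, actual, recalled, memory_size, k=1.0):
    """Reward = k * surprise * engagement (an assumed form, not a known rule).

    predicted, actual: sets of active bit indices.
    recalled: set of memory items engaged during recall.
    memory_size: total number of stored memory items.
    """
    if not actual or memory_size == 0:
        return 0.0
    surprise = len(actual - predicted) / len(actual)
    engagement = len(recalled) / memory_size
    return k * surprise * engagement


bored = curiosity_reward({1, 2}, {1, 2}, {0}, memory_size=4)        # fully predicted
novel = curiosity_reward(set(), {1, 2}, {0, 1}, memory_size=4)      # unpredicted, half of memory engaged
unrelated = curiosity_reward(set(), {1, 2}, set(), memory_size=4)   # unpredicted, no memory engaged
```

With a product, perfectly predicted input earns nothing, and so does input that engages no memory at all, so the reward peaks somewhere between boredom and pure noise.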

The critter should want to examine its environment and learn new things about it, expanding its search space. If you balance that with programmed forgetting you should be able to establish some fixed territory.

I did say this was kind of sketchy?

This could be in some way tied to attention. For example, attention could be drawn not only to the most anomalous areas, but also to areas of the active mental model which have not been enforced by sensory input for a while.
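A toy version of that, assuming each region of the mental model carries an anomaly score and a timestamp of when sensory input last confirmed it. The additive form, the `staleness_weight` value and the region names are all made up for illustration.

```python
def attention_scores(anomaly, last_confirmed, now, staleness_weight=0.01):
    """Score each region by anomaly plus time since it was last confirmed."""
    return {
        region: anomaly[region] + staleness_weight * (now - last_confirmed[region])
        for region in anomaly
    }


anomaly = {"a": 0.9, "b": 0.1, "c": 0.2}      # hypothetical anomaly per region
last_confirmed = {"a": 99, "b": 98, "c": 10}  # time step of last sensory confirmation
scores = attention_scores(anomaly, last_confirmed, now=100)
focus = max(scores, key=scores.get)
```

Here region "c" wins attention even though "a" is more anomalous, because "c" has gone unconfirmed for 90 steps.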

I like the analogy and the specific questions.

My thought, in relation to your analogy and the OP’s idea, is this: imagine a trained neural network with acceptable performance, then forget/remove the structure of the neural network. This can also mean we are left with a neural network without hidden layers; only the input and output layers are left. Now the big question is: which structure provides the best performance given the inputs and outputs? The problem can first be constrained to a smaller set of rules, such as how neurons connect to each other and how they interact, to simplify the problem and make it tractable.

The answer to the big question will be the solution, which hopefully can be different SUI structures. As we can imagine, the problem space can be huge. Note that this is just an example for a NN; one can mix different structural rules, such as those in HTM.

edit: Forgot to mention that weights are also left besides the input and output layers.

Are you going to start with some kind of vision as a sensor part of the agent? Is the idea that a cortical-like version of the vision will evolve by itself, or are you going to use, let’s say, a CNN?

Well, whatever you do, whatever scheme you think up, you better make it O(nlog(n)) or better.

With O(nlog(n)) everything can run nice and cool, and very fast.

With O(n^2) (i.e. current neural networks) everything will run boiling hot and pull megawatts of electrical power.
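To put rough numbers on the gap, here is a quick count, assuming n is the width of a layer and that "O(n^2)" refers to a fully connected n-to-n layer needing n * n multiply-adds. Which n log(n) scheme would replace it is not specified here, so `nlogn_ops` is just the bare formula with a base-2 log.

```python
import math


def dense_ops(n):
    """Multiply-adds for a fully connected n-to-n layer."""
    return n * n


def nlogn_ops(n):
    """Operation count for a hypothetical n*log2(n) scheme."""
    return n * math.log2(n)


# Ratio of dense cost to n*log2(n) cost at two layer widths.
ratios = {n: dense_ops(n) / nlogn_ops(n) for n in (1024, 1048576)}
```

At n = 1024 the dense layer costs about 100 times more, and the gap keeps widening as n grows.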

Well idk. Here’s what I do know, we want to constrain the search space as much as possible, so that we are truly isolating the thing we are searching for: the SUI structure.

I think that means the environment must come already semantically encoded. We can’t be evolving ways to encode the environment’s state representation at the same time, we aren’t as good, or as parallel, as nature.

So the framework I have in mind is a step-wise system (as in, time occurs in steps) such that in each time step the agent being trained and tested will send its output behavioral representation to the environment simulator, which will take that representation, determine its effect on the environment, and then return a new semantically encoded representation of the environment’s new state to the agent. Then the next time step occurs, in which the agent decides what to do in response to the environment’s current state.
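A minimal sketch of that loop, just to pin down the interface. `RingEnvironment`, `RandomAgent` and `run` are hypothetical names, the one-hot vector is only a stand-in for a real semantic encoding, and the random agent is a placeholder for whatever the SUI structure would grow.

```python
import random
from typing import List, Tuple


class RingEnvironment:
    """Hypothetical fully observable environment: one position on a ring."""

    def __init__(self, size: int = 8) -> None:
        self.size = size
        self.position = 0

    def encode(self) -> List[int]:
        # One-hot vector standing in for a semantically encoded state.
        return [1 if i == self.position else 0 for i in range(self.size)]

    def step(self, behavior: int) -> List[int]:
        # Apply the behavior (-1, 0 or +1), then return the new encoded state.
        self.position = (self.position + behavior) % self.size
        return self.encode()


class RandomAgent:
    """Placeholder agent that ignores the state and acts at random."""

    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)

    def act(self, state: List[int]) -> int:
        return self.rng.choice([-1, 0, 1])


def run(env: RingEnvironment, agent: RandomAgent, steps: int) -> List[Tuple[int, List[int]]]:
    state = env.encode()
    history = []
    for _ in range(steps):
        behavior = agent.act(state)  # agent sends its behavior to the simulator
        state = env.step(behavior)   # simulator returns the new encoded state
        history.append((behavior, state))
    return history


history = run(RingEnvironment(), RandomAgent(), steps=20)
```

Because the agent only ever sees `encode()` output and only ever emits a behavior, different candidate structures could be swapped in behind `act` without touching the environment.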

That way we don’t have to concern ourselves with the need to give them vision, it’s just part of the simulation. “Organs included, some assembly required.”

Such an approach looks quite suspicious to me. There isn’t semantics outside the representation inside an agent. If you construct your own semantics and feed it to the agent, how do you expect it will build its own model of the world?

The result of processing any sensory modality is much more than just a set of labels (or whatever you see as semantic representation). Especially if we are talking about vision, which is up to half of all processing in the neocortex.

If you are thinking about getting cortex-like processing as the result, it’s useful to think about it (or any other modality, but it’s cheating if the approach doesn’t work for vision) as the primary thing the agent has to build.

Part of what the SUI must be capable of is distilling semantics from the world it finds itself in. This requires a lot of SUI’s working together to bring separate inputs together to form associations, based on how frequently they occur together.

When I hear or read the word “cat”, speak it, hear a meow, browse photos of kitties, etc., these are all very different inputs, but my cortex has come up with semantically relevant encodings that roll up as part of a higher level concept.

The videos that Cortical IO has made about their “semantic folding” process give a good idea of the semantic encoding goal that the system should be trying to accomplish (though obviously a network of SUI’s would need to use a very different/more general algorithm to achieve that goal).

Actually I remember us discussing some time back about universal encoding and how semantic folding might be applied to that problem. Those experiments might be worth digging up again.

It’s important to distinguish between pointing at something and its semantic representation. The latter is a structural-functional model, which is the basis of meaning, not just a mapping of labels.

It’s been understood pretty well for about 2400 years but successfully ignored by most of the DL community. I hope not in the HTM community.

I don’t quite understand what you mean by this, can you clarify?

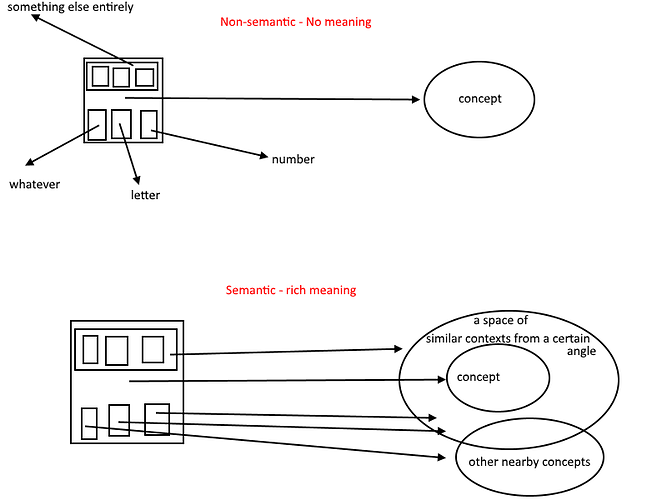

You mean to say pointing at something is a reference, it’s just addressing and has no meaning?

But a semantic name of something has meaning because, even though it’s merely a reference to the concept as well, each sub-part of that reference also points to nearby concepts?

I suppose I’m working off the assumption that all representations are mere pointers or addressing of information…

Here’s the image that comes to mind when I try to make sense of your statement. Is this right?

I don’t quite understand your point. In HTM, what I am referring to above as a “concept”, in its most rudimentary form, is a collection of active bits. Most/all of those bits are also used in other concepts in varying quantities, depending on the overlap in shared semantic meaning.

When I am speaking the word “cat”, presumably some of the cells in my brain that become active during that process will also be active when I see a picture of a cat. Other cells that become active during that process will presumably not become active when I see a picture of a cat, but maybe will become active when I speak the word “bat”.
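To illustrate with made-up numbers: treat each representation as a set of active cell indices and measure shared semantics as the size of the intersection. The indices below are invented purely for the example, and `overlap` is a hypothetical helper.

```python
def overlap(a, b):
    """Number of active cells two representations have in common."""
    return len(a & b)


# Hypothetical active cells for three experiences.
spoken_cat = {1, 4, 9, 15, 22, 30}
picture_cat = {4, 9, 15, 41, 57, 63}
spoken_bat = {1, 22, 30, 48, 52, 70}

cat_meaning = overlap(spoken_cat, picture_cat)   # cells shared via "cat-ness"
speech_sound = overlap(spoken_cat, spoken_bat)   # cells shared via similar speech
unrelated = overlap(picture_cat, spoken_bat)     # nothing in common
```

In this toy case cells 4, 9 and 15 carry what speaking "cat" shares with seeing a cat, while cells 1, 22 and 30 carry what it shares with speaking "bat".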

WRT language, this paper is quite relevant. It points to sensory motor mechanisms being at the core of forming language semantics. If your point is that a word does not share semantics in its representation with the representation for the thing that it labels, this would seem to counter that argument.

A UCLA and Caltech study found evidence of different cells that fire in response to particular people, such as Bill Clinton or Jennifer Aniston. A neuron for Halle Berry, for example, might respond “to the concept, the abstract entity, of Halle Berry”, and would fire not only for images of Halle Berry, but also to the actual name “Halle Berry”.

BTW, I should point out that I am not making the case for “grandmother cells” here. The representation for an abstract concept of “grandmother” will consist of many active cells in many different contexts. Most of the cells in such a representation will not only be active for the concept of “grandmother”. The majority of the cells will also activate as part of representations for other abstract concepts which share semantics, such as “elders”, “Christmas cookies”, “nursing home”, etc. depending on the specific experiences which built the “grandmother” concept.

There will be a diminishing percentage of cells in the representation which have receptive fields that strongly match the one specific concept but weakly match any other concepts. You could consider only those few that breach some minimum threshold to be “true” grandmother cells (i.e. they almost never activate as part of any other representation). However, killing off those cells would not result in you forgetting about Grandma.

It’s just not enough to have external links to some labels. You need to organize all components somehow inside the meaning, and it won’t be just links either. Because there are more important and less important components, optional and mandatory, reciprocal or directionally related, the components exist in different cognitive frames, etc. Look, for example, at some examples from cognitive linguistics to get some ideas about it.

Right, but HTM doesn’t say much about what these bits are responsible for. That’s where you have to look at it from the perspective of cognitive science if you are going to build an agent with AGI abilities.

What you are talking about here is a statistical representation of a phenomenon. It’s helpful to some extent, which has been demonstrated by the DL community and by cortical.io on the HTM side. However, it doesn’t catch the meaning of the phenomenon, which needs a representation of everything you have in memory about your grandma as a kind of schema which supports causality, constraints and other parts of intuitive physics (in the broad meaning of it).

You mean temporal information? Yes, that can be compressed into spatial encoding and then unfolded as needed.

Why only temporal?