Abstract

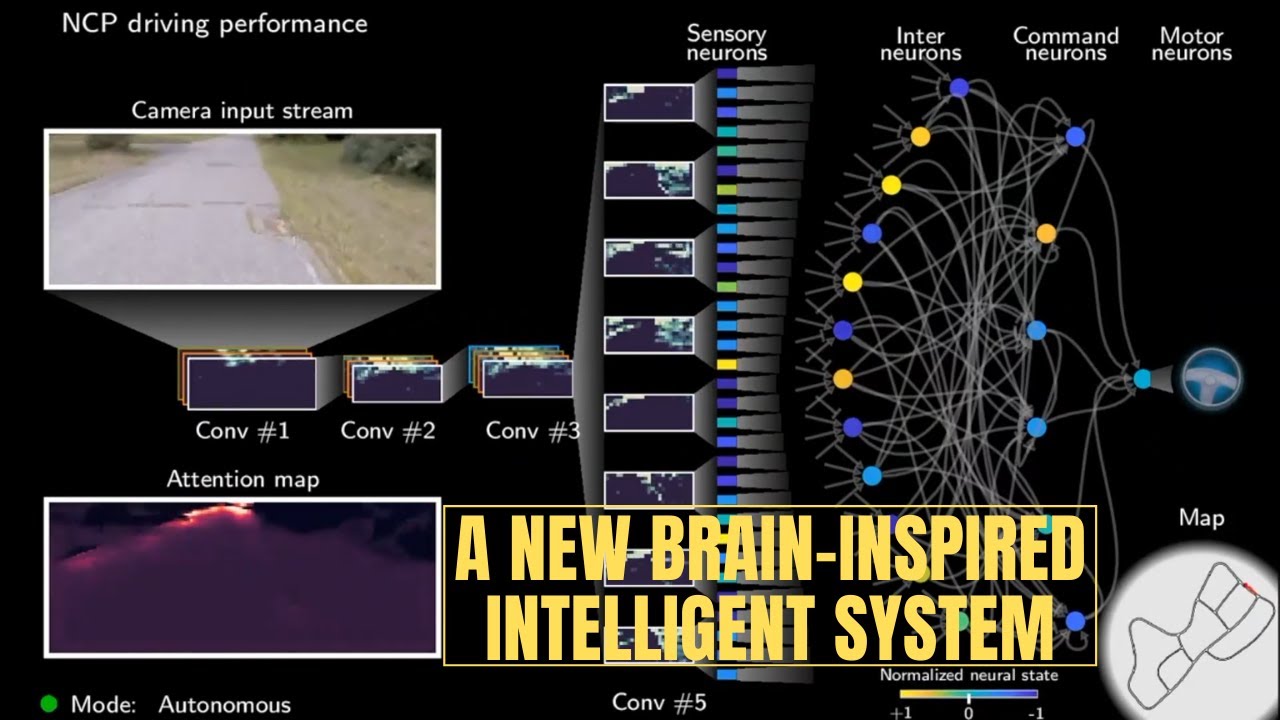

A central goal of artificial intelligence in high-stakes decision-making applications is to design a single algorithm that simultaneously expresses generalizability by learning coherent representations of their world and interpretable explanations of its dynamics. Here, we combine brain-inspired neural computation principles and scalable deep learning architectures to design compact neural controllers for task-specific compartments of a full-stack autonomous vehicle control system. We discover that a single algorithm with 19 control neurons, connecting 32 encapsulated input features to outputs by 253 synapses, learns to map high-dimensional inputs into steering commands. This system shows superior generalizability, interpretability and robustness compared with orders-of-magnitude larger black-box learning systems. The obtained neural agents enable high-fidelity autonomy for task-specific parts of a complex autonomous system.
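To make the "few control neurons, sparse synapses" idea more concrete, here is a minimal Python sketch under stated assumptions. It is not the authors' code. It builds a hypothetical sparse, layered wiring (32 sensory inputs → inter → command → motor, plus recurrent command synapses) and applies one liquid time-constant (LTC) style neuron update with a fused semi-implicit Euler step. The 12/6/1 split of the 19 neurons, the fan-out values, the seed, and the names `build_wiring` and `ltc_step` are illustrative assumptions. The sketch does not reproduce the paper's exact 253-synapse wiring or any trained parameters.

```python
import math
import random

# Hypothetical split of the 19 control neurons (an assumption, not from the post):
# 12 inter, 6 command and 1 motor neuron, fed by 32 sensory input features.
N_SENSORY, N_INTER, N_COMMAND, N_MOTOR = 32, 12, 6, 1


def build_wiring(fanout_sensory=4, fanout_inter=3, recurrent_command=2, seed=0):
    """Return a set of (pre, post) synapses with a sparse, layered structure.

    Sensory inputs are labelled ("s", i); neurons are labelled ("n", j) with
    inter neurons first, then command neurons, then the motor neuron.
    """
    rng = random.Random(seed)
    inter = list(range(N_INTER))
    command = list(range(N_INTER, N_INTER + N_COMMAND))
    motor = list(range(N_INTER + N_COMMAND, N_INTER + N_COMMAND + N_MOTOR))
    synapses = set()
    for i in range(N_SENSORY):  # each input feeds a few inter neurons
        for post in rng.sample(inter, fanout_sensory):
            synapses.add((("s", i), ("n", post)))
    for pre in inter:  # each inter neuron feeds a few command neurons
        for post in rng.sample(command, fanout_inter):
            synapses.add((("n", pre), ("n", post)))
    for pre in command:  # sparse recurrence among command neurons
        for post in rng.sample(command, recurrent_command):
            synapses.add((("n", pre), ("n", post)))
        for post in motor:  # every command neuron drives the motor (steering) neuron
            synapses.add((("n", pre), ("n", post)))
    return synapses


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, inputs, weights, tau, A, dt):
    """One fused semi-implicit Euler step of a single LTC-style neuron:

        x_next = (x + dt * f * A) / (1 + dt * (1/tau + f)),  f = sigmoid(w . inputs)

    Simplification (assumption): f depends only on the inputs, not on x.
    """
    f = sigmoid(sum(w * u for w, u in zip(weights, inputs)))
    return (x + dt * f * A) / (1.0 + dt * (1.0 / tau + f))
```

With these defaults the wiring has 32·4 + 12·3 + 6·2 + 6 = 182 synapses. Under a constant input, repeated `ltc_step` calls settle at x* = f·A / (1/τ + f), which is the fixed point of the update above; the input-dependent term f is what makes the effective time constant "liquid".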

There is an article on Neural Circuit Policies and how an AI agent can learn the cause-and-effect basis of a task here:

https://techxplore.com/news/2021-10-ai-agent-cause-and-effect-basis-task.html

https://arxiv.org/abs/1803.08554

I’ll read up on it a bit. Self-modifying code was a thing in the 1980s before pipelined CPUs; you can also make self-modifying neural networks.
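One common way to read "self-modifying neural network" is a layer whose weights are rewritten by its own activity while it runs. A minimal Python sketch, assuming a decaying Hebbian "fast weights" rule (a swapped-in illustration, not something from the linked papers); the function name `hebbian_forward` and the `eta`/`decay` values are hypothetical:

```python
def hebbian_forward(x, W, eta=0.1, decay=0.9):
    """Compute y = W x, then rewrite W in place from the layer's own activity.

    Hypothetical rule: W[i][j] <- decay * W[i][j] + eta * y[i] * x[j]
    """
    y = [sum(w * xj for w, xj in zip(row, x)) for row in W]
    for i, yi in enumerate(y):
        for j, xj in enumerate(x):
            W[i][j] = decay * W[i][j] + eta * yi * xj
    return y


W = [[1.0, 0.0], [0.0, 1.0]]
first = hebbian_forward([1.0, 2.0], W)   # uses the original weights
second = hebbian_forward([1.0, 2.0], W)  # same input, but W has changed
```

Feeding the same input twice gives different outputs, because the first call rewrote `W`; that is the neural analogue of code that patches its own instructions.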