Thanks for sharing this. Very interesting and relevant learning on this topic.

Thanks for the kind feedback everyone. Currently crunching numbers to come up with biologically plausible mappings from retina to LGN, and from LGN to V1. Biology is messy… and no two accounts agree on size, shape, or even function.

What’s the problem, you ask?

Here is the V1 retinotopic map (i.e. local associations from visual field to cortex) of a macaque monkey:

Here is the same kind of map for a human:

Scaling aside, I’m settling on an LGN “sheet” with a topology similar to V1’s, so that the distortion seen above, which gives the fovea huge coverage compared to the periphery, already occurs at the LGN.

I’m settling on a count of ~2M “relay cells” (will have to await further neuro theories to see if they are more than “relay”) per LGN on one hemisphere.

There are 6 main “layers” in the LGN. The four dorsal layers hold Parvocellular (P) cells, which are assumed to relay signals from so-called “midget” RGCs (tiny sensory area, sustained response). The two ventral layers hold Magnocellular (M) cells, which are assumed to relay signals from “parasol” RGCs (large sensory area, transient response). There are also 6 intercalated layers between them, composed of Koniocellular (K) cells… which were found relatively recently and are poorly understood. But I’ll try to model those as well.

Of these 2 million cells, some sources claim ~80% P, ~10% M and ~10% K. So I’ll settle on this.

Since there are two layers of M, one for each eye to the LGN (each LGN operates on contralateral visual hemifield), I need to work with it as a base, setting each “point” in visual space to two M cells. Thus according to P/M ratios, each point is also 16 P cells. Given those values, I believe P sheet will be distinct in scale from M sheet. Let’s say by a scale factor of 2. So per 1 point on the M topology, we’ll have 2x2 points on the P topology. So there will be 4 cells per point on the P topology.

So how many points in total in the P sheet? One fourth of 80% of 2 million, i.e. 400 thousand.

I think I have by now managed to find a mapping from retina to such a sheet which makes sense, still using a hex lattice as described above. I’m able to fit a similar shape of about ~400,000 points, with similar retinotopic properties, in a “square” (in terms of indices) hex layout of 655*655 = 429,025 positions, thus achieving 93% coverage of the regular grid with meaningful data.
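As a sanity check, the bookkeeping above can be replayed in a few lines of Python. This is only a sketch of the arithmetic: the constant names are made up for illustration, and the 80/10/10 split and the 2x2 scale factor are the working assumptions stated in this post, not measured values.

```python
# Working assumptions taken from the post (not measured values).
TOTAL_RELAY_CELLS = 2_000_000   # relay cells per LGN, one hemisphere
P_PERCENT, M_PERCENT = 80, 10   # assumed share of P and M cells
M_CELLS_PER_POINT = 2           # one M cell per eye at each point
P_SCALE = 2                     # P sheet assumed 2x finer than M sheet per axis
GRID_SIDE = 655                 # side of the "square" hex layout, in indices

p_cells = TOTAL_RELAY_CELLS * P_PERCENT // 100
m_cells = TOTAL_RELAY_CELLS * M_PERCENT // 100

# Points on the M sheet, then P cells falling on each M point.
m_points = m_cells // M_CELLS_PER_POINT
p_cells_per_m_point = p_cells // m_points

# Each M point covers P_SCALE x P_SCALE points on the P sheet.
p_cells_per_p_point = p_cells_per_m_point // (P_SCALE * P_SCALE)
p_points = p_cells // p_cells_per_p_point

# Fraction of the regular index grid that holds meaningful data.
grid_positions = GRID_SIDE * GRID_SIDE
coverage = p_points / grid_positions

print(f"P cells per M point: {p_cells_per_m_point}")
print(f"P cells per P point: {p_cells_per_p_point}")
print(f"P points: {p_points:,} of {grid_positions:,} grid positions")
print(f"coverage: {coverage:.1%}")
```

Changing the P/M split or the scale factor in the constants shows directly how the required grid size would move.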

I find this 655*655 value almost surprising. This is not a staggering resolution compared to most “images” we routinely work with on a computer. This is a clue that high-res center of fovea and integration of saccades are paramount in our visual perception.

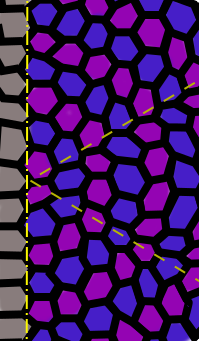

Next hairy topic to tackle is LGN to V1, with axonal “setup” of ocular dominance, resulting in a zebra pattern. Here’s what it should look like:

Top are ocular dominance stripes (one eye black, the other white) as seen on macaque V1, bottom same thing on a human one.

The black area at the periphery is due to the fact that a single eye manages to reach up to the full field of view on each hemifield (this is called the monocular area). The white area in the middle is the location of the “blind spot” associated with the optic nerve (but I don’t think I’ll model this last one).

I have ideas to iteratively simulate this organization (once at startup) from the CO “blobs”, which in my opinion seem to somehow hex-grid (again !), right in the middle of those stripes.

Will try to come up with figures and more home-made diagrams.

Gary,

This book is really great.

Many thanks.

Some of the visualizations I promised…

There are some accounts of different P-to-M ratios depending on eccentricity, so that would contradict my presented scheme above… although I can’t get reliable figures, everyone had a different opinion about that some years ago… now nobody ever cares. At least for papers which I can find on the good side of paywalls.

Never mind, let’s stick to it.

First, there’s my intended mapping, looking like the retinal cone layout around the foveola (the very tiny part of the fovea with the highest cone density, ~50 per 100µm):

This is in fact a regular hex lattice which has been reprojected to concentric circles and salted with some random variation:

A hemifield is mapped here (grey tiles are part of the other hemifield; the vertical yellow line splits the two). You can see on this picture that, despite their irregular look, the number of tiles per concentric circle grows at a steady rate: the first “circle” (pinkish purple) has two tiles, the second one (bluish purple) five, then 8, then 11, then 14, then 17… increasing three by three. Dashed yellow lines split the “pie” into 60° parts, along which the regular hidden “hex grid” is most perceivable.

In the foveola there is plausibly, roughly, one OFF midget and one ON midget RGC per cone, so both get associated with one point in the P-sheet topology (and the other eye provides the remaining two). So those tiles will get mapped as such:

Foveola spans to roughly 0°35’ (35 arcminutes) eccentricity, that is about 0.175mm in distance. So with 88 of these circles at around 2µm per cone, we’ve roughly reached that value, which is the limit of the foveola on each side, after which the tiling will start to get sparser.

So I set that value of 87 steps (spanning 263 points) as one ‘chord’ to my regular hex lattice, and I get to this :

Each line of vertical indices after the foveola (I don’t want to use the word ‘column’ here, to avoid confusing terminology) is spaced from its neighbors in the eye following a geometric progression (in eccentricity), after a few smooth-matching steps. That’s why those values grow so rapidly to 90° after some point, which is consistent with what our brains seem to be doing, and the overall potatoish shape is not much weirder than some of the messy pieces of V1 we can find pictures of. We’ll get a steady increase in the number of RGCs per such “line” up to about 3 degrees, then we’ll reach a plateau, and eventually fall off from 16° onwards.

In total, there will be 11,660 points in the foveola region alone, out of an overall total of still about 400 thousand points (each associated with 4 midget RGCs, give or take weird things and monocularity happening at the periphery).
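The ring counts quoted in this post can be cross-checked with a short Python sketch. The formula `3*k - 1` for the k-th circle is inferred from the listed sequence 2, 5, 8, 11, 14, 17 and is an assumption about the layout, as are the function names; the 88 circles and ~2µm cone spacing are the figures given above.

```python
def tiles_in_ring(k: int) -> int:
    """Tiles in the k-th concentric circle of one hemifield (k starts at 1).

    Assumed from the sequence 2, 5, 8, 11, 14, 17: three more tiles per circle.
    """
    return 3 * k - 1


def tiles_up_to(n: int) -> int:
    """Total tiles in circles 1..n of one hemifield."""
    return sum(tiles_in_ring(k) for k in range(1, n + 1))


RINGS = 88              # circles needed to reach the edge of the foveola
CONE_SPACING_UM = 2.0   # approximate cone spacing, in micrometres

first_rings = [tiles_in_ring(k) for k in range(1, 7)]
outer_ring = tiles_in_ring(RINGS)
total_points = tiles_up_to(RINGS)
radius_mm = RINGS * CONE_SPACING_UM / 1000.0

print("first circles:", first_rings)
print("tiles on outermost circle:", outer_ring)
print("points in foveola region:", total_points)
print(f"foveola radius: {radius_mm:.3f} mm")
```

Under that assumption the outermost circle holds 263 tiles and the running total is 11,660, which matches the figures in the text, and 88 circles at 2µm land at 0.176mm, close to the 0.175mm quoted for the foveola.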

Interesting stuff, folks. The next HTM Hackers’ Hangout is today at 3PM Pacific time. Anyone participating in this thread is welcome to join and discuss these ideas there. You can even share your screen if you want to show graphics. Is anyone interested? @gmirey @Paul_Lamb @Bitking @Gary_Gaulin @SimLeek

Thanks for bringing that topic up on the hangout.

Mark’s expectations of working code seem high ^^ I hope we can deliver.

At any rate, the heavily localized disposition of V1 cells in sensory space has forced us to ponder about topological concerns at length. We’ve been discussing those concerns with @bitking in PM for some time, right from that failed joke which did not quite land where I wished.

So even before writing any code it was clear that there were topological problems to tackle. And now aside from that V1 project, I’m glad that some of what we came up with for topology seems usable enough that people like @Paul_Lamb are starting to toy around with it and see how it fits on the overall scheme.

Some musing - I have been thinking about how your model will learn edge orientations.

Thinking biologically - It occurs to me that at several stages in the formation of each map one organizing principle is the chemical signaling creating “addresses” and gradients in a given map. Sort of a chemical GPS.

At one point in early development the sheets double up and split apart, and when the maps end up wherever they go, some cells in the source map form axons that grow back to the sense of “home” in distant target maps, thus retaining a very strong topological organization.

This is amazing to me - if a cell body was the size of a person, the axon would be the size of a pencil and perhaps a kilometer long; it still finds its way to the right place in the distant map.

The gradients part is a chemical marker that varies from one side of the map to the other. There could be far more than just x&y. Obviously, an x&y signal will form a 2D space. Smaller repeating patterns would be like zebra stripes. Nature is a relentless re-user of mechanisms. Thinking this way, look at the ocular dominance patterns. I can see the same mechanism as being a seed to the local dendrite & synapse growth.

What I am getting to is that some heuristic seeding may take the place of genetic seeding outside of pure learning in the model. I would not view this as “cheating” but just the genetic contributions to learning.

What does that mean?

In a compiler we think of the scope of time: the reading in of the header files, the macro pass, the symbol pass, code generation, linking, loading and initialization, and runtime. Even that can have early and late binding.

All this is different with interpreters. You might think of sleep as the garbage collection of a managed language.

In creating a model we may think of different phases to apply different kinds of learning.

Ocular dominance stripes are indeed a matter of axonal wiring (That’s why it is an initialization phase in the model where axons are pre-wired), while formation of edge detection cells is visual input dependent. Evidence of this comes from lesion studies at birth. If one eye is missing, the LGN axons still have formed stripes of ocular dominance on V1 the regular way, but the cells within the nominal “stripes” of the missing eye would finally twist and rewire their dendrites towards remaining eye input.

As for how axons find their way to precise positions, I don’t know. An analogy could be… hmm… Despite being individually somewhat dumb, ants can still find their way back by following chemical tracks. Maybe the Cell Intelligence link by @Gary_Gaulin (somewhere on the other thread) could answer this.

For the model, when I say I have ideas to do this iteratively, I believe a setup of CO blobs and “edges” between them could serve as attractors for neighboring arbors, with some mutual repulsion between one eye and another, and some form of “too high density” repulsion to get the stuff nicely distributed everywhere.

Yes!

And Q*Bert - 3D:

http://keekerdc.com/2011/03/hexagon-grids-coordinate-systems-and-distance-calculations/

@Gary_Gaulin: for an alternative explanation and software implementation, you can look at

I agree, I should have posted both links. The Red Blob Games website is the one I most needed for my hexagonal math related projects, and ironically I was back again while searching for the Q*Bert link.

It seemed best for me to not provide an overwhelming amount of information. But I could have added a second link, as an additional resource.

The Q*Bert illustration alone shows how easy it is for our mind to perceive a normally flat looking hexagonal geometry as stacked cubes with 3D depth. The 3 coordinates adding up to zero may provide a clue as to what is happening at the neural circuit level. It’s also possible that the two are unrelated. But in this case the math process seemed worth keeping in mind, while experimenting.
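For readers who have not met the “3 coordinates adding up to zero” scheme, here is a minimal Python sketch of hex cube coordinates as commonly described for hex grids. The function names are illustrative and not taken from any particular library, and nothing here is a claim about neural circuits.

```python
from itertools import product

# The six unit steps on a hex grid in cube coordinates; each sums to zero.
CUBE_DIRECTIONS = [(1, -1, 0), (1, 0, -1), (0, 1, -1),
                   (-1, 1, 0), (-1, 0, 1), (0, -1, 1)]


def axial_to_cube(q, r):
    """Convert 2D axial hex coordinates to cube coordinates with x + y + z == 0."""
    return (q, r, -q - r)


def cube_distance(a, b):
    """Number of hex steps between two cells given in cube coordinates."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]), abs(a[2] - b[2]))


def neighbors(cell):
    """The six cells adjacent to `cell`."""
    x, y, z = cell
    return [(x + dx, y + dy, z + dz) for dx, dy, dz in CUBE_DIRECTIONS]


def ring(center, radius):
    """All cells exactly `radius` steps away from `center` (brute-force scan)."""
    cx, cy, cz = center
    cells = []
    for dx, dy in product(range(-radius, radius + 1), repeat=2):
        dz = -dx - dy  # third coordinate is forced by the zero-sum constraint
        if max(abs(dx), abs(dy), abs(dz)) == radius:
            cells.append((cx + dx, cy + dy, cz + dz))
    return cells


origin = axial_to_cube(0, 0)
print(cube_distance(origin, axial_to_cube(2, -1)))
print(len(ring(origin, 3)))
```

The zero-sum constraint is what makes the distance a simple maximum over three axes, and a full ring at radius k always holds 6k cells.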

Glad that you use it; I found it very interesting too. I am trying to find a way to use this lib with the NuPIC API.

Haven’t progressed much in the code architecture yet… still reviewing lots of papers concerning receptor counts and RGC counts as functions of eccentricity[1][2], and trying to imagine something that will make sense for the “rendering” part of the input.

Originally, I envisioned several render targets for an eye, each as your everyday square or rectangular textures. Each would render the incoming image as pixels, at decreasing resolutions. So you’d have one render pass giving very large resolution for the very tiny visual field of inner fovea, then one with almost-same pixel count, but “shooting” at a larger visual field, thus a lower precision, and so on all the way up to full span for the eye.

There are a number of issues with this, though.

- First, the decrease in density of the receptors themselves is… I don’t know how to put it mathematically, but let’s say it decreases at a slower rate than eccentricity grows. So at the end of the day, the texture sizes required to cover a given visual field would still get larger and larger away from the fovea, and they get big quite fast. (Note that I’m speaking of receptors here. At the level of the retinal ganglion cells taking input from them, we’d be back to a more manageable, almost 1-for-1 decrease with eccentricity on the log-log scale.)

- Second, the planar projection does not do justice to the far periphery, which is projected onto the eye sphere, so a given spacing in eccentricity does not translate to a linear increase in spacing across meridians.

- Third, my own neurons are getting tired, and I can’t seem to tell on which side I should apply the required sampling-rate equations to avoid Moiré effects when considering the receptors as “samplers”.

First and second issues would be the “progress report” part of the post, since I guess I will be able to hammer at them. I’m currently planning to have some distortion applied at the raster level already (much like the barrel transform idea of @SimLeek in the early days of the topic), dividing the field of view in quadrants (or even 120° parts) for eccentricities up to 24°, then starting kinda cylindrical or conic projections on an outer “band” texture for far periphery.

For the third issue, this is more of a cry for help : I have, at receptor level, already two sampling operations happening :

- One is the sampling of the continuous “external world” as rasterized pixels, which is a quite fixed operation on GPU.

- Second is the sampling of the rasterized pixels themselves, which ought to be a simulation of actual receptors receiving light input (approximately disposed on a hex lattice with maybe random perturbation).

And I was thinking, since there is a potentially problematic change of lattice here… with possible interference patterns showing if not cautious, that the input texture would need to be at higher resolution than the receptors, to overcome those effects… and that seemed obvious to me, until I started doubting it was the other way around…

So if anyone here is used to the notion of Nyquist frequencies: do I in fact have to decrease the resolution of the rasterized image for it to be correctly sampled by the simulated receptors? Increase it? Leave it the same? Or does it not even matter, as long as we apply an appropriate anti-aliasing filter pass?
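This does not settle the question for an irregular hex lattice, but the textbook sampling rule can at least be illustrated in one dimension. The sketch below assumes uniformly spaced “receptors” and a pure sinusoid as input; the function names and the rate of 10 samples per unit are made up for the example. Detail finer than half the receptor sampling rate folds back as a lower, spurious frequency, which suggests that what matters is low-pass filtering the raster to the receptor Nyquist limit before the receptors sample it, more than the raster resolution itself.

```python
import math


def aliased_frequency(signal_freq, sample_rate):
    """Apparent frequency of a sinusoid after point-sampling at sample_rate."""
    return abs(signal_freq - round(signal_freq / sample_rate) * sample_rate)


def point_sample(signal_freq, sample_rate, n_samples):
    """Sample sin(2*pi*f*t) at t = n / sample_rate, with no pre-filtering."""
    return [math.sin(2 * math.pi * signal_freq * n / sample_rate)
            for n in range(n_samples)]


RECEPTOR_RATE = 10.0  # hypothetical receptors per unit length; Nyquist limit is 5

for freq in (3.0, 7.0, 9.0):
    print(f"input {freq} cycles/unit -> seen as "
          f"{aliased_frequency(freq, RECEPTOR_RATE)} cycles/unit")

# A 9-cycle pattern is indistinguishable (up to sign) from a 1-cycle pattern
# once it has been point-sampled at 10 samples per unit.
fine = point_sample(9.0, RECEPTOR_RATE, 20)
coarse = point_sample(1.0, RECEPTOR_RATE, 20)
print(all(math.isclose(a, -b, abs_tol=1e-9) for a, b in zip(fine, coarse)))
```

In practice this corresponds to rendering at a resolution at least as fine as the receptors and then applying an anti-aliasing (low-pass) pass, rather than letting the receptors point-sample raw pixels.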

[1] Human photoreceptor topography - a 1990 classic by Curcio et al.

[2] A formula for human retinal ganglion cell receptive field density as a function of visual field location

http://jov.arvojournals.org/article.aspx?articleid=2279458

Seems like some genius decided that an MIT ‘OpenCourseWare’ was not to be ‘open’ anymore.

At least from where I live…

@!%$$µ***!0@ !!!1!!

[edit] oh… well, forget about it. seems like it was more of a technical issue. Misleading message from Youtube though. Seems fixed now.

If it makes you feel any better - I get the same thing in Minnesota.

There are a lot of interesting thoughts from a morphology perspective here, but what about the principles behind all this structure? First of all, how do you see invariant representation of visual patterns being realized in your model?

It seems a bit much to ask at this point.

The level of description at this point is of a single fragment of cortex with the possibility that at the association cortex there is a stable representation.

This is at a distance of at least 4 maps away from V1.

The WHAT and WHERE streams are surely involved in these representations and are rightfully part of the answer to your question but they have not come up yet in this thread.

In private discussions with @gmirey we have been thrashing through these levels of discussion, in particular the 3 visual streams model and that level of representation. Tackling the system-level issues is an important part of the visual puzzle, but perhaps this should be in its own thread.

Hi

If I had to write the synopsis of this project at this point, it would be:

- try to model current knowledge about retina/LGN/V1 as precisely as possible

- by exposing the model to visual stimuli, try to work out some of the missing parts of V1 architecture and learning rules, towards expected responses (simple cells, complex cells, color blobs…)

- hopefully get a clue of what kind of information cells are interested in for various cortical layers, and possibly part of the thalamus’s role as well.

So, I don’t have an a priori result leading to invariant representation. But I’m hoping to get an understanding towards it.

@Bitking @gmirey I think any new digging in this direction can help to find some useful insights; nevertheless, the risk of losing some tiny but crucial details is too high. It’s like an attempt to reproduce a car having all parts but the spark plugs: if you have an idea of an internal combustion engine, you can find a way around or even invent something better, but without understanding the principle you can spend an eternity trying to make it work while having it 99.9% ready.

Plus, retina/LGN/V1 is not the most interesting/important part of the story. You can use an absolutely different visual input and edge processing, and everything else will work just fine.

I don’t want to discourage you from what you are doing, but imagine you successfully did everything you planned - what would be your next step? It’s unlikely this part will give you a sufficient basis for going further.