I already posted this in another thread, but this is probably the right place for it.

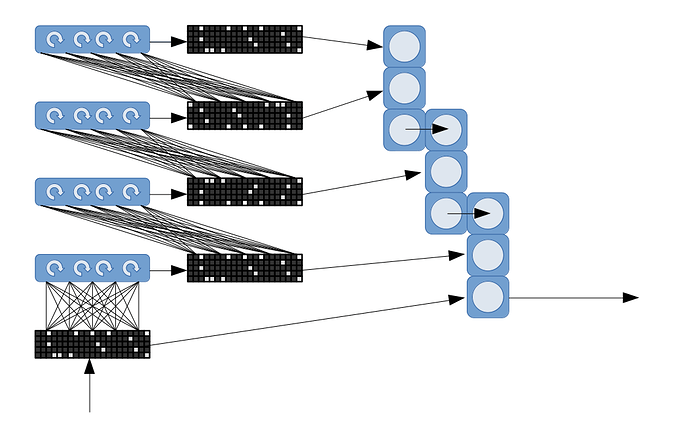

I implemented a binary version of the triadic memory that compresses each synapse down to a single bit in a dense array. It takes up 8x less RAM than storing one byte per synapse, at the cost of precision in the weights.
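Here is a minimal sketch of the idea, assuming a straightforward layout rather than my actual code: synapse (x, y, z) maps to bit `(x*N + y)*N + z` of a packed `uint8` array, storing sets every (x_i, y_j, z_k) bit, and recall sums the set bits over all (x_i, y_j) pairs and keeps the top P indices. The class and method names (`BinaryTriadicMemory`, `store`, `query_z`) are hypothetical, and the top-P recall rule is an assumption modeled on the counter-based version.

```python
import numpy as np


class BinaryTriadicMemory:
    """Hypothetical sketch: a triadic memory whose synapses are single bits.

    Synapse (x, y, z) lives at bit (x*n + y)*n + z of a dense, bit-packed
    uint8 array, so n**3 synapses need n**3 / 8 bytes.
    """

    def __init__(self, n: int, p: int):
        self.n, self.p = n, p
        self.bits = np.zeros((n * n * n + 7) // 8, dtype=np.uint8)

    def _flat(self, x, y, z):
        # Flat bit index of synapse (x, y, z); works elementwise on arrays.
        return (x * self.n + y) * self.n + z

    def store(self, x, y, z):
        """Set the bit for every (x_i, y_j, z_k) combination of active indices."""
        xs, ys, zs = np.meshgrid(
            np.asarray(x, dtype=np.int64),
            np.asarray(y, dtype=np.int64),
            np.asarray(z, dtype=np.int64),
            indexing="ij",
        )
        idx = self._flat(xs.ravel(), ys.ravel(), zs.ravel())
        masks = (1 << (idx & 7)).astype(np.uint8)
        # bitwise_or.at is unbuffered, so several synapses in one byte all stick.
        np.bitwise_or.at(self.bits, idx >> 3, masks)

    def query_z(self, x, y):
        """Recall z: count set bits over all (x_i, y_j) pairs, keep the top P."""
        zs = np.arange(self.n, dtype=np.int64)
        counts = np.zeros(self.n, dtype=np.int64)
        for i in x:
            for j in y:
                idx = self._flat(int(i), int(j), zs)
                counts += (self.bits[idx >> 3] >> (idx & 7)) & 1
        # Assumed rule: keep the P highest sums; ties broken arbitrarily.
        return np.sort(np.argsort(counts)[-self.p:])
```

With N=1000, `BinaryTriadicMemory(1000, 10)` allocates 125,000,000 bytes. Because each weight is only 0 or 1, storing the same triplet twice changes nothing, which is where the precision loss comes from.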

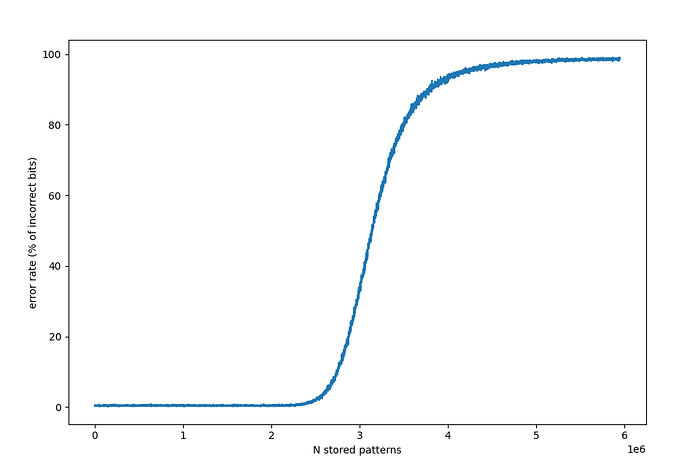

I made a plot of the retrieval error rate for random triplets as a function of the number of stored triplets, on an N=1000, P=10 memory.

It starts degrading noticeably at around 2.5M stored random triplets.
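A measurement like this can be sketched as follows, assuming "error" means the recalled z differs from the stored z in at least one bit (an assumption; other metrics such as bit-level overlap are possible). It uses an unpacked `bool` cube, which has the same 0/1 synapse semantics but one byte per element in numpy, so it is only practical at small N. The function name `retrieval_error_rate` is hypothetical.

```python
import numpy as np


def retrieval_error_rate(n: int, p: int, m: int, seed: int = 0) -> float:
    """Hypothetical sketch: store m random triplets in a binary triadic memory,
    recall z from (x, y) for each, and return the fraction recalled wrongly."""
    rng = np.random.default_rng(seed)
    # Same 0/1 synapses as a bit-packed array, but one byte per element here.
    mem = np.zeros((n, n, n), dtype=bool)
    triplets = [
        tuple(np.sort(rng.choice(n, size=p, replace=False)) for _ in range(3))
        for _ in range(m)
    ]
    for x, y, z in triplets:
        mem[np.ix_(x, y, z)] = True  # set every (x_i, y_j, z_k) synapse

    errors = 0
    for x, y, z in triplets:
        counts = mem[np.ix_(x, y)].sum(axis=(0, 1))  # set bits per z, shape (n,)
        recalled = np.sort(np.argsort(counts)[-p:])   # assumed top-P recall
        errors += not np.array_equal(recalled, z)     # exact-match criterion
    return errors / m
```

Calling it for increasing `m` at a fixed small `n` and `p` traces out the same kind of curve as the plot, just at a much smaller scale.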

This implementation takes up 125 MB of RAM for N=1000.

Assuming you need at least 300 bits to store a triplet in raw address form (3 SDRs × 10 active bits × 10 bits per index for N=1000), 125 MB is enough for about 3.3M triplets, so a binary version of the triadic memory has about 75% theoretical storage efficiency.
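The arithmetic behind these figures, written out under the stated assumption that each of the three SDRs is stored as P = 10 indices of ⌈log2 1000⌉ = 10 bits each, and taking 2.5M as the point where degradation sets in:

```latex
\begin{aligned}
\text{RAM} &= \frac{N^3}{8}\ \text{bytes} = \frac{10^9}{8} = 1.25\times10^{8}\ \text{bytes} = 125\ \text{MB} \\
b_{\text{triplet}} &= 3 \cdot P \cdot \lceil \log_2 N \rceil = 3 \cdot 10 \cdot 10 = 300\ \text{bits} \\
M_{\max} &= \frac{N^3}{b_{\text{triplet}}} = \frac{10^9}{300} \approx 3.33\times10^{6}\ \text{triplets} \\
\eta &= \frac{2.5\times10^{6}}{3.33\times10^{6}} \approx 0.75
\end{aligned}
```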