I’m happy with specific encoders for different data types.

And even with variable/controllable encoders for the same input channel.

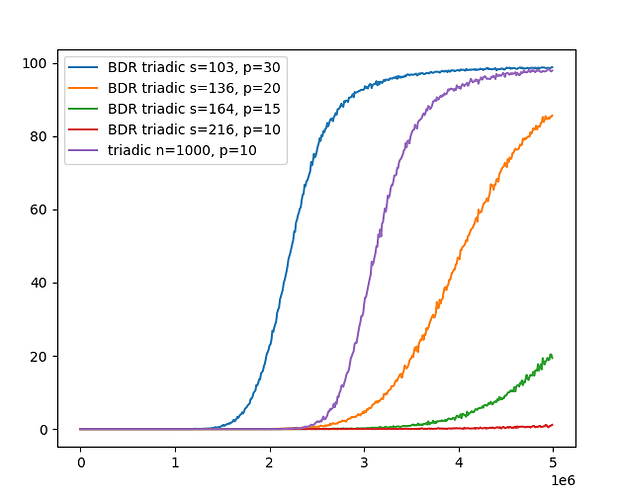

I did some experiments with a variation of the triadic memory.

this variation takes as input what I call BDRs (Block Distributed Representaitons), which are essentially like regular SDRs but with constrained in blocks where each block can only have exactly one one bit.

here I’ll define S as the size of each block and P the number of blocks.

BDRs allow for regular bit pair addressing but also allow for a constrained version of it where pairs are computed per block, so the number of output addresses is equal to the number of blocks.

A BDR memory takes up S³ * P² space which is less than the SDR equivalent so it has less capacity, but we can make up for it by tweaking S and P so the ram usage matches a SDR memory.

here are the results of the tests I did (note all those curves are from memories tweaked to take up the same amount of space in ram)

I am not sure what to make of those results.

I have no idea how to use such structure but it is interesting too.

At first sight all of them can be represented as dense int vector of size P with values between 0 and S-1

This kind of data is pretty well indexed with “classical” approximate near neighbor (ANN) indexing, but how and in what sense that compares to your structure is hard to fathom.

well, you can use this structure as a regular associative memory, I was just exploring different ways to compute the address locations, this method seems to work just as well as the regular triadic memory for some choices of P and S but the effective SDR size tends to be larger.

it is exactly what we want to find a general encoder, but I still hope.

In HTM, SDR of two neighbor values have to share one common bit, but I believe that Triadic Memory does not require it.

Do you know about the minimum number of bit overlapping by TriadicMemory?

It’s all a matter of collisions & distribution there can’t be a minimum overlap rule.

e.g. a pair of overlapping bits is sufficient IF there-s no other overlap as “interference”.

Or all bits overlapping might be insufficient if the initial data is old&overridden by more recent writes or the memory is saturated and cant retrieve

Is nothing magic there, writing a X,Y pair of P=10 bit sdrs into triadic memory just writes the same value of Z at 10x10 = 100 memory locations. Writing random SDRs as you see the charts above, has little chance to mess up things till a critical amount of data is stored.

By the way, I did a test of storage capacity for the byte triadic memory and am a little surprized.

the byte triadic memory can actually store more data than the binary version indeed, but @POv said that you can store about one million associations before it fails.

and its true that the first failure happened at around 1.1M for my test but it was just a single bit, I’d brush a single bit off as irrelevant. it took 4.2M writes for the error rate to cross the 10% mark which would be equivalent to 1 bad bit out of 10 on average, which I think is still fairly low.

it seems like the binary version does have a sharper cuttoff for its failure while the byte version has a graceful degradation failure more.

so what is the criteria for “faliure” you guys are using here?

I’m back.

Just did a test for a N=2000, P=10 binary instance, which uses the same RAM as a N=1000, P=10 byte triadic.

It went past 5M writes without a single kink in the error rate curve. its dead on 0.0% error.

I’d run the test for longer to see when it fails but I got bored, I’ll leave it running for longer once I have some movie to watch while waiting.

Capacity should be proportional with (N/P)**3

if so then the capacity would be around 16M writes?

in that range I guess. eight times bigger than whatever 10/1000 capacity is.

Intuitively the overall space is 8 times bigger while P remains 10

The latest C implementation (available on GitHub) of Dyadic Memory and Triadic Memory is now based on 1-bit storage locations.

This reduces memory consumption to 1/8, compared with the previous version which was based on 8-bit memory counters.

In Triadic Memory, the query performance changed: Querying for z became somewhat slower, while querying for x and y is now much faster.

An advantage of 1-bit storage is that the same association can be stored again and again without changing the state of the memory.

I’ve archived the original implementations as “Version 1”.

Thanks to @JarvisGoBrr for your discovery that 1-bit storage is feasible!

I just checked my implementations, and found that Z queries are 5x slower using a bitvector for storage, but X queries are 2x faster, and Y queries are 3x faster, but Z queries are still the fastest, about 1.5x faster than Y queries. Writes with the one-bit storage are a little faster (like ten percent) than one-byte storage.

However, it’s much better about storing sequences, around 4x better recall for forty sequences of fifty tokens vs. the byte-storage sequence memory.

There should be room for optimization – the slowdown in z queries was less than 2x in the C implementation.

well, I only discovered you can use single bits because I already had some code made to optmize binary sparse matrix projections.

I havent done any benchmarks to compare with other methods but basically I stole this trick.

essentially you get the position of the set bits directly without iterating. you can iterate directly over 64 bit words instead of bytes and skip all the zeros.

it does leave a small amount of unused padding bits for some values of N but that’s a small price to pay.

also another thing to consider is that this way, the performance is proportional to sparsity, so it will slow down when it starts to run out of capacity

in pseudocode it looks kinda like this:

z_col_size = ceil(N / 64)

for x in sdrx:

for y in sdry

zcol_start = (x + N * y) * z_col_size

counter = 0

for i in range(z_col_size):

tmp_word: uint64_t = bit_storage[i]

while tmp_word:

rightmost_bit = (tmp_word & -tmp_word)

tmp_word ^= rightmost_bit # clear bit

otuput_sum[counter + bit_position(rightmost_bit)] += 1

counter += 64

Our implementations are nearly identical in terms of address calculation and access patterns, and I still went from 50k q/s for Z to 10k, for 1000/10 N/P SDRs. I’m not mad, 10k q/s is plenty fast as far as I’m concerned ![]()

Ah, I now understand why couldn’t do X or Y queries!

to be honest, I believe you can, I’m just lazy, @POv is already doing it in his implementation, I don’t think replacing uint8_t with uint64_t will make much difference for his code.

Oh, you can in general; @POv and I are doing the same thing in terms of how queries are done. I just replaced my backing store with a packed integer vector that supports general indexed access to individual bits, ie, src/tri_mem.rs · main · Joe Ardent / triadic rust · GitLab

I disabled some bounds checking in the storage access and picked up a 2x improvement in the Z reads, along with moderate improvements in X and Y reads. Z reads are still about 2.5x slower than they used to be, but this is still way more than fast enough.