Thanks!

The input for the network is patterns for different instruments, the chord intervals are specified separately (not learned … yet  )

)

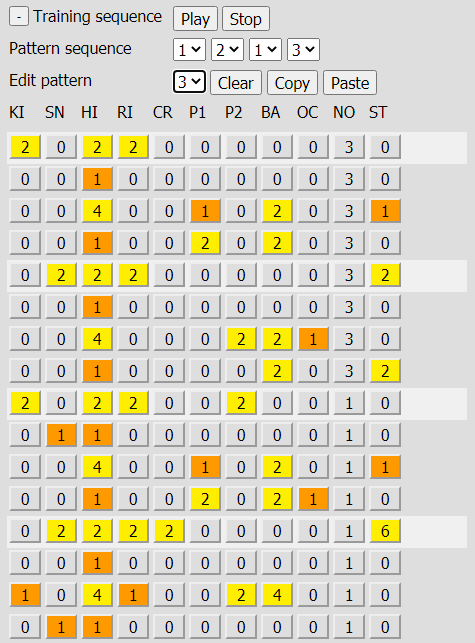

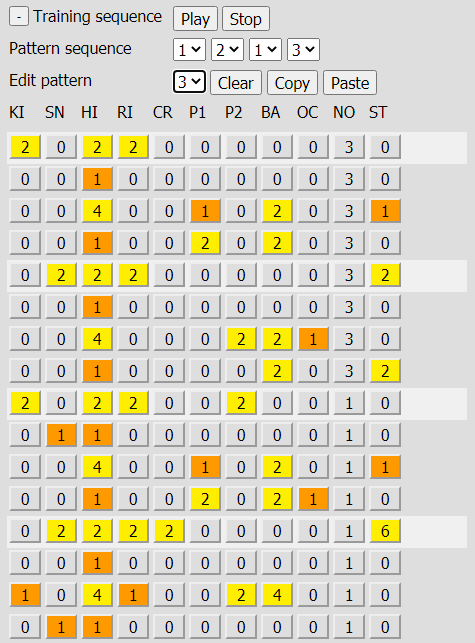

The current assumption is standard 4 / 4 musical notation. This translates into 4 beats per bar with 4 steps per beat so a pattern would be 16 steps. The user can create up to 4 of these patterns (called a ‘sequence’) and then train the network (using only this single training sequence).

This image shows a pattern in the interface (columns; KIck, SNare, HIhat, RIde, CRash, Percussion1, Percussion2, BAss, OCtave, NOte, STab), each row is one step;

For most columns; a ‘0’ (zero) is ‘nothing’, ‘1’ is ‘play normal’, ‘2’ is ‘play accent’.

The Bass column can go up to 16 where the odd numbers are normal play and even numbers are accents while the divider represents the duration of the note (so 12 would be an accent with a duration of 6 steps).

The note column represents the bass note within the ‘current’ chord interval (up to 4 notes).

The stab column just works similar to the bass column (combining accent & duration; notes cover the entire ‘current’ chord interval).

Because the number of features a single network column can learn is limited (~5) a pattern is split up into 3 SDRs (one SDR per pattern step for each instrument group).

An example set of SDRs for each of the 16 steps will look like this

Group 1 pattern (7*6);

(Kick, Snare, Hihat, Cymbals)

01: 0000111100000000110000000000000000000011

02: 1100001100000011000000110000000000000000

03: 1100001100000000000011110000000000000000

04: 1100001100000011000000110000000000000000

05: 1100000000110000110000000000000000110000

06: 1100001100000011000000110000000000000000

07: 1100001100000000000011110000000000000000

08: 1100001100000011000000110000000000000000

09: 0000111100000000110000000000000000110000

10: 1100001100000011000000110000000000000000

11: 1100001100000000000011110000000000000000

12: 1100001100000011000000110000000000000000

13: 1100000000110000110000000000000000110000

14: 1100001100000011000000110000000000000000

15: 1100001100000000000011000000110000000000

16: 1100001100000011000000110000000000000000

An extra ‘context’ SDR is added that represents the ‘musical structure’; it combines pattern number, beat and beat step into a single value. (I imagine this as the musical version of a grid cell output SDR).

In my HTM implementation I created what I call a ‘Merger’ which can combine or concatenate SDRs (and optionally subsamble and/or distort). So my network has 4 inputs; Context, Group1, Group2 and Group3. The first layer is a merger for each group that concatenates the context SDR with the input SDR for each group. The rest is fairly standard; Pooler, Memory and then a layer of classifiers for each pattern column (set to predict the ‘next’ step … in this implementation, the original pattern values are included in the SDRs so they can be associated with SDRs by the Classifiers)

A more detailed description of the network from the debug logging;

Inputs:

<- Context

<- Group1

<- Group2

<- Group3

Layer: 0

Group1Merger: MergerConfig: ?

<- 0 = Context

<- 1 = Group1

-> SDR: 24*24

Group2Merger: MergerConfig: ?

<- 0 = Context

<- 1 = Group2

-> SDR: 25*25

Group3Merger: MergerConfig: ?

<- 0 = Context

<- 1 = Group3

-> SDR: 24*24

Layer: 1

Group1Pooler: SpatialPoolerConfig: 24*24*1

<- 0 = Group1Merger/0

-> 0 = Active columns: 32*32, on bits: 20

Group2Pooler: SpatialPoolerConfig: 25*25*1

<- 0 = Group2Merger/0

-> 0 = Active columns: 32*32, on bits: 20

Group3Pooler: SpatialPoolerConfig: 24*24*1

<- 0 = Group3Merger/0

-> 0 = Active columns: 32*32, on bits: 20

Layer: 2

Group1Memory: TemporalMemoryConfig: 32*32*16

<- 0 = Group1Pooler/0

-> 0 = Active cells: 512*32

-> 1 = Bursting columns: 32*32

-> 2 = Predictive cells: 512*32

-> 3 = Winner cells: 512*32

Group2Memory: TemporalMemoryConfig: 32*32*16

<- 0 = Group2Pooler/0

-> 0 = Active cells: 512*32

-> 1 = Bursting columns: 32*32

-> 2 = Predictive cells: 512*32

-> 3 = Winner cells: 512*32

Group3Memory: TemporalMemoryConfig: 32*32*16

<- 0 = Group3Pooler/0

-> 0 = Active cells: 512*32

-> 1 = Bursting columns: 32*32

-> 2 = Predictive cells: 512*32

-> 3 = Winner cells: 512*32

Layer: 3

KickClassifier: ClassifierConfig: 512*32 (Kick)

<- 0 = Group1Memory/0

<- 1 = Group1

-> 0 = Classifications: 1*1

SnareClassifier: ClassifierConfig: 512*32 (Snare)

<- 0 = Group1Memory/0

<- 1 = Group1

-> 0 = Classifications: 1*1

HihatClassifier: ClassifierConfig: 512*32 (Hihat)

<- 0 = Group1Memory/0

<- 1 = Group1

-> 0 = Classifications: 1*1

RideClassifier: ClassifierConfig: 512*32 (Ride)

<- 0 = Group1Memory/0

<- 1 = Group1

-> 0 = Classifications: 1*1

CrashClassifier: ClassifierConfig: 512*32 (Crash)

<- 0 = Group1Memory/0

<- 1 = Group1

-> 0 = Classifications: 1*1

Percussion1Classifier: ClassifierConfig: 512*32 (Percussion1)

<- 0 = Group2Memory/0

<- 1 = Group2

-> 0 = Classifications: 1*1

Percussion2Classifier: ClassifierConfig: 512*32 (Percussion2)

<- 0 = Group2Memory/0

<- 1 = Group2

-> 0 = Classifications: 1*1

BassClassifier: ClassifierConfig: 512*32 (Bass)

<- 0 = Group2Memory/0

<- 1 = Group2

-> 0 = Classifications: 1*1

OctaveClassifier: ClassifierConfig: 512*32 (Octave)

<- 0 = Group2Memory/0

<- 1 = Group2

-> 0 = Classifications: 1*1

NoteClassifier: ClassifierConfig: 512*32 (Note)

<- 0 = Group2Memory/0

<- 1 = Group2

-> 0 = Classifications: 1*1

StabClassifier: ClassifierConfig: 512*32 (Stab)

<- 0 = Group3Memory/0

<- 1 = Group3

-> 0 = Classifications: 1*1

Training consists of repeatedly feeding the 4 input patterns, in sequence to the network (about 16 - 24 cycles or when the classifiers reach +99% accuracy on average over 256 steps). First 4 cycles, only spatial pooler learning, after a total of 8 cycles temporal memory is added and after a total 12 cycles the classifiers are added. More cycles can be trained if the classifiers do not reach 99% accuracy.

(Network resets are not used; not in training and not for pattern generation; segment creation subsampling is used to smooth out bursting)

Generating a new sequence of 4 patterns using the trained network starts by assuming the last prediction of the last training cycle is correct, generating a context SDR for the next step and then feeding that to the network to generate the next step (and repeat). ‘Creativity’ can be injected here by tweaking the distortion of the mergers in the network and/or distorting the generated context SDR (creating time signature and/or feedback distortion). These ‘creativity’ settings can be configured in the application under ‘Generators’. (I kind of imagine this is what happens when we dream, hence the name ‘MidiDreamer’)

As soon as a generated sequence is played, the network will be used to generate a new sequence for the generator that created it. That way, the user always has a fresh sequence available to play next, even if they decide to change it right before the automatic switch. (By default, the sequencer just cycles through the generators continuously).

I hope this helps, please let me know if you have more questions!

)

)