This is an extension of the Hierarchical Temporal Memory (HTM) model that adds apical dendrites. The proposed function of apical dendrites in pyramidal neurons is to implement the Global Neuronal Workspace (GNW) theory.

Results

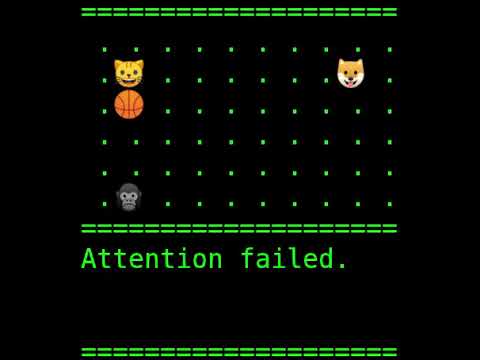

To demonstrate the model in action, I will show that it can pass a selective attention test. In the canonical selective attention test, the viewer watches a group of people moving around and is directed to focus on some of them (and implicitly told to ignore all the others). The test demonstrates our ability to pay attention and to ignore distractions. You can watch the video on YouTube ("selective attention test").

The image above shows the apical dendrites solving the selective attention test. The RED areas indicate what the model is paying attention to.

I implemented the model in Python using the HTM.Core library. Its source code is available on GitHub at ctrl-z-9000-times/apical_dendrites ("Selective Attention Test").

Model

Begin with a model of a pyramidal neuron. Several algorithms can plausibly mimic a neuron, such as the Spatial Pooler or the Temporal Memory. For this demonstration I used an encoder.

A new SDR is introduced to represent the global workspace. Each pyramidal neuron has a set of apical dendrites that function identically to the distal dendrites of the Temporal Memory, except that they sample their inputs from the global workspace SDR. Synaptic input to the soma and basal dendrites can cause a neuron to activate. However, a neuron only participates in the global workspace SDR if it is active and its apical dendrites are also in a predictive state. The apical dendrites learn whenever the neuron activates (at any level).
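The gating and learning rules above can be sketched in plain NumPy. This is a minimal sketch under stated assumptions, not the HTM.Core-based implementation from the repository. It assumes one apical segment per neuron and a workspace SDR with one bit per neuron, and it uses Temporal-Memory-style permanence learning. The class `ApicalLayer` and all parameter names and values are hypothetical.

```python
import numpy as np


class ApicalLayer:
    """Hypothetical sketch: pyramidal neurons with one apical segment each.

    The workspace SDR has one bit per neuron. Each apical segment holds a
    permanence for every workspace bit, like a Temporal Memory distal segment
    that samples the workspace instead of the local layer.
    """

    def __init__(self, num_neurons, connected=0.5, threshold=3,
                 increment=0.1, decrement=0.05, seed=0):
        rng = np.random.default_rng(seed)
        # Start with weak synapses, all below the `connected` permanence.
        self.permanence = rng.uniform(0.0, 0.2, size=(num_neurons, num_neurons))
        self.connected = connected
        self.threshold = threshold
        self.increment = increment
        self.decrement = decrement

    def predictive(self, prev_workspace):
        """True for neurons whose apical segment sees enough active workspace bits."""
        is_connected = self.permanence >= self.connected
        overlap = (is_connected & prev_workspace).sum(axis=1)
        return overlap >= self.threshold

    def step(self, local_active, prev_workspace):
        """Compute the new workspace SDR, then learn on every active neuron."""
        # Join the workspace only if locally active AND apically predicted.
        workspace = local_active & self.predictive(prev_workspace)
        # Apical learning for each locally active neuron: reinforce synapses
        # from the previous workspace bits, weaken all the others.
        delta = np.where(prev_workspace, self.increment, -self.decrement)
        self.permanence[local_active] += delta
        np.clip(self.permanence, 0.0, 1.0, out=self.permanence)
        return workspace


# Usage: one attended object (neurons 0-4) and one distractor (neurons 5-9).
n = 20
obj = np.zeros(n, dtype=bool)
obj[:5] = True
distractor = np.zeros(n, dtype=bool)
distractor[5:10] = True

layer = ApicalLayer(n)
print(layer.step(obj, prev_workspace=obj).any())  # False: untrained, attention fails
for _ in range(10):                                # repeated promotions of the object
    layer.step(obj, prev_workspace=obj)
ws = obj
for _ in range(3):
    ws = layer.step(obj | distractor, prev_workspace=ws)
print(np.flatnonzero(ws))                          # [0 1 2 3 4]
```

In this simplified form, a distractor that stays active next to the attended object also grows apical synapses onto it. How long the sketch keeps ignoring it therefore depends on the `increment` and `connected` values.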

The model is trained by promoting random objects into the global workspace. This means finding a group of neurons that are locally active but not active in the global workspace, and forcing them into the workspace SDR. When an object is first promoted to global attention, the attention immediately fails. After each failure, the apical dendrites learn to keep the object in the workspace the next time. Whenever the model detects that there is no activity in the global workspace SDR, it promotes a new random object into it.
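The promotion-based training procedure can be sketched as a loop around any apical gating step. This is a hypothetical NumPy sketch, not the repository's code. It assumes the group of neurons belonging to each object is known in advance as a boolean mask (for instance, supplied by the encoder). The functions `promote` and `run` and the stand-in `step` are illustrative names only.

```python
import numpy as np


def promote(local_active, workspace, objects, rng):
    """Force one random object's locally active neurons into the workspace.

    `objects` is a list of boolean masks, one per object; where these groups
    come from is an assumption of this sketch.
    """
    # Neurons that are locally active but not yet in the global workspace.
    eligible = local_active & ~workspace
    candidates = [mask for mask in objects if (eligible & mask).any()]
    if not candidates:
        return workspace.copy()            # nothing visible to promote
    chosen = candidates[rng.integers(len(candidates))]
    return workspace | (eligible & chosen)


def run(frames, objects, step, seed=0):
    """Training loop: `step(local_active, prev_workspace)` does apical gating
    and learning; whenever the workspace goes empty, promote a new object."""
    rng = np.random.default_rng(seed)
    workspace = np.zeros(len(objects[0]), dtype=bool)
    history = []
    for local_active in frames:
        workspace = step(local_active, workspace)
        if not workspace.any():            # attention failed (or never started)
            workspace = promote(local_active, workspace, objects, rng)
        history.append(workspace)
    return history


# Usage: two hypothetical objects; object A leaves the scene after three frames.
A = np.zeros(10, dtype=bool)
A[:5] = True
B = np.zeros(10, dtype=bool)
B[5:] = True
frames = [A | B] * 3 + [B] * 2
# Stand-in `step` with perfect apical memory: keep whatever is still active.
history = run(frames, [A, B], step=lambda local, ws: local & ws)
```

With a real apical model as `step`, attention fails on the first frames, and the loop keeps re-promoting objects until the apical dendrites have learned to sustain them. The lambda used here skips that learning phase, which makes the sustain-and-replace behavior easy to see.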

References

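Larkum, M. (2013). A cellular mechanism for cortical associations: an organizing principle for the cerebral cortex. Trends in Neurosciences. https://www.projekte.hu-berlin.de/en/larkum/research/larkum_tins_2013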

This article explains the neural mechanisms inside the apical dendrites.

S. Dehaene and Y. Christen (eds.), Characterizing Consciousness: From Cognition to the Clinic? Research and Perspectives in Neurosciences. © Springer-Verlag Berlin Heidelberg, 2011. DOI 10.1007/978-3-642-18015-6_4.

“The project of relating subjective reports of conscious perception to objective behavioral and neuroscientific findings is now under way in many laboratories throughout the world. The [Global Neuronal Workspace (GNW)] hypothesis provides one possible coherent framework within which these disparate observations can be integrated.”

“According to the GNW hypothesis, conscious access proceeds in two successive phases (see also Chun and Potter 1995; Lamme and Roelfsema 2000). In a first phase, lasting from ~100 to ~300 ms, the stimulus climbs up the cortical hierarchy of processors in a primarily bottom–up and non-conscious manner. In a second phase, if the stimulus is selected for its adequacy to current goals and attention state, it is amplified in a top–down manner and becomes maintained by sustained activity of a fraction of GNW neurons, the rest being inhibited. The entire workspace is globally interconnected in such a way that only one such conscious representation can be active at any given time (see Sergent et al. 2005; Sigman and Dehaene 2005, 2008). This all-or-none invasive property distinguishes it from peripheral processors in which, due to local patterns of connections, several representations with different formats may coexist. Simulations, further detailed below, indicate that the late global phase is characterized by several unique features. These predicted “signatures” of conscious access include a sudden, late and sustained firing in GNW neurons [referred to as conscious “ignition” in Dehaene et al. (2003)], a late sensory amplification in relevant processor neurons, and an increase in high-frequency oscillations and long distance phase synchrony.”

“The state of activation of GNW neurons is assumed to be globally regulated by vigilance signals from the ascending reticular activating system [which is located in the brainstem] that are powerful enough to control major transitions between the awake state (GNW active) and slow-wave sleep (GNW inactive) states. In the resting awake state, the brain is the seat of an important ongoing metabolic activity (Gusnard and Raichle 2001). An important statement of the GNW model is that the GNW network is the seat of a particular kind of brain-scale activity state characterized by spontaneous “ignitions” similar to those that can be elicited by external stimuli, but occurring endogenously (Dehaene and Changeux 2005). A representation that has invaded the workspace may remain active in an autonomous manner and resist changes in peripheral activity. If it is negatively evaluated, or if attention fails, it may, however, be spontaneously and randomly replaced by another discrete combination of workspace neurons, thus implementing an active “generator of diversity” that constantly projects and tests hypotheses on the outside world (Dehaene and Changeux 1989, 1991, 1997). The dynamics of workspace neuron activity is thus characterized by a constant flow of individual coherent episodes of variable duration, selected by specialized reward processors.”