I have been working on a simple and flexible method for parametric density estimation: modeling the density as just a linear combination of chosen functions. It turns out that for an orthonormal family of functions, L2 optimization says that the optimal coefficient is just the average of the given function over the sample:

rho(x) = sum_f a_f f(x)

for

a_f = average of f(x) over the sample
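
A minimal sketch of this estimator in one dimension, assuming the sample already lies in [0,1] and taking rescaled Legendre polynomials as the orthonormal family (one possible choice; the function names `basis`, `fit` and `density` are only illustrative):

```python
import numpy as np

def basis(x, m):
    """Orthonormal polynomials f_0..f_m on [0, 1] (rescaled Legendre), evaluated at x."""
    x = np.asarray(x, dtype=float)
    # sqrt(2j+1) * P_j(2x - 1) has unit L2 norm on [0, 1]
    return np.stack([np.sqrt(2 * j + 1) * np.polynomial.Legendre.basis(j)(2 * x - 1)
                     for j in range(m + 1)])

def fit(sample, m):
    """a_f = average of f(x) over the sample, one coefficient per basis function."""
    return basis(sample, m).mean(axis=1)

def density(a, x):
    """rho(x) = sum_f a_f f(x)."""
    return a @ basis(x, len(a) - 1)

# Illustrative data: a Beta(2,2) sample, whose true density is 6x(1-x)
rng = np.random.default_rng(0)
sample = rng.beta(2, 2, size=100_000)
a = fit(sample, 3)
print(a)                     # a[0] is exactly 1 because f_0 = 1
print(density(a, 0.5))       # should be close to the true value 6 * 0.5 * 0.5
```

Note that a density estimated this way integrates to 1 but can dip below zero for small samples or poorly chosen bases, so in practice it may need some correction.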

It is very convenient for estimating multidimensional densities - we can cheaply and independently model chosen types of correlations between variables:

- correlations within a subset C of the variables let us infer any one of these variables from the remaining |C|-1 (the correlations can be used to predict in any direction),

- for each such C we additionally have freedom in choosing the basis: e.g. the first coefficient (j=(1,1)) describes whether increasing the given variable increases or decreases the second variable. The second coefficient (j=(1,2)) analogously describes whether it concentrates or spreads the second variable, etc.

- finally, together they form a complete basis: for a large enough sample they could theoretically recover the exact joint probability distribution the sample comes from.
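
The points above can be sketched for a pair of variables, assuming both are already in [0,1] and using products of one-dimensional orthonormal polynomials as the basis. The names `fit_pair` and `conditional_coeffs` and the toy data are hypothetical, chosen only for illustration:

```python
import numpy as np

def basis(x, m):
    """Orthonormal rescaled Legendre polynomials f_0..f_m on [0, 1]."""
    x = np.asarray(x, dtype=float)
    return np.stack([np.sqrt(2 * j + 1) * np.polynomial.Legendre.basis(j)(2 * x - 1)
                     for j in range(m + 1)])

def fit_pair(x1, x2, m):
    """a[j, k] = average of f_j(x1) * f_k(x2) over the sample."""
    F1, F2 = basis(x1, m), basis(x2, m)      # each of shape (m+1, n)
    return F1 @ F2.T / F1.shape[1]

def conditional_coeffs(a, x1):
    """Coefficients of the estimated density of x2 for a fixed value of x1,
    normalized so that the f_0 coefficient is 1 (assumes it is positive)."""
    c = basis(x1, a.shape[0] - 1) @ a        # c[k] = sum_j f_j(x1) a[j, k]
    return c / c[0]

# Toy data: x2 tends to grow together with x1
rng = np.random.default_rng(0)
x1 = rng.random(100_000)
x2 = (x1 + rng.random(100_000)) / 2

a = fit_pair(x1, x2, 2)
print(a[1, 1])                     # positive: growing x1 tends to grow x2
print(fit_pair(x1, 1 - x2, 2)[1, 1])   # negative after flipping x2
print(conditional_coeffs(a, 0.9))  # f_1 coefficient > 0: x2 shifted upward given large x1
```

The same coefficient matrix can be read in the other direction (fixing x2 and predicting x1) by using `a.T`, which is one way to see that the correlations imply in any direction.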

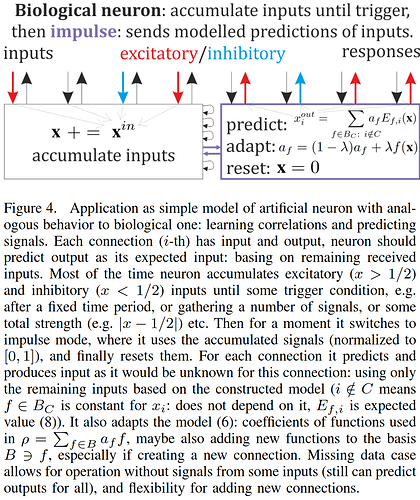

I thought it would be perfect for realizing an artificial neuron, one which models correlations between its inputs and tries to predict them, e.g.:

It was designed for high-order models of dense signals, but it can also be adapted for sparse signals like those in HTM.

Arxiv: https://arxiv.org/pdf/1804.06218

This simple and flexible approach seems to be completely unknown(?). If it looks promising for artificial neurons(?), I would gladly help with adapting it.

PS. A simple interactive Mathematica demo of the basic method: http://demonstrations.wolfram.com/ParametricDensityEstimationUsingPolynomialsAndFourierSeries/