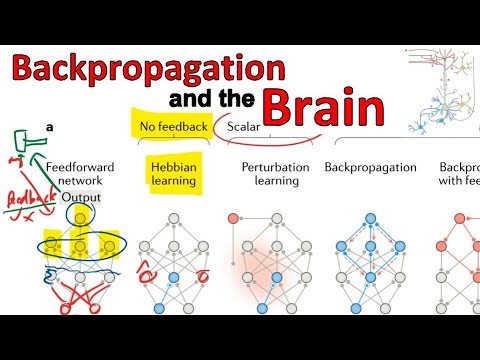

I came across this paper, which proposes a theory of how the brain might implement an approximation to backprop. I personally like this theory a lot.

They propose that, instead of propagating gradients, the brain uses a stack of autoencoders with forward and reconstruction weights. When it gets a supervision signal, it sends the desired output through the reconstruction weights to get desired activity estimates, then trains the forward weights to produce that activity locally in each layer.
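To make the idea concrete, here is a minimal sketch of plain target propagation as I read the description above. It is not the paper's exact method: the tanh units, the delta-rule update, the layer sizes, and every function name (`train_step`, `local_step`, and so on) are my own assumptions for illustration.

```python
import math
import random

def matvec(w, x):
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def tanh_layer(w, x):
    return [math.tanh(v) for v in matvec(w, x)]

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

def local_step(w, x, target, lr):
    """One delta-rule step pulling tanh(w @ x) toward target.

    Only this layer's input, output, and target are used; no gradient
    from any other layer is needed.
    """
    y = tanh_layer(w, x)
    for r in range(len(w)):
        delta = (target[r] - y[r]) * (1 - y[r] ** 2)
        for c in range(len(x)):
            w[r][c] += lr * delta * x[c]

def train_step(forward, backward, x, y_target, lr=0.1):
    """forward[l] maps layer l to layer l+1; backward[l] maps layer l+1 back to layer l.

    backward[0] is unused (None) because the input layer needs no target.
    """
    # Forward pass, keeping every layer's activity.
    acts = [x]
    for w in forward:
        acts.append(tanh_layer(w, acts[-1]))

    # Send the desired output down through the reconstruction weights
    # to get a desired activity for each hidden layer.
    targets = [None] * len(acts)
    targets[-1] = y_target
    for l in range(len(forward) - 1, 0, -1):
        targets[l] = tanh_layer(backward[l], targets[l + 1])

    # Local updates: each forward layer is trained toward its own target.
    for l, w in enumerate(forward):
        local_step(w, acts[l], targets[l + 1], lr)

    # Autoencoder half: each reconstruction layer learns to recover
    # the activity below it from the activity above it.
    for l in range(1, len(forward)):
        local_step(backward[l], acts[l + 1], acts[l], lr)

# Hypothetical toy setup: 4 inputs -> 3 hidden -> 2 outputs.
rng = random.Random(0)
forward = [rand_matrix(3, 4, rng), rand_matrix(2, 3, rng)]
backward = [None, rand_matrix(3, 2, rng)]
x = [0.2, -0.4, 0.6, 0.1]
y_target = [0.5, -0.5]
for _ in range(50):
    train_step(forward, backward, x, y_target)
```

Every weight update here depends only on signals available at that layer, which is the property that makes the scheme look more biologically plausible than backprop.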

They call it “target activity propagation”.

I like this because it sounds implementable with sparse synapses and activations.

The paper seems to be paywalled, though I managed to find a PDF online. In case you're not into a PDF egg hunt, this video explains it quite well.

https://www.nature.com/articles/s41583-020-0277-3/


I’m not very convinced by this.

Even if it is true, considering the sample efficiency of the brain vs. deep networks, it means deep learning is doing something really wrong. Yet the culprit isn't backpropagation itself but some other thing(s) they do with it.


The interesting bit is that you can't easily backpropagate through binary sparse networks, but target propagation seems to be built for that.

You summarized my thoughts.


A dense neural network layer requires n × n weights, where n is the width of the network.

If you could knit together multiple small-width neural networks into a layer, the number of weights would be much smaller, c × (small n × small n) where c is the number of subunits, and you might call that sparse.

A normal layer of width 256 would need 65536 weights.

A composite layer might need, say, 16 subunits of width 16: 16 × (16 × 16) = 4096 weights.
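The arithmetic above can be checked with a couple of one-liners. The function names are just mine for illustration.

```python
def dense_weights(n):
    """Weights in a fully connected n-to-n layer."""
    return n * n

def composite_weights(num_units, unit_width):
    """Weights in a layer built from independent small dense subunits."""
    return num_units * unit_width * unit_width

print(dense_weights(256))          # 65536
print(composite_weights(16, 16))   # 4096
print(dense_weights(256) // composite_weights(16, 16))  # 16
```

So at the same total width of 256, the composite layer uses 16 times fewer weights.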

And you can actually do the knitting with the Walsh Hadamard transform:
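As a rough sketch of what that knitting could look like: a fast Walsh-Hadamard transform mixes the whole input with no learned weights, so every subunit sees a combination of all inputs, and then each small dense block works on its own slice. The ordering (transform first, blocks second), the scaling, and the names `fwht`, `block_layer`, and `composite_layer` are my own assumptions, not a reference implementation.

```python
import random

def fwht(x):
    """Unnormalized fast Walsh-Hadamard transform; length must be a power of two."""
    x = list(x)
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        for i in range(0, n, 2 * h):
            for j in range(i, i + h):
                a, b = x[j], x[j + h]
                x[j], x[j + h] = a + b, a - b
        h *= 2
    return x

def block_layer(x, blocks):
    """Apply each small dense block to its own slice of x and concatenate the results."""
    out = []
    offset = 0
    for w in blocks:  # each w is a k x k list of lists
        k = len(w)
        chunk = x[offset:offset + k]
        out.extend(sum(w[r][c] * chunk[c] for c in range(k)) for r in range(k))
        offset += k
    return out

def composite_layer(x, blocks):
    """Mix globally with the transform (no weights), then apply the small blocks."""
    scale = len(x) ** -0.5  # keeps the transform norm-preserving
    mixed = [v * scale for v in fwht(x)]
    return block_layer(mixed, blocks)

# Hypothetical setup matching the numbers above: width 256, 16 subunits of width 16.
rng = random.Random(0)
width, k = 256, 16
blocks = [[[rng.gauss(0, k ** -0.5) for _ in range(k)] for _ in range(k)]
          for _ in range(width // k)]
x = [rng.gauss(0, 1) for _ in range(width)]
y = composite_layer(x, blocks)
```

The transform costs about n log2(n) additions and subtractions and has no parameters, so the only weights in the layer are the ones inside the small blocks.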
