It’s a crucial question for anybody who is fully invested in modern-day deep learning, for sure. My problem is that, even assuming the existence of a common cortical circuit in the neocortex, the brain consists of a myriad of highly coupled, unique functional and structural pieces that nearly all interact and thus at least influence each other’s function, if they are not outright inseparable from one another. What exactly does Hinton mean to suggest? Does he mean to say cortex could be doing backprop? Where in cortex, exactly? That is to say, where in the cortical column and its connections to other brain regions does he suggest it is happening? Does it arise through a combination of structures? The number of possible manifestations of such a claim seems unwieldy at best.

Or is he suggesting every single neuron in the brain is constantly doing this brand of spike-frequency backprop? In that case, what about the many different functional and structural types of neurons in the CNS? Does that change anything? Also, not all neurons change their spike frequency in the same way. For instance, thalamic relay neurons have a special capability of shifting their mode of firing between tonic and bursting as a property of their T-type calcium channels. This switching is orchestrated by other components in the thalamocortical system. The thalamic reticular nucleus provides inhibitory input to the relay neurons, which hyperpolarizes them and causes the inactivation gate of the T-type calcium channels to open, thus switching the firing mode from tonic to bursting. In contrast, the brainstem modulatory inputs release acetylcholine, which depolarizes the relay neurons and has the opposite effect. Input from cortex and its effect on the mode of firing in relay neurons is more complicated, and I’ll save the long explanation, but through processes known as facilitation and synaptic depression the switch of firing modes is believed to modulate the degree of detail and the manner in which information from the cortex ought to be relayed. Tonic firing expresses a linear relationship between firing frequency and signal strength, while bursting loses this signal-strength information but helps strongly reinforce relevant synapses quickly. I find it very unlikely that a topic as multi-form and complex as synaptic firing rate modulation has an answer as simple as “because backprop…backprop everywhere.”

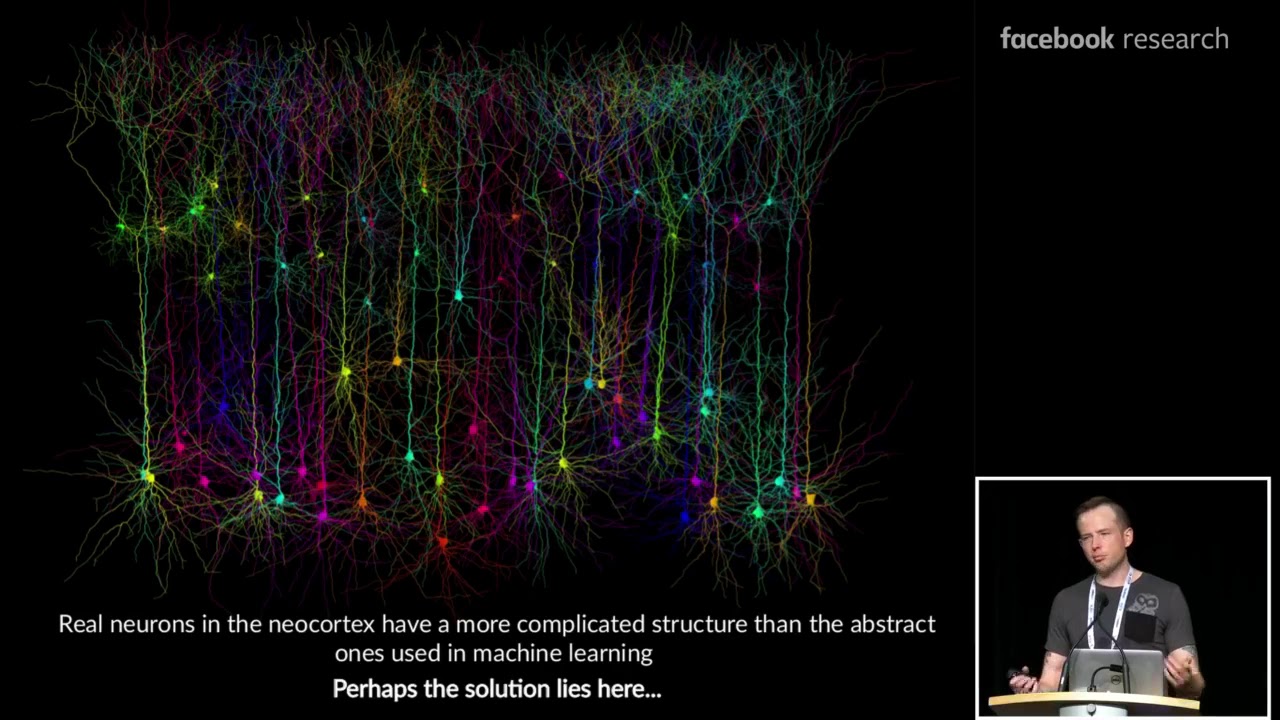

My problem with ANNs and their relationship to neuroscience is that they don’t actually have one. It seems to me the only detail that links ANNs to neuroscience is an extremely high-level concept of distinct computational units connecting to other distinct computational units that pass signals of some kind to each other. No further connection to neuroscience exists, whether in the details of the computational units themselves or in the architecture of their connections. So forgive me if I find searching for backprop in the brain to be almost silly. Not to mention, there are many aspects of HTM that are also lacking relative to (or even inconsistent with) the neuroscience literature. Most obviously, neocortex comes in 6 layers…standard HTM networks have ventured to explain perhaps the function of layers 2/3. Layer 4 typically receives input from the thalamus, and layers 5 and 6 are widely known to project back to the thalamus and other sub-cortical structures; this functionality cannot be ignored. Moreover, HTM has yet to explain layer 1, which is arguably inseparable from the idea of multiple, distinct cortical areas of the same “brain” unit talking to each other (likely in a hierarchical processing fashion), and that idea has yet to be realized, to my knowledge. Different modes of firing are also not modeled in HTM networks. In fact, the whole temporal component of neuron firing rates is ignored, to my knowledge. An HTM neuron has either “fired” or “not fired” for a timestep, and that information is not carried to the next timestep with regard to whether it should or should not fire in the next timestep. Boosting could potentially make my statement false, but that is an effort to implement homeostatic excitability control and not realistic temporal firing characteristics, either way.

ANNs of any modern type perform a single function, defined by labeled data, which they approximate through nonlinear optimization techniques (backprop). Every “neuron” in the ANN has dedicated all its representational and computational resources to this function. It makes sense in such an environment that a comprehensible error signal can be generated and used when your model has a singular, crystal clear goal in mind (curve fitting). In my experience, it is never so clear-cut in the brain. As I mentioned before, structures in the brain in general have connections going in many directions and do all kinds of different things simultaneously. Goal-driven learning akin to backprop doesn’t make sense to me at this low a level, considering the possible breadth of different purposes to which each multi-polar neuron contributes in general. In HTM, each dendritic branch is believed to be an independent pattern detector. HTM neurons (and real-life multi-polar neurons) have a large number of dendritic branches and thus can potentially recognize a large number of different patterns. This scheme stands a chance in the end because of large, sparse pattern encoding. Biological (and HTM) neurons work together, but they do so independently of one another. In contrast, the characteristics (weights) of ANN neurons are dependent on the characteristics of every other neuron in the ANN that comes before them. If you flip a weight in an ANN, it will impact the function of every neuron it talks to, which will impact the function of the neurons those talk to, cascading forward. The entire ANN is determined with regard to the single optimization function. To my knowledge, there is no evidence in neuroscience of such a global supervisory influence (perhaps whose purpose is to perform some kind of optimization) on synaptic plasticity, and the existence of one is not consistent with the concept of neurons acting independently.
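
To make the contrast in the last paragraph concrete, here is a rough toy sketch in Python. It is only an illustration under my own assumptions: the tiny two-layer network, the weights, the set-based "segments", and the names `forward` and `segment_active` are all hypothetical and are not taken from any ANN library or from an actual HTM implementation. The first half flips one first-layer weight and checks which downstream outputs move; the second half rewires one dendritic segment and checks whether the other segments' responses change.

```python
import math

def forward(x, w1, w2):
    """Toy 2-layer tanh network (hypothetical). Returns (hidden, outputs)."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    outputs = [math.tanh(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    return hidden, outputs

def segment_active(segment, active_inputs, threshold):
    """Toy dendritic segment: active if enough of its synapses see active inputs."""
    return len(segment & active_inputs) >= threshold

# --- ANN side: flip the sign of a single first-layer weight -------------------
x = [1.0, 0.5, -0.5]
w1 = [[0.2, -0.4, 0.1],
      [0.7, 0.3, -0.6]]            # 2 hidden units
w2 = [[0.5, -0.3],
      [-0.8, 0.9],
      [0.4, 0.6]]                  # 3 output units, all connected to hidden unit 0

_, y_before = forward(x, w1, w2)
w1_flipped = [row[:] for row in w1]
w1_flipped[0][0] = -w1[0][0]       # the "flipped" weight
_, y_after = forward(x, w1_flipped, w2)

# Every output that listens to hidden unit 0 shifts: the change cascades forward.
outputs_changed = [abs(a - b) > 1e-9 for a, b in zip(y_before, y_after)]

# --- Segment side: rewire one segment, leave the others alone -----------------
segments = [{1, 4, 7, 9}, {2, 3, 5, 8}, {0, 6, 10, 11}]
active_inputs = {1, 3, 4, 7}
threshold = 3

seg_before = [segment_active(s, active_inputs, threshold) for s in segments]
rewired = [{1, 4, 20, 21}] + segments[1:]   # only segment 0 changes its synapses
seg_after = [segment_active(s, active_inputs, threshold) for s in rewired]

print("ANN outputs changed:", outputs_changed)
print("segments before:", seg_before, "after:", seg_after)
```

In this toy, the independence of the segments holds by construction, since each one only compares its own synapses against the input. That is the point of the comparison rather than a result: nothing about one detector's wiring has to be re-tuned when another one changes, whereas the ANN's outputs are all coupled through the shared upstream weight.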