I always thought that models should be hierarchically segmented, but currently this is implemented as MoE, which is supervised. So this chat with GPT is about implementing it via clustering. Apparently people have tried it with centroid clustering, but not with connectivity clustering, as I think it should be.

Does it make sense, @neel_g?

ChatGPT

Deep embedded clustering models are an area of research in machine learning that focus on combining representation learning with clustering, typically in an unsupervised manner. Here are some insights and resources on the topic:

- The Deep Embedded Clustering (DEC) method is proposed as a way to learn feature representations and cluster assignments simultaneously using deep neural networks. It addresses the need for learning representations that are suitable for clustering analysis.

- Deep Embedded K-Means Clustering has been noted for gaining momentum, leveraging the representational power of deep neural networks, such as autoencoders, to enhance the clustering process. The synergy between good representations and effective clustering is emphasized, where each aspect reinforces the other.

- Contrastive deep embedded clustering is highlighted as a popular method in unsupervised learning due to its performance in data-mining applications. Models like Stacked Autoencoders are used to map data from the original space to a non-linear latent space, facilitating deep clustering.

- An example is given where deep clustering models often employ autoencoders to assist traditional clustering algorithms, such as in the DEC algorithm and the Improved Deep Embedded Clustering (IDEC) algorithm. Autoencoders are used for pre-training before clustering is performed.

- A novel DEC model, referred to as deep embedded clustering with cluster-level representation learning (DECCRL), focuses on learning global representations necessary to capture the overall data structure at the cluster level.

- Finally, deep embedded clustering models are described as being able to jointly optimize node clustering and representations. The DEC method is mentioned as a pioneer in this field, using an autoencoder to learn representation vectors and a clustering loss to obtain clusters.

These resources suggest that there are several models and methods within the realm of deep embedded clustering, each with its own approach to learning representations and performing clustering, often using deep neural networks and autoencoders as fundamental components.
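
As a rough illustration of the clustering loss that DEC-style methods use, the sketch below computes the Student's t soft assignment, the sharpened target distribution, and the KL divergence between them with NumPy. The toy embeddings, the fixed centroids, and all function names are assumptions made for illustration; a real DEC model would take `z` from a pretrained autoencoder and update both the encoder and the centroids by gradient descent.

```python
import numpy as np

def soft_assign(z, centroids, alpha=1.0):
    """Student's t soft assignment q[i, j] of embedding i to centroid j."""
    # Squared Euclidean distance between every embedding and every centroid.
    d2 = ((z[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    q = (1.0 + d2 / alpha) ** (-(alpha + 1.0) / 2.0)
    return q / q.sum(axis=1, keepdims=True)

def target_distribution(q):
    """Sharpened target p[i, j]: square q, divide by soft cluster size, renormalize."""
    w = q ** 2 / q.sum(axis=0)
    return w / w.sum(axis=1, keepdims=True)

def kl_clustering_loss(p, q):
    """KL(P || Q), the quantity minimized during the clustering phase."""
    return float((p * np.log(p / q)).sum())

# Toy "embeddings": two blobs standing in for an encoder's output (assumption).
rng = np.random.default_rng(0)
z = np.vstack([rng.normal(-2, 0.5, (50, 2)), rng.normal(2, 0.5, (50, 2))])
centroids = np.array([[-2.0, -2.0], [2.0, 2.0]])

q = soft_assign(z, centroids)
p = target_distribution(q)
loss = kl_clustering_loss(p, q)
print(q.argmax(axis=1)[:5], q.argmax(axis=1)[-5:], loss)
```

Note that the assignment depends only on distances to centroids, which is why this family counts as centroid-based clustering.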


This sounds like a mixture of experts?

ChatGPT

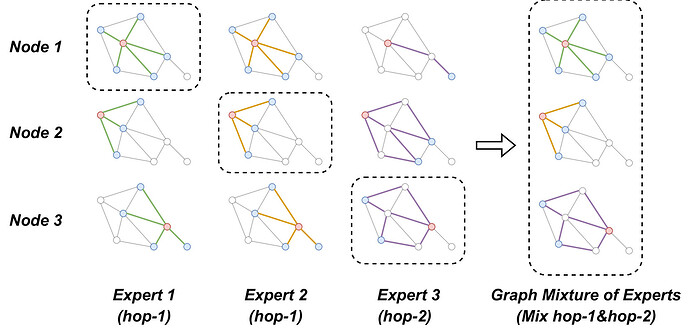

A Mixture of Experts (MoE) model and deep embedded clustering share the common theme of using multiple components to handle different parts of the data, but they are designed for different purposes.

- Mixture of Experts (MoE): This is a model architecture that involves a gating network and a set of expert networks. The gating network decides how much each expert contributes to the final output based on the input data. Each expert is trained to handle different regions of the input space, and the final prediction is a weighted combination of the outputs from all experts. MoE is used for supervised learning tasks.

- Deep Embedded Clustering: This approach is generally used in unsupervised learning, where the goal is to learn feature representations that are conducive to clustering. The models often use deep neural networks to project data into a latent space where it is easier to define clusters. Unlike MoE, deep embedded clustering doesn’t usually involve a gating mechanism to weigh the contribution of different “experts” (in this case, clusters or components of the model). Instead, the model learns a representation that naturally segments the data into clusters.

While MoE models are a form of ensemble learning with multiple specialized models (experts) operating under a gating mechanism, deep embedded clustering focuses on learning a single representation space that reflects the inherent structure of the data, aiming to segment the data into meaningful clusters without supervision.
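
To make the gating mechanism concrete, here is a minimal sketch of a soft MoE forward pass in NumPy, assuming linear experts and a linear softmax gate. The weight shapes, the random initialization, and the name `moe_forward` are illustrative assumptions, not any particular library's API.

```python
import numpy as np

def softmax(x):
    """Row-wise softmax with the usual max subtraction for stability."""
    x = x - x.max(axis=1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=1, keepdims=True)

def moe_forward(x, gate_w, expert_ws):
    """Weighted combination of expert outputs.

    x:         (n, d_in) inputs
    gate_w:    (d_in, k) gating weights, one column per expert
    expert_ws: list of k matrices, each (d_in, d_out)
    """
    gate = softmax(x @ gate_w)                           # (n, k), rows sum to 1
    outs = np.stack([x @ w for w in expert_ws], axis=1)  # (n, k, d_out)
    y = (gate[:, :, None] * outs).sum(axis=1)            # (n, d_out)
    return y, gate

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))
gate_w = rng.normal(size=(4, 3))
expert_ws = [rng.normal(size=(4, 2)) for _ in range(3)]

y, gate = moe_forward(x, gate_w, expert_ws)
print(y.shape, gate.sum(axis=1))
```

Nothing in the forward pass itself refers to labels; supervision only enters through whatever loss is applied to `y`.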


MoE doesn’t have to be supervised: the gating network can be a clustering step, and subsequent retraining can be segmented too?

ChatGPT

You’re correct that the Mixture of Experts (MoE) model does not inherently require supervision. The MoE architecture can indeed be adapted for unsupervised learning scenarios:

- Unsupervised MoE: In this setup, the gating network could function similarly to a clustering algorithm, determining which ‘expert’ should handle a given input based on the learned cluster assignments rather than labels.

- Clustering as Gating: If the gating network operates by clustering, each ‘expert’ would effectively become responsible for the data points associated with a given cluster. The experts could then further process or analyze the data points within their assigned cluster.

- Segmented Retraining: Following the initial clustering by the gating network, each expert could be retrained or fine-tuned on their respective segment of the data. This can be an iterative process, with the gating network and experts being refined over time to better model the data distribution.

In this way, the experts are specialized to different subsets of the data, determined by the gating network’s clustering, and the overall architecture can learn a form of hierarchical clustering where each cluster has a specialized model (expert) associated with it. This allows the model to capture complex structures and relationships in the data by leveraging the diversity of the experts.
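
The three points above can be sketched without any labels. In this hedged NumPy example, a small k-means acts as the hard gate and each expert is a one-component PCA (a linear autoencoder) fitted only on its own segment. The toy data, the farthest-point initialization, and the names `route`, `PCAExpert`, and `gated_reconstruct` are all assumptions for illustration, and only a single gate-then-fit pass is shown rather than the iterative refinement described.

```python
import numpy as np

def route(x, centroids):
    """Hard gate: index of the nearest centroid for every input."""
    d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def kmeans(x, k, iters=20):
    """Plain k-means with deterministic farthest-point initialization."""
    centroids = [x[0]]
    for _ in range(k - 1):
        d2 = np.min([((x - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(x[np.argmax(d2)])
    centroids = np.array(centroids)
    for _ in range(iters):
        labels = route(x, centroids)
        for j in range(k):
            if np.any(labels == j):  # an empty cluster keeps its old centroid
                centroids[j] = x[labels == j].mean(axis=0)
    return centroids

class PCAExpert:
    """Linear autoencoder fitted by PCA on one segment of the data."""
    def __init__(self, x, n_components=1):
        self.mean = x.mean(axis=0)
        _, _, vt = np.linalg.svd(x - self.mean, full_matrices=False)
        self.components = vt[:n_components]

    def reconstruct(self, x):
        code = (x - self.mean) @ self.components.T
        return code @ self.components + self.mean

def gated_reconstruct(x, centroids, experts):
    """Send each point to the expert chosen by the clustering gate."""
    labels = route(x, centroids)
    recon = np.empty_like(x)
    for j, expert in enumerate(experts):
        mask = labels == j
        recon[mask] = expert.reconstruct(x[mask])
    return recon

def mse(a, b):
    return float(((a - b) ** 2).sum(axis=1).mean())

# Toy data: a horizontal and a vertical line segment, far apart.
rng = np.random.default_rng(0)
t = rng.uniform(-2, 2, 100)
noise = rng.normal(0, 0.05, 100)
seg_a = np.column_stack([-5 + t[:50], noise[:50]])
seg_b = np.column_stack([5 + noise[50:], t[50:]])
x = np.vstack([seg_a, seg_b])

centroids = kmeans(x, k=2)                                # gate
labels = route(x, centroids)
experts = [PCAExpert(x[labels == j]) for j in range(2)]   # segmented training
gated_err = mse(x, gated_reconstruct(x, centroids, experts))
global_err = mse(x, PCAExpert(x).reconstruct(x))          # single-model baseline
print(gated_err, global_err)
```

On this toy data the per-segment experts reconstruct each segment along its own direction, which a single one-component model cannot do for both segments at once.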


Has this been implemented?

ChatGPT

The concept of unsupervised or semi-supervised Mixture of Experts (MoE) is not mainstream, but it aligns with ongoing research in areas that combine clustering and representation learning. Researchers are continuously exploring hybrid models that integrate principles from different architectures to leverage their strengths for complex tasks, including unsupervised learning.

To my knowledge as of the last update in April 2023, while there may not be a widely recognized standard implementation of a purely unsupervised MoE model that uses clustering as a gating mechanism, the idea has conceptual merit and could exist in specialized applications or as part of research that has not yet become mainstream. It would represent an interesting intersection of clustering algorithms with the MoE framework, potentially leading to novel approaches in unsupervised learning.


This is using centroid clustering, has it been done with connectivity clustering?

ChatGPT

To date, Mixture of Experts (MoE) models that use connectivity-based clustering methods, such as hierarchical clustering or minimum spanning tree clustering, have not been widely reported in mainstream machine learning literature. Most MoE models and deep embedded clustering methods employ centroid-based approaches, such as k-means or Gaussian mixtures, because they are more straightforward to integrate with the backpropagation algorithm used to train neural networks.

Connectivity-based clustering methods focus on the proximity or connectedness of data points, forming clusters based on their distance from each other, which can reveal complex structures in the data. These methods, while powerful, are computationally intensive and less straightforward to combine with gradient-based learning algorithms typically used in neural networks.

However, the concept of using connectivity-based clustering within a Mixture of Experts framework is intriguing and could potentially lead to novel algorithms that capture data relationships in a more nuanced manner. If such an approach has been explored, it would likely be within the domain of advanced research projects or specialized applications. For concrete implementations or studies, one would have to look into the latest research papers or experimental studies in machine learning, possibly at the intersection of neural network design and complex network analysis.
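
For a sense of what a connectivity-based gate could look like, the sketch below implements single-linkage clustering in NumPy by merging the shortest pairwise edges (Kruskal-style, equivalent to cutting the longest edges of a minimum spanning tree) until the requested number of components remains. Routing new inputs by the label of their nearest training point is an assumption of this sketch, not an established method, and it is not differentiable, which reflects the integration difficulty mentioned above. The concentric-ring data and all function names are illustrative.

```python
import numpy as np

def single_linkage(x, n_clusters):
    """Single-linkage labels: merge shortest edges until n_clusters components remain."""
    n = len(x)
    d = np.sqrt(((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=2))
    i, j = np.triu_indices(n, k=1)       # every unordered pair once
    order = np.argsort(d[i, j])          # edges from shortest to longest
    parent = list(range(n))              # union-find forest

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    components = n
    for e in order:
        if components == n_clusters:
            break
        ra, rb = find(int(i[e])), find(int(j[e]))
        if ra != rb:
            parent[ra] = rb
            components -= 1
    roots = np.array([find(a) for a in range(n)])
    _, labels = np.unique(roots, return_inverse=True)
    return labels

def gate_by_nearest_neighbor(x_new, x_train, labels):
    """Route each new input to the cluster of its nearest training point (assumption)."""
    d2 = ((x_new[:, None, :] - x_train[None, :, :]) ** 2).sum(axis=2)
    return labels[d2.argmin(axis=1)]

# Two concentric rings: they share a center, so centroids cannot tell them apart,
# but points within each ring are chained together by short edges.
rng = np.random.default_rng(0)
angles = np.linspace(0, 2 * np.pi, 60, endpoint=False)
ring = np.column_stack([np.cos(angles), np.sin(angles)])
x = np.vstack([1.0 * ring, 4.0 * ring]) + rng.normal(0, 0.02, (120, 2))

labels = single_linkage(x, n_clusters=2)
x_new = np.array([[0.9, 0.0], [0.0, -4.1]])
routes = gate_by_nearest_neighbor(x_new, x, labels)
print(labels[:3], labels[-3:], routes)
```

Each resulting cluster could then be given its own expert in the same way as with a centroid-based gate; the pairwise distance matrix makes this version quadratic in the number of points.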