You and I are very close together on this.

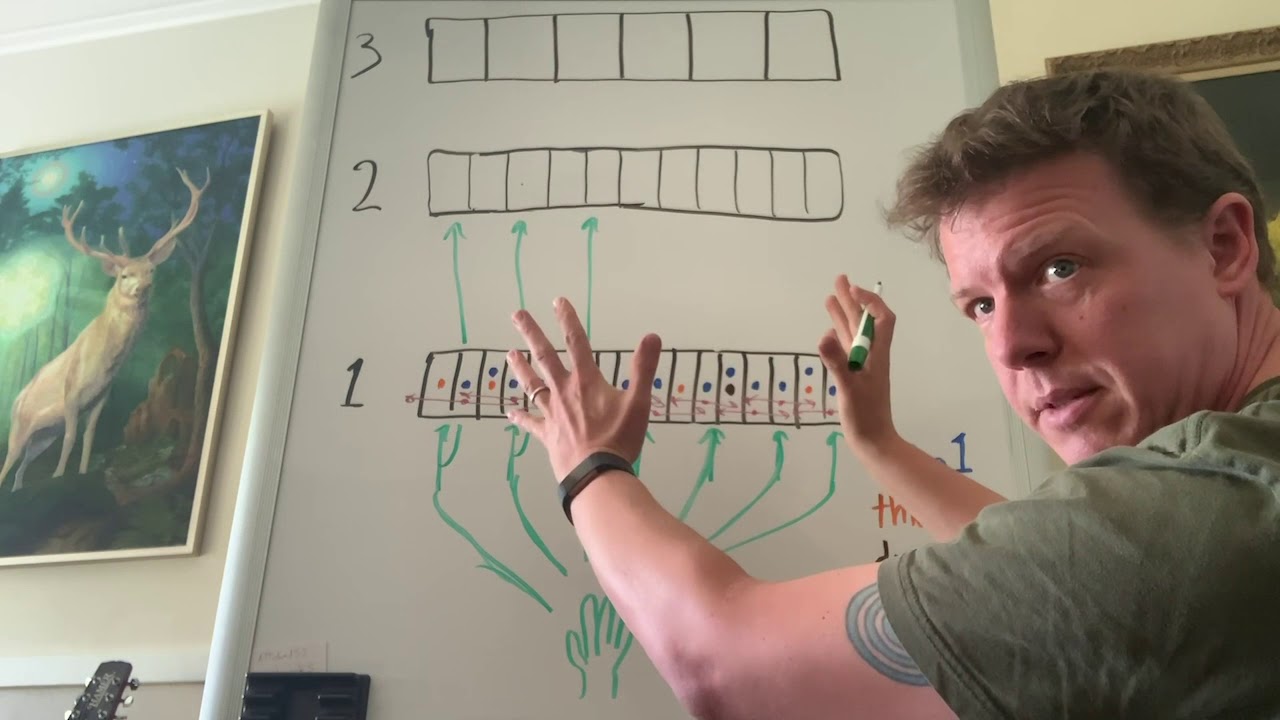

I see a lot of my own thinking in your visualization video above. I won't comment on levels 1 and 2, only on level 3 of your whiteboard sketch.

Yes, the great sea of mini-columns is always matching to a greater or lesser degree: a constant white noise of activity as the buzzing, blooming, moving world roars on around us.

We know that an SDR is like a key that fits a given (learned) lock. The thing is, random patterns that are close to what those keys learned, along with partial matches, occur all the time: there are constant “almost” hits everywhere in the sensory fields, weak depolarizations that are not enough to trigger firing by themselves.
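To make the key-and-lock picture concrete, here is a minimal Python sketch, assuming SDRs are sets of active bit indices and that a segment fires only when its overlap with a learned SDR reaches a threshold. The field size, sparsity, and threshold (`N_BITS`, `ACTIVE_BITS`, `FIRE_THRESHOLD`) are hypothetical values chosen for illustration, not figures from this post. It shows a random input producing only a tiny overlap, a near miss producing a weak depolarization, and only the learned pattern crossing the threshold.

```python
import random

# Hypothetical SDR parameters, chosen only for illustration.
N_BITS = 2048        # size of the input field
ACTIVE_BITS = 40     # active bits per SDR (about 2% sparsity)
FIRE_THRESHOLD = 15  # overlap needed to fire without help from neighbors


def random_sdr(rng):
    """Return a random SDR as a set of active bit indices."""
    return set(rng.sample(range(N_BITS), ACTIVE_BITS))


def overlap(a, b):
    """Count the active bits two SDRs share."""
    return len(a & b)


def near_miss(sdr, n_swaps, rng):
    """Swap n_swaps active bits for inactive ones: a partial match."""
    kept = rng.sample(sorted(sdr), ACTIVE_BITS - n_swaps)
    pool = [i for i in range(N_BITS) if i not in sdr]
    return set(kept) | set(rng.sample(pool, n_swaps))


def response(ov):
    """Map an overlap score to a toy cell state."""
    if ov >= FIRE_THRESHOLD:
        return "fires"
    return "depolarized" if ov > 0 else "silent"


rng = random.Random(42)
learned = random_sdr(rng)  # the "lock" a segment has learned

for label, probe in [("random", random_sdr(rng)),
                     ("partial", near_miss(learned, 30, rng)),
                     ("learned", learned)]:
    ov = overlap(learned, probe)
    print(f"{label:8s} overlap={ov:2d} -> {response(ov)}")
```

With 30 of 40 bits swapped, the partial probe keeps exactly 10 learned bits: enough to depolarize, not enough to fire on its own.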

You allude to lateral connections helping to recognize that the sensed object is part of a larger pattern.

Let's work through this. Of all the partial hits between pairs of mini-columns, some add and some cancel, and this white noise of matches essentially creates a constant low level of activation. It is met by a tonic balance of inhibitory inter-neurons that prevents all the excitatory pyramidal cells from firing at once.

But for some subset of mini-columns, they are ALL hitting on the pattern because they have learned it before. Due to the nature of the lateral connections (spaced about 300 to 500 µm apart), trios of mini-columns reinforce each other and grow stronger in a voting system. (More on this in a minute.)

Just for fun, say it takes at least 3 mutually reinforcing links (or a VERY strong local match) to be strong enough to start overriding the inhibitory inter-neurons. These reciprocal connections give these neurons an “unfair advantage” over their lesser neighbors that are not seeing something they know. This stronger ON signal pushes these neurons to drive the attached inhibitory OFF inter-neurons harder. There is still balance, but it has shifted from weak white noise distributed across all mini-columns to the much stronger hex-grid pattern that is forming; I will guess that the overall level of activity may well remain constant.
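The "at least 3 links" rule can be written down as a toy decision function. This minimal Python sketch assumes hypothetical match scores in [0, 1], a hypothetical `MATCH_LEVEL` a neighbor must reach to count as a reinforcing partner, and a hypothetical `STRONG_LOCAL` level standing in for the "VERY strong local match" case. Inhibition is modeled, as an assumption, by holding total activity to a fixed budget, in line with the guess that overall activity stays constant: when nothing beats inhibition the budget is spread thinly as background noise, and when a group wins it takes the whole budget.

```python
# Hypothetical thresholds, chosen only for illustration.
MIN_LINKS = 3        # mutually reinforcing links needed to beat inhibition
MATCH_LEVEL = 0.5    # local match a column needs to act as a partner
STRONG_LOCAL = 0.9   # a "VERY strong local match" wins on its own


def beats_inhibition(col, match, links):
    """True if col has a very strong local match or enough matching partners."""
    if match[col] >= STRONG_LOCAL:
        return True
    partners = sum(1 for other in links[col] if match[other] >= MATCH_LEVEL)
    return match[col] >= MATCH_LEVEL and partners >= MIN_LINKS


def activity(match, links, budget=1.0):
    """Split a fixed activity budget: winners take it all, else spread as noise."""
    winners = {c for c in match if beats_inhibition(c, match, links)}
    if not winners:
        return {c: budget / len(match) for c in match}
    return {c: (budget / len(winners) if c in winners else 0.0) for c in match}


# A, B, C, D are mutually linked (3 partners each); E and F are weak neighbors.
links = {
    "A": ["B", "C", "D", "E"], "B": ["A", "C", "D"], "C": ["A", "B", "D"],
    "D": ["A", "B", "C"], "E": ["A", "F"], "F": ["E"],
}
match = {"A": 0.6, "B": 0.6, "C": 0.6, "D": 0.6, "E": 0.4, "F": 0.3}
print(activity(match, links))
# {'A': 0.25, 'B': 0.25, 'C': 0.25, 'D': 0.25, 'E': 0.0, 'F': 0.0}
```

The mutually linked group of four takes the whole budget, while E, which touches the group through only one link and matches weakly, is silenced.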

As these strongly excited mini-columns are active, just as you suggested in your video, they pull any mini-columns that are on the fence into the pattern. This spreads across the extent of the perceiving mini-columns like wildfire sweeping through dry timber. As these mutually resonate, they trigger the adjacent inhibitory inter-neurons, suppressing the weakly responding background noise and leaving only the strongly resonating hex standing alone to signal the grid pattern to distant maps and back down the hierarchy. These are the mini-columns that have learned the pattern we are perceiving now.
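The wildfire spread and the suppression that follows can be sketched as a recruitment loop on a hex lattice. In this minimal Python sketch, mini-columns sit at axial hex coordinates, and each one's lateral partners are the six sites one `SPACING` step away (a hypothetical stand-in for the 300 to 500 µm lateral reach). A column on the fence (match at least `FENCE`) joins once `MIN_SUPPORT` of its partners are active; the loop repeats until nothing changes, and everything not recruited is treated as suppressed. All three constants and the example match values are assumptions for illustration.

```python
# Axial hex directions; lateral partners lie one SPACING step away in each.
HEX_DIRS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]
SPACING = 3      # hypothetical lateral reach, in mini-column units
FENCE = 0.4      # hypothetical weakest match that can still be recruited
MIN_SUPPORT = 2  # hypothetical number of active partners needed to join


def lateral_partners(q, r):
    """The six mini-columns reached by fixed-length lateral connections."""
    return [(q + dq * SPACING, r + dr * SPACING) for dq, dr in HEX_DIRS]


def spread(match, seeds):
    """Recruit on-the-fence columns until stable; the rest are suppressed."""
    active = set(seeds)
    changed = True
    while changed:
        changed = False
        for col, m in match.items():
            if col in active or m < FENCE:
                continue
            support = sum(1 for p in lateral_partners(*col) if p in active)
            if support >= MIN_SUPPORT:
                active.add(col)
                changed = True
    return active


lattice = [(3 * i, 3 * j) for i in range(-2, 3) for j in range(-2, 3)]
match = {col: 0.5 for col in lattice}                   # on-the-fence grid sites
match.update({(1, 1): 0.5, (4, 2): 0.5, (-2, 5): 0.5})  # off-grid noise
match[(9, 0)] = 0.2                                     # grid-aligned but too weak
seeds = [(0, 0), (3, 0), (0, 3)]                        # a resonating trio
active = spread(match, seeds)
print(len(active), "of", len(match), "mini-columns remain active")
```

Starting from one resonating trio, the whole grid patch is recruited, while the off-grid noise (no active partners at the right spacing) and the too-weak grid site are left for the inhibitory inter-neurons to suppress.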

Back to that voting system: note that the columns are active at the alpha rate, about 10 Hz. Many different lines of evidence point to that being the basic column processing rate.

I posit that the voting that selects the winners in the voting/suppression system runs at the gamma rate, about 40 Hz. This allows 4 rounds of reinforcement/suppression per alpha cycle. The strong get stronger, and the weakly responding get voted off the island. (Lame, but I had to say it!)
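The timing arithmetic is simple: a 10 Hz alpha cycle lasts 100 ms and a 40 Hz gamma cycle lasts 25 ms, so one alpha cycle holds 4 gamma rounds. The following minimal Python sketch runs that many rounds of a hypothetical vote: in each round, inhibition is set to the mean activity, columns below it are voted off, and the survivors' activity is squared (so the strong get stronger) and renormalized to a fixed budget. The squaring rule and the starting activities are assumptions for illustration, not a claim about the actual cortical rule.

```python
ALPHA_HZ = 10
GAMMA_HZ = 40
ROUNDS = GAMMA_HZ // ALPHA_HZ  # 4 gamma rounds per alpha cycle


def gamma_round(act, budget=1.0):
    """One hypothetical reinforcement/suppression round."""
    level = sum(act.values()) / len(act)  # inhibition tracks mean activity
    # Survivors are squared: the strong get stronger relative to the rest.
    boosted = {c: a * a for c, a in act.items() if a >= level}
    total = sum(boosted.values())
    if total == 0:
        return dict(act)  # nothing active: leave the field unchanged
    return {c: boosted.get(c, 0.0) / total * budget for c in act}


act = {"A": 0.30, "B": 0.28, "C": 0.22, "D": 0.12, "E": 0.08}
for r in range(1, ROUNDS + 1):
    act = gamma_round(act)
    survivors = sorted(c for c, a in act.items() if a > 0)
    print(f"gamma round {r}: survivors {survivors}")
```

With these starting values, D and E are voted off in the first round and C in the third, leaving A and B to carry the whole budget at the end of the alpha cycle.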

The cool thing here is that the output is exactly the hex pattern observed in nature: a stable output pattern that signals some recognized input pattern. Remember, this is not a goal; it is a happy side effect of the fixed-size lateral connections.

A huge and very important detail: this is the long-sought pattern-to-pattern behavior that the deep learning people have been lording over HTM for so long. Some input pattern/sequence is turned into a unique and repeatable output pattern. This is formed without back-prop, without a teacher, and with a small number of presentations. We can do it too!

This still allows sequential recognition to function; it is just a whole patch of mini-columns blinking along in synchronization, stepping through a 2D pattern, rather than isolated twinkle lights. All yoked together like a team of horses.

Note that even as the input field steps through its sequence of this learned 2D pattern, the output hex-grid code stays stable, because this same set of individual mini-columns is stepping through the pattern it has learned.

Over time they will add slight variations, such as different viewing angles, as the central pattern enlists other nearby mini-columns to sing along when the main pattern is playing.

This is the mechanism I propose that binds sequential saccades into perceived visual objects. Since the entire cortex depends on motion for recognition, this should work as the highest level in all sensory hierarchies.

I am working on a post to show this now. I still have several images to make and a more detailed explanation to write so I can convey this in an approachable way.