Hi Guys,

I’m trying to understand the Learning Relative Landmark Locations Poster but some of the things are not clear to me. So I’d like to ask couple of questions - maybe someone here has the answers.

1, How exactly did this test work - I.e. currently I imagine there was a feature sensor moving randomly across the 16 locations where the features were encoded as SDR and fed to the input layer (and the grid cell layer was driven by both “input layer” and “motor” layer somehow). Correct or not?

2, Grid module drivers - in the section “Network model” there is an overview of the network model. The only thing that is not clear to me is how the grid module is driven. I.e. I see green arrows coming from “input layer” and from “motor” but I’d like to understand how exactly it’s implemented. I’m imagining that what this means is that for a proximal dendrite of a cell in the grid layer half of the synapses have inputs coming from “input layer” and the other half of the synapses has input coming from the “motor” layer. Is this correct or how exactly does this work?

3, Environments and landmarks - In the “Results” section there is this sentence:

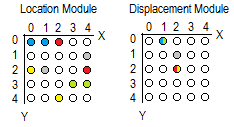

Network trained on 1000 environments, each with 16 locations containing random landmarks from a pool of 5 unique landmarks

On the picture in the poster I see that the environment has 4x4 grid, each field labeled with a letter. But what exactly is meant by random landmark?

4, Displacement layer - could you explain how exactly the displacement layer works (best on an example of a room used in this poster). Here too, if I try to imagine a neuron and it’s proximal dendrite and how the grid cells are projecting onto this dendrite - I’m lost.

I think I rougly get the point of this poster but as I would like to implement this in my own code and play with it a bit, I would like to understand the details. But then again, maybe I don’t understand it at all…

Thank you in advance for your time.

MH