Hello! I’m doing my CS bachelor thesis this year and I really wanted to do something on HTM. However, differently from the thread from 2 days ago, I’m not looking for a ambitious topic to work, since I’m a bit late for it. My current idea is to evaluate HTM on branch prediction (https://en.wikipedia.org/wiki/Branch_predictor). What do you think about this topic? Which other same level topics would you recommend me to look into? Thanks a lot.

I think it looks interesting. What kind of state information is typically accessible from a point of prediction? I ask because TM will give you the ability to find temporal patterns across the data accessible here. I imagine you can treat one step through a computation like any other step. The hard thing will be figuring out what state data will contribute to a prediction.

First thing I’ve thought is using all instruction addresses to feed the model. Then, when a branch instruction in reached, it would ask the model for the next address. One modification could be feeding the model only with addresses involved on branching to make it more efficient. Other way it could be done is using history data from each branch instruction.

I think I can find more inspiration looking into papers like this one: http://cseweb.ucsd.edu/~atsmith/rnn_branch.pdf

Let me present this in a different way. If you were the a human analyst and you had access to all the data in one of these time steps, how would you attempt to extract meaning from the present state? If you cannot answer this question, it will be very hard to solve this problem with a brute force “feed it all the data” approach with HTM.

I think there is more to it than that. If programs have some repetitive characteristics that are not obvious to a human analyst, they could still be detectable by an HTM system. In a way a program full of branches could be compared to heating data in a gym.

I’ve read a good answer here, so I’ll cite it.

(It makes an analogy with a train in a railroad junction)

"So how would you strategically guess to minimize the number of times that the train must back up and go down the other path? You look at the past history! If the train goes left 99% of the time, then you guess left. If it alternates, then you alternate your guesses. If it goes one way every 3 times, you guess the same…

In other words, you try to identify a pattern and follow it. This is more or less how branch predictors work.

Most applications have well-behaved branches. So modern branch predictors will typically achieve >90% hit rates. But when faced with unpredictable branches with no recognizable patterns, branch predictors are virtually useless."

That’s what I thought, Falco, I’m curious on the patterns an HTM system can spot with a lot of data, even though it might not be practical to implement.

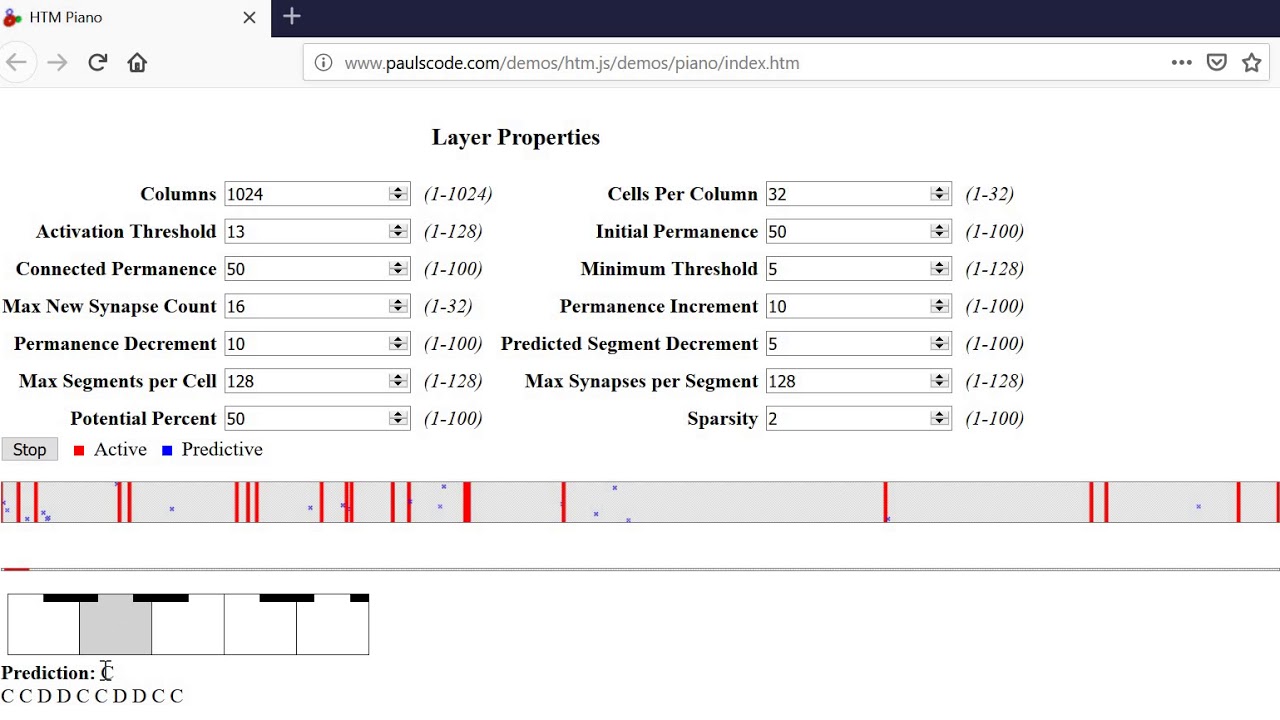

Is this a proper visualization of the problem space?

(this is something completely different isn’t it?)

I think a decision tree could be used to solve it. I’ll try to do an example using history data for branch instructions:

Consider a branch that follows the pattern 3 x “jump to a given address”, 1 x “do not jump (i.e. goes to the following address)” for a considerable amount of time. For simplicity, let’s represent it as “1110”. Let’s also consider we store the history from the last 8 times this branch executed and that we want to use this data to predict if the jump will occur. Then, a decision tree should map the following states:

11101110 -> 1

11011101 -> 1

10111011 -> 1

01110111 -> 0

Based on some HTM School videos I’ve watched (btw, you’re doing a fantastic job!) and rock, paper, scissors results, I think a Temporal Memory would learn this sequence very quickly, and should also adapt quicky when the pattern changes.

@Daniel_MK I get your point of using a TM to learn the beaching pattern. Sounds interesting. and might work! I believe AMD is doing something like this but with a regular perceptron. How to execute TM in a few cycles (or even one) might prove itself to be quite a challenge (an taking up a lot of area).

May you keep me posted of your progress? It sounds super interesting and I’m overly hyped now!

A potential problem have just came up in my mind. TM doesn’t perform too well when there is only 2 possible input/outputs. The permanence_increment and permanence_decrement simply cancel each other out if both outcome are equally likely. Which might not effect most cases. But might be a problem when dealing with a branch switching constantly. (Ex: Teach a TM to learn the XOR problem and… it doesn’t work without sending a bunch of 000, 101s into the TM beforehand.)

So a sequence of 0111011101110111 can be learned, but 0101010101 or 001100110011 might need some work.

Yeah, I was surprised to read the following on Wikipedia: “Most of the state-of-the-art branch predictors are using a perceptron predictor (see Intel’s “Championship Branch Prediction Competition”). Intel already implements this idea in one of the IA-64’s simulators (2003). The AMD Ryzen processor includes a neural branch predictor.” (https://en.wikipedia.org/wiki/Branch_predictor#Neural_branch_prediction).

I still don’t know the inner workings of a TM, but I’m aware on the problem of implementing it to be quick enough to be valuable as a branch predictor. That’s not a problem I’m willing to tackle, though. If it works well, luckily somebody can solve it one day. One solution I thought about is to look ahead on instructions and predict the branch while the processor is working on non-branch instructions. A cheaper predictor could also be used in parallel to address cases in which the TM doesn’t have the necessary time to compute. Well, just some ideas.

I will definitely keep you updated on my progress! I’m glad to know you’re interested.

I guess that’s an important problem I need to consider, thank you for pointing it out. I will try to elaborate a better example with real code and analyse both local branch history (same as the last example) and global history (my first idea, just feeding the TM with all instructions involved in branching). I’ll post it as soon as I manage to do it in the right way. Stay tuned haha

These sequences should all be easy for HTM to learn. For simplicity, I’m assuming the encoding for 0 does not share semantics with the encoding for 1. I’m also assuming the parameters are set such that a transition can be learned in a single shot, and that permanence decrement isn’t so high that transitions are forgotten in a single shot. There is also a subtle implementation detail about whether you allow predictions in the same timestep that a new transition is learned or in the next timestep, but doesn’t change the analysis much.

0111011101110111

- Minicolumns for 0 burst, and cells for 0’ are selected

- Minicolumns for 1 burst, and cells for 1’ are selected, connecting to 0’

- Minicolumns for 1 burst, and cells for 1’’ are selected, connecting to 1’

- Minicolumns for 1 burst, and cells for 1’‘’ are selected, connecting to 1’‘. 1’’ is predicted

- Minicolumns for 0 burst, and cells for 0’’ are selected, connecting to 1’‘’. 1’ is predicted

- Pattern is now learned. Most future inputs will be correctly predicted, with periodic single timesteps that burst (due to the “repeating inputs” problem that HTM hasn’t yet solved). These will space out further over time

0101010101

- Minicolumns for 0 burst, and cells for 0’ are selected

- Minicolumns for 1 burst, and cells for 1’ are selected, connecting to 0’

- Minicolumns for 0 burst, and cells for 0’’ are selected, connecting to 1’. 1’ is predicted

- Pattern is now learned (with the “repeating inputs” problem)

001100110011

- Minicolumns for 0 burst, and cells for 0’ are selected

- Minicolumns for 0 burst, and cells for 0’’ are selected, connecting to 0’

- Minicolumns for 1 burst, and cells for 1’ are selected, connecting to 0’’

- Minicolumns for 1 burst, and cells for 1’’ are selected, connecting to 1’

- Minicolumns for 0 burst, and cells for 0’‘’ are selected. 0’’ is predicted

- Pattern is now learned (with the “repeating inputs” problem)

What is the a priori knowledge regarding your example that is using the 1 and 0 as inputs to HTM? Is there any theory or even just a hypothesis that the history of 1 and 0 is enough to tell the future of the branching decisions? By intuition I say yes, the reason being core code in an operating system repeat themselves (this is one thoery). But the most important question is what would be the theoretical probability that a pattern will emerge? How about in practice, does a pattern emerge after a few minutes, hours, days, or etc? This a priori knowledge even when it’s just a theory/hypothesis is very important so that one can take advantage of using the HTM. TM will only learn a pattern, if there is no pattern or if its capacity is not enough to “wait” for a pattern to emerge, then it will not be very useful.

Yes, TM can predict the sequence 001100110011… given the right connections. But in reality TM can’t learn them. Consider this example teaching a TM the sequence 00110011…

Ideally TM should learn and predict the sequence. But if you run it. It predicts nothing constantly.

None

None

None

None

None

None

None

None

None

None

None

None

None

None

None

None

None

None

None

@rhyolight Is the a bug in HTM theory? Or is this not a problem?

Edit: Hang on… My code is behaving weirdly. Maybe a bug somewhere.

In case it helps convince you, here is a video of HTM learning and predicting this sequence

Note that there is a small bug in my HTM implementation that I haven’t yet gone back to correct yet, so behavior in NuPIC will be a bit different after several repetitions (related to the “repeating inputs” issue that I mentioned). I’ll do a recording of this pattern with NuPIC later for comparison when I have a chance.

@Paul_Lamb I’m glad to know it can learn these patterns, and even more so if they can be learned as quickly as you stated on your first post. Thank you for the explanation and demonstration!

@Jose_Cueto Right, this is an important question. I didn’t do a proper research to back what I’m about to answer, but I’ll give some examples to illustrate my point.

There are a lot of ways code can be regular and show a defined behavior for a period of time. In some cases, a pattern is present on the problem itself, but in others, the way the solution is coded lead to suboptimal instructions that follow a pattern, specially when performance is not the focus, which holds true for most of the code produced today.

Example 1: a problem that carries a pattern.

This problem involves painting a table of days with colors that help to identify lines and weekends. See the image below.

This is the C code I’d write to solve it:

Note how the if statements can be easily predicted after some time. If this were repeated for some more months rather than just January (the entire year or more), learning the patterns would become more useful.

Also, note that these patterns can be learned looking only for the history of branches separately, i.e. a local analysis. Of course, this is not always the case.

Example 2: a problem without patterns.

This problem is about counting the number of prime numbers below a certain value. Read the code below. It’s not the most optimized code nor the most readable.

Even though prime numbers are random, there is a pattern in the if statement on line 9. This if statement will evaluate to true only if the break statement on line 7 is not reached.

This time, a global history of instructions is necessary to spot the pattern.

I’ve explored the machine code to show it. See the image below. Only jump instructions and instructions right after jump instructions are shown.

* -> non-jump instruction

@ -> jump instruction without condition

# -> jump instruction with condition (a branch)

Note that the branch instruction highlighted in blue can use previous instructions to predict it’s behaviour after number 4.

If this code kept going to values greater than 19, more and more instructions would separate the patterns pointed in the image, but it would still be there.

What are your thoughts on this analysis? Does anyone see any fault on my reasoning? Next step is testing it.

Something about this statement is bothering me a bit.

HTM will learn a pattern if it has actually seen it. It can’t predict any pattern it has never seen.

If a pattern is novel the HTM system will burst and learn the new branching.

The problem that you are describing - a mostly random sequence - will mostly trigger constant bursts of learning, or in other words, unending anomaly detection. You would get brief periods of “no bursting” that would correspond to a learned snippet.

No worries, it was a question that I was curious about the answer, I was assuming there is some theory about branch prediction you are try to take advantage of using HTM which is a good way to start a problem. Nice illustration by the way and thanks for providing your hypothesis and examples. The gathering and experimenting of the hypotheses are one of the most important parts in the process of finding solutions to problems that are in a highly stochastic and partially observable environments.

In actual cpu execution, heaps and heaps of unrelated programs being executed by the cpu, you probably know this already. For example imagine the permutation of unrelated programs’ instructions including the ones running in the kernel mode are being; context switched non-deterministically, processor threads run in parallel (temporal sequence of inst can be lost here). While the inputs based on the example given is a branching history, these branching code don’t simply form a pattern due to again the nature of the environment or simply put their fate highly depends on the previous sequence of instructions’ sequence. Using only the branching history is like hoping for a pattern will emerge and hoping it will stay for a long time enough to be useful on the next branch prediction, this is because the TM remembers and forgets what it learned based on the inputs it has seen so far.

In some existing implementations/research such as this for example,(https://www.cs.utexas.edu/~lin/papers/hpca01.pdf) they have identified hypotheses that they can take advantage of using a perceptron. For example linear separability of functions which a perceptron can model and its performance increase when number of inputs get larger. The perceptron in this case learns correlations between long histories of branching decision history. However these correlations do not simply mean that they are consistent sequences that an HTM system is happy to store and recall in the future. This could be some invisible (from the human eyes) function learned that doesn’t necessarily generate an observable sequence.

Sorry to sound pessimistic but I think that in order to use the TM at some level of success, the problem or the AI environment must be examined first and conceptually evaluate if a TM is capable of performing in this environment. The TM loves consistent sequences in inputs and gets bored and updates its memory when it finds something more interesting sequence. I’m not discouraging you by the way, this is an encouragement ![]() .

.

One suggestion would be to use a different scheme of inputs, for example provide more instruction context rather than just branching history, or keep and encode chunks of previous instruction histories before the branchings. Good luck with the hardware budget though it will be another challenge in the design.

@Bitking sure, I guess I wasn’t so clear on this statement. I was trying to say that the described behavior of the if statement would reflect on the machine code, and the patterns in this machine code can then be learned over time. Even though prime numbers are random, the process of finding them present some patterns, as observed on the image. That’s what I’m willing to exploit for branch prediction.

@Jose_Cueto thank you for the insights. Maybe I’ll have to make some strong assumptions about the environment and hardware. For example, the TM needs to be aware of the instructions block it’s working with. Unrelated instructions from different programs being switched non-deterministically would really ruin any global analysis if treated as one single thing.

The goal is mostly to exploit patterns when they actually exist. Then, some kinds of applications would benefit a lot more than others. Benchmarks can show where a TM can be more valuable.

The hypotheses for the perceptron is really interesting. I guess this will be one of my most important readings, thanks.

Sure, you made some very good observations, I really appreciate your contribution.

This approach means storing encoded data from registers and main memory, right? Because the instructions themselves don’t really change, so they don’t provide any information. However, this kind of contextual data doesn’t seem to help on a TM. I don’t know if I understood what you’re suggesting.

Hardware will probably be a little bit overlooked in this work. I know it’s an important problem. Actually, so important that it deserves its own work haha.

Generally speaking I was saying to add more context to possibly generate more patterns in the inputs (given hypotheses) rather than just the branching history table. Opcodes don’t change but when coupled with operands they form different combinations which can form patterns eventually. I was suggesting specifically to perhaps use the opcode/operand combination (if hw permits) with the branching history to add more context.