In a nutshell, intelligence solves two fundamental problems: pattern identification and classification of a pattern's utility (reward), as in flee, eat or fight. There are many smart structures built on top of those, to be considered later.

Identification has a purely categorical nature, while [multi-class] classification is essentially numerical.

Now, why is neural messaging modeled as only one of the two: numerical (optimization, BP) or categorical (trees? and more)?

Spike trains (especially sustained ones) are able to implement both protocols perfectly, so why the attempts to push categoricals and numericals through gradients together?

There are two distinct flows of messaging, so why cram them into a single manifold/bus/wire? It feels like trying to lay out a complex PCB on a single layer… not smart…

Has anybody considered the duality of the neural code described here?

Evolution can do that too, it just takes a long time. Evolution can do just about anything, if you wait long enough.

Intelligence solves one problem: time. The ability to learn new sensory input patterns and new motor output patterns at speed, within a single lifetime, not over multiple generations as evolution would require.

It sounds a bit odd to say ReLU neural networks have dot-product eyes, but then it is rather odd that some animals have compound eyes.

A ReLU network is layer after layer of self-selecting dot products.

It is well known that compositions of dot products can be simplified down to one dot product, but that kind of hides some aspects of the exponentiation and filtering you get from layering linear systems. And don’t forget that exponentiation can cause some things to fade away while amplifying other things.

Really though you are just dealing with filtering, exponentiation, eigenvectors and eigenvalues, and switching decisions collapsing to a final matrix (square if all layers share one width) mapping the input vector to a ReLU neural network output vector.
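To make the "collapsing to a final matrix" point concrete, here is a minimal pure-Python sketch. The weights, the input and the helper names (`relu_net`, `effective_matrix`) are made up for illustration and are not taken from the linked blog post. For one particular input, each ReLU acts as an on/off switch, so the whole net reduces to a single matrix that is valid for that input (and for any other input with the same switch pattern).

```python
def matvec(m, v):
    """Matrix-vector product: one dot product per row."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Plain matrix-matrix product."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def relu_net(weights, x):
    """Forward pass with ReLU after every layer except the last."""
    for i, w in enumerate(weights):
        x = matvec(w, x)
        if i < len(weights) - 1:
            x = [max(0.0, v) for v in x]
    return x

def effective_matrix(weights, x):
    """Collapse the net into one matrix for this particular input."""
    eff = None
    for i, w in enumerate(weights):
        eff = w if eff is None else matmul(w, eff)
        x = matvec(w, x)
        if i < len(weights) - 1:
            # ReLU as a switch: units that are off contribute a zero row
            eff = [row if v > 0 else [0.0] * len(row) for row, v in zip(eff, x)]
            x = [max(0.0, v) for v in x]
    return eff

# Illustrative two-layer net (assumed values)
W = [[[1.0, -1.0], [0.5, 2.0]],
     [[1.0, 1.0], [-1.0, 2.0]]]
X = [1.0, 2.0]

direct = relu_net(W, X)
collapsed = matvec(effective_matrix(W, X), X)
```

Here `direct` and `collapsed` agree; a different input that flips a switch would get a different effective matrix.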

And then you have neural network researchers going around complaining they don’t understand the “simplicity bias” in neural networks where the nets pick out simple patterns that dot-product filtering is sensitive to.

https://ai462qqq.blogspot.com/2023/04/relu-as-switch.html

Like you have these guys here not quite…

https://www.youtube.com/live/HOjpwBqFDrI?feature=share

Thank you for the feedback. It looks like I did not get the question across properly.

I’ll try to be more specific about “two distinct flows of messaging”.

How about this video:

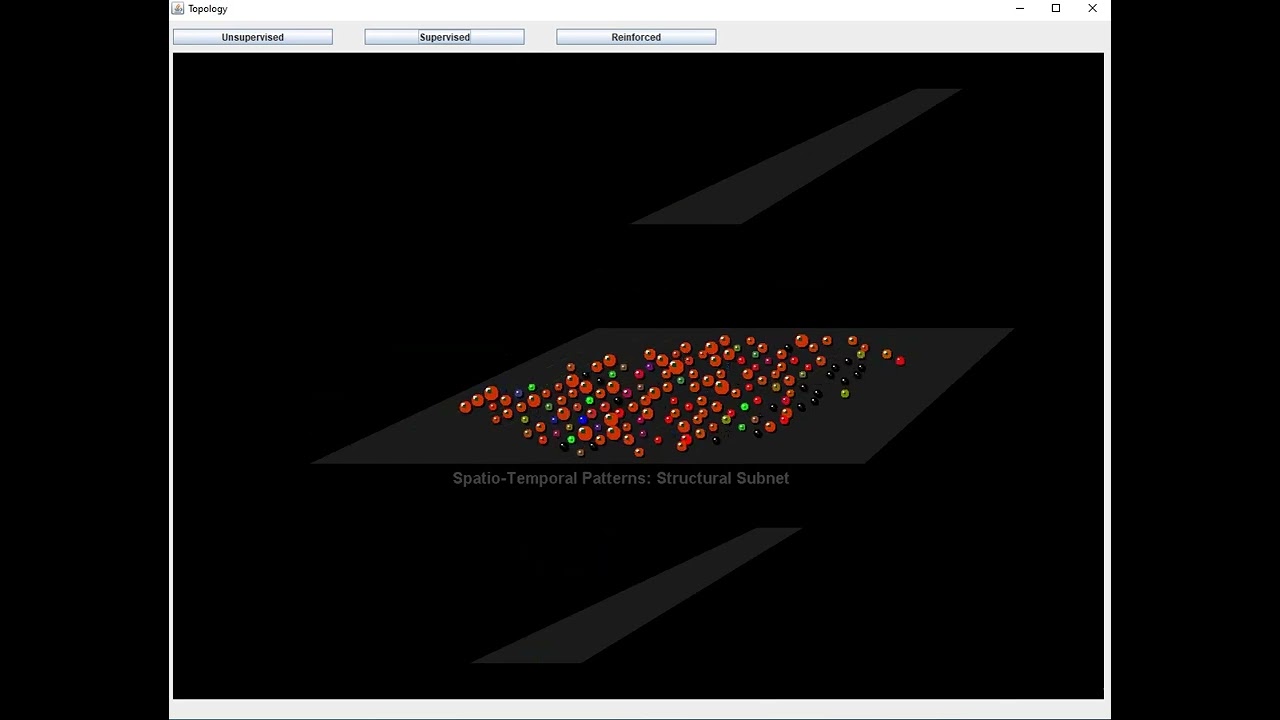

Separating the flows removes the boundaries between the unsupervised, supervised and reinforcement learning paradigms. Could a modified HTM go supervised?

Welcome,

You have some interesting questions! You touch on areas of active research and there are quite a few different hypotheses about this stuff.

One reason for confusion is that different parts of the brain are doing different things. For a brief primer I recommend the article: “What are the computations of the cerebellum, the basal ganglia and the cerebral cortex?” by K. Doya, 1999, link to free copy: http://gashler.com/mike/courses/nn/r3/doya1999.pdf

Numenta and their “HTM” theory describe the basic principles of the neural code.

Here are some good references on the topic:

- “Properties of Sparse Distributed Representations and their Application to Hierarchical Temporal Memory”
  Subutai Ahmad and Jeff Hawkins (2015)
  Link: https://doi.org/10.48550/arXiv.1503.07469
  This article formally introduces the SDR concept and the related equations.

- “HTM School”
  Matt Taylor (2016)
  Link: https://www.youtube.com/playlist?list=PL3yXMgtrZmDqhsFQzwUC9V8MeeVOQ7eZ9
  A good introduction to computational neuroscience; covers this material in layman's terms.

- “The Representation of Information in the Brain”
  Link: https://youtu.be/AWRheJZ_m9A
  My own very silly explanation of this topic.

One hypothesis about the cerebral cortex is that the neuron activity encodes two things: the categorical information and the level of attention that you should pay to that information. The literal information is encoded by the neurons that spike at least once, and the attention signal is encoded by the number of additional spikes in the spike train. In this scheme, the things that you’re paying attention to are a subset of all the things you’re seeing.
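As a toy illustration of that scheme (not a model of real cortex), the sketch below splits per-neuron spike counts into the two signals. The function name `decode` and the example counts are assumptions made up for this post.

```python
def decode(spike_counts):
    """Split per-neuron spike counts into (category, attention).

    category:  neurons that spiked at least once (the literal information)
    attention: extra spikes beyond the first, for neurons that have any
    """
    category = {n for n, c in spike_counts.items() if c >= 1}
    attention = {n: c - 1 for n, c in spike_counts.items() if c > 1}
    return category, attention

# Hypothetical spike counts within one time window
counts = {"a": 0, "b": 1, "c": 3, "d": 1, "e": 2}
category, attention = decode(counts)

# The attended neurons are always a subset of the active ones
attended_is_subset = set(attention) <= category
```

With these counts, neurons b, c, d and e carry the category, while only c and e carry an attention signal.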

We discussed the details of this hypothesis in the thread: L5tt cells are attentional (paper summaries)

I hope this helps!

Thank you!

You have some interesting questions!

I also have some interesting answers, complete blasphemy though.

But those parts serve the same main purpose and employ the same protocol. Maybe some generalization could help. I’m intentionally trying to stay concise and to refer only to absolutely necessary links.

So what I’m trying to get to:

The backbone of the NN comprises neurons with two activation functions, two sets of inputs and [kind of] two sets of outputs. Symbolic-categorical inputs [dendrites] perform a logical AND comparison, and only if the input pattern[s] has a specific signature does the neuron “open up” and feed its numeric [attention/reward/class attribution] inputs into a classic activation function. An opened-up neuron sends its own symbolic signature onward. Symbolic and numeric outputs are expressed as spike trains, which are either matched by symbolic nodes or summed by attention/reward nodes.

That process is illustrated by the crude video I posted above.
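For readers who prefer code to video, the gated neuron described above could be sketched roughly as follows. This is a minimal illustration under assumptions, not the code from the repo: the class name `GatedNeuron`, the sigmoid as the "classic activation function" and the example symbols are all hypothetical, and spike trains are abstracted away into sets of symbols and lists of numbers.

```python
import math

class GatedNeuron:
    """Toy neuron with a categorical AND gate in front of a numeric activation."""

    def __init__(self, signature, weights, emits):
        self.signature = frozenset(signature)  # symbols that must all be present
        self.weights = weights                 # weights for the numeric inputs
        self.emits = emits                     # symbol sent onward when open

    def step(self, symbols, numeric):
        # Categorical path: logical AND over the required symbols
        if not self.signature <= set(symbols):
            return None, 0.0                   # gate closed, nothing is sent
        # Numeric path: weighted sum through a sigmoid (an assumed choice)
        z = sum(w * x for w, x in zip(self.weights, numeric))
        return self.emits, 1.0 / (1.0 + math.exp(-z))

neuron = GatedNeuron({"edge", "red"}, [1.0, -1.0], "red-edge")

closed = neuron.step({"edge"}, [1.0, 1.0])                  # signature incomplete
opened = neuron.step({"edge", "red", "noise"}, [2.0, 2.0])  # signature matched
```

The closed neuron emits no symbol and no numeric value; the opened one emits its own symbol plus the activation of its numeric inputs.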

That architecture seems to natively address continual learning, explainability, sparsity and multimodality, and to bridge the stochastic with the symbolic.

Here is a link to a video and a GitHub repo with a naive implementation:

It continually classifies and clusters a few [hyperparam] irregularly sampled sequences [hyper], which I believe is not a trivial task.

Would be interested to hear [hopefully] concise opinions and reviews. Thank you all!

Why complicate needlessly? One type wired in different ways to provide numerous functions, beyond the two you are calling out.

How do you wire a node to behave either as an LIF or as an AND gate?

Gated summation is a must. There is [scarce] literature suggesting dendrites implement AND switches. So if an NN constructs a layered topology from AND gates, depending on the data, it solves structural plasticity natively. That structural subnet compresses/memorizes input patterns (hierarchically, continually and unsupervised :-) ).

Add leaky class/reward attribution to the same structural nodes and you get the classification mechanics, as synaptic plasticity.

And it works perfectly in experiments. I’m building (training) LLMs on commodity PCs (OK, 128 GB RAM) at a rate of 1 billion parameters (100 million nodes) an hour. My “models” classify and generate (not on a conscious level: not enough resources).

Natively explainable, continually and locally trained, stable to drifts, knowledge transferable, you name it. Funny, but the nets are natively curious: they get bored processing the same input.

There are possible substructures to enhance that simplified architecture, but generally there are two types of info, numerical (statistical) and categorical, defining patterns-entities. Thus, two types of nodes-processors; there could be some more. We know there are 100+ neurotransmitters/modulators employed in a natural brain. And I know how

Actually, AND gates are the basic mechanism of HTM. We need to see coincident activation of synapses along a short segment of dendrite to trigger either firing or priming.

This then feeds lateral voting, as described in the TBT paper.
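A heavily simplified sketch of that coincidence detection, for illustration only: a segment counts as active when enough of its synapses see active inputs at once. The function names, the single shared threshold and the state labels are assumptions of this sketch, not Numenta's API, and permanences, learning and lateral voting are left out.

```python
def segment_active(synapses, active_inputs, threshold):
    """A dendritic segment fires when enough synapses are active together."""
    return len(synapses & active_inputs) >= threshold

def cell_state(proximal, distal_segments, active_inputs, threshold):
    """Proximal coincidence fires the cell; distal coincidence only primes it."""
    if segment_active(proximal, active_inputs, threshold):
        return "firing"
    if any(segment_active(s, active_inputs, threshold) for s in distal_segments):
        return "primed"
    return "idle"

# Hypothetical wiring: input ids are plain integers
proximal = {1, 2, 3, 4}
distal = [{10, 11, 12}, {20, 21, 22}]

fires = cell_state(proximal, distal, {1, 2, 3}, threshold=3)
primes = cell_state(proximal, distal, {1, 20, 21, 22}, threshold=3)
idles = cell_state(proximal, distal, {1, 10, 20}, threshold=3)
```

Setting the threshold equal to the segment size turns each segment into a strict AND gate; a lower threshold tolerates missing inputs.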

But HTM does not do supervised or reinforcement learning, does it?

The learning is strictly based on novelty.

It is inherently unguided learning.

HTM should be thought of as a component in a larger system.

Respectfully, the duality of neural processors (as described) is doing just great as the main and only component of a “larger system”. It is inherently a platform implementing the unguided as well as the supervised (guided) and reinforcement paradigms. The POC implementation is ~400 lines of [naive] code (bare minimum):

The problem is that I’m an “indie researcher” (amateur) and after satisfying my curiosity I have no use for the architecture.

Thanks for the conversation! Best!

Why even start a thread if you don’t want to (or can’t?) explain anything?

There is no Readme or code in your repo either.

Why be so angry? The code is here: GitHub - MasterAlgo/Simply-Spiking file: [TheCurse.zip]

The logic is in the /src/workers folder … everything else is mostly telemetry.

ContinualNeuromorphicTrainerInferer.java is kind of self-explanatory.

Peace.

Sorry, I am not used to Java.

Anyway, an explanation and pseudocode should be in the Readme.

I only care about the unsupervised part. Where is the novelty in how you treat spike trains?

What are these two sets of inputs? Do they come from different areas, or synapse on different parts of a neuron?

Connections are sparse; if all the necessary trains arrive at an AND comparator, it gets activated. If a new input pattern arrives and the NN has no node to represent it, it takes an available one from the pool and connects it accordingly. Every pattern has a corresponding node; random [rare] nodes get destroyed and returned to the pool.

BTW, this way the net supports both a distributed (but associated) set of nodes to represent an entity and a single node uniting that set: your favorite Grandmother Cell.

Done on many layers; every layer has its own pool. Something like that. Too lazy to write pseudocode or a Readme. Best!
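Since no pseudocode was given, here is one possible reading of the pool mechanism described above, as a rough Python sketch. It is a guess, not a port of the Java repo: the class name `Layer`, the choice to recycle only when the pool runs dry, and picking the victim uniformly at random are all assumptions made for illustration.

```python
import random

class Layer:
    """One layer with its own pool of free nodes (assumed design)."""

    def __init__(self, pool_size, seed=0):
        if pool_size < 1:
            raise ValueError("pool_size must be at least 1")
        self.pool = list(range(pool_size))  # ids of unused nodes
        self.nodes = {}                     # pattern (frozenset) -> node id
        self.rng = random.Random(seed)

    def observe(self, pattern):
        """Return the node for this pattern, allocating one if it is new."""
        key = frozenset(pattern)
        if key in self.nodes:               # all required inputs matched
            return self.nodes[key]
        if not self.pool:                   # assumption: recycle only when empty
            self.recycle()
        node = self.pool.pop()
        self.nodes[key] = node
        return node

    def recycle(self):
        """Destroy one randomly chosen node and return it to the pool."""
        victim = self.rng.choice(list(self.nodes))
        self.pool.append(self.nodes.pop(victim))

layer = Layer(pool_size=2)
first = layer.observe({"x", "y"})
second = layer.observe({"y", "z"})
again = layer.observe({"y", "x"})   # same pattern, same node
third = layer.observe({"q"})        # pool is empty, so one node is recycled
```

A multi-layer version would keep one such `Layer` per level and feed the ids of active nodes upward as the next level's input pattern.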

Well, replace AND with some sort of majority gate (which makes more sense), and you seem to have a pretty conventional bare-bones model.

Majority gates trade compactness for precision, but it would work. Conventional what? Unsupervised? I support all three (unsupervised, supervised, RL); that’s unconventional. Nice talking to you.
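The AND-versus-majority trade-off discussed here can be shown in a few lines. This is only a sketch: the function names and the "more than half" rule are assumptions, and a real model would use a tunable threshold.

```python
def and_gate(required, active):
    """Opens only if every required input is active."""
    return required <= active

def majority_gate(required, active):
    """Opens if more than half of the required inputs are active."""
    return 2 * len(required & active) > len(required)

required = {"a", "b", "c", "d", "e"}
noisy = {"a", "b", "c", "d"}            # same pattern, one input dropped
other = {"a", "b", "c", "x", "y"}       # a different pattern sharing 3 inputs

results = {
    "and_noisy": and_gate(required, noisy),
    "maj_noisy": majority_gate(required, noisy),
    "and_other": and_gate(required, other),
    "maj_other": majority_gate(required, other),
}
```

The majority gate tolerates the dropped input but also opens for the different pattern, while the AND gate rejects both.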

Conventional spiking neuron.

In this model it doesn’t matter where the input comes from: unsupervised from lower cortical areas, supervision from higher cortical areas, or reinforcement from the limbic system or brainstem.

In real neurons it does matter: see the HTM neuron with its distinct layer I feedback (figure 1 in “A comparative study of HTM and other neural network models for online sequence learning with streaming data”, IEEE Xplore). Reinforcement is mediated by neuromodulators, such as dopamine, which work on a longer time scale.