Yeah, I suck at explaining my ideas! Let me try again.

The idea is to try and represent knowledge using learn rules that generally look like this:

operator |some ket> => some-superposition

where “operator” is a string, with one interpretation being a labeled link between nodes in a network

|some ket> is called a ket, with one interpretation being a node in a network

and a superposition is a linear combination of kets, with one interpretation being current network state

For example, a couple of simple learn rules:

learn Fred’s friends are Sam, Max, and Rob:

the-friends-of |Fred> => |Sam> + |Max> + |Rob>

learn a shopping list:

the-shopping |list> => 5|apples> + 4|oranges> + |coffee> + |steak> + |chocolate> + |2 litres of milk>

Once the learn rules are loaded into the console, they can be recalled:

eg:

sa: the-friends-of |Fred>

|Sam> + |Max> + |Rob>

sa: the-shopping |list>

5|apples> + 4|oranges> + |coffee> + |steak> + |chocolate> + |2 litres of milk>

Anyway, that is the starting point. The rest of the project is desgined around operators that map superpositions to superpositions. The central motivation being that I think superpositions, and operators, are a natural representation for what is going on in brains, and networks in general. A claim that SDR’s also make. Indeed, part of the relevance of all this to HTM is that superpositions conceptually are very similar to SDR’s. For example:

- In practice most superpositions are sparse, since any ket with coefficient 0 we can drop without changing the meaning of that superposition.

- In some use cases the superpositions have semantics distributed among a collection of kets, and we can randomly drop, or add, other kets and the meaning only changes a bit.

- We have a function that returns the similarity of two superpositions. 1 for exactly the same, 0 for disjoint, values in between otherwise. The HTM analog being the overlap score, used to score the similarity of SDR’s.

A brief demonstration of those properties:

Let’s use an operator that maps strings to letter-ngrams, so we can measure string similarity:

sa: letter-ngrams[1,2,3] |management>

2|m> + 2|a> + 2|n> + |g> + 2|e> + |t> + |ma> + |an> + |na> + |ag> + |ge> + |em> + |me> + |en> + |nt> + |man> + |ana> + |nag> + |age> + |gem> + |eme> + |men> + |ent>

sa: letter-ngrams[1,2,3] |measurement>

2|m> + 3|e> + |a> + |s> + |u> + |r> + |n> + |t> + 2|me> + |ea> + |as> + |su> + |ur> + |re> + |em> + |en> + |nt> + |mea> + |eas> + |asu> + |sur> + |ure> + |rem> + |eme> + |men> + |ent>

- these are sparse, since we omit all ngrams that are not in the seed strings.

- we can measure their similarity using the ket-simm function:

sa: ket-simm(letter-ngrams[1,2,3] |measurement>, letter-ngrams[1,2,3] |management>)

0.478|simm>

ie, 47.8% similarity.

- the spelling similarity is distributed among the kets. We can add or subtract some of these kets but some similarity will remain.

To test this, we first need to find out how many kets are in each superposition (ie, how many on bits in the SDR):

sa: how-many letter-ngrams[1,2,3] |measurement>

|number: 26>

sa: how-many letter-ngrams[1,2,3] |management>

|number: 23>

Now, pick a number less than 23, say 18 on bits, and remeasure similarity:

(where the pick[k] operator returns k random kets from a given superposition)

sa: ket-simm(pick[18] letter-ngrams[1,2,3] |measurement>, pick[18] letter-ngrams[1,2,3] |management>)

0.327|simm>

ie, they are still 32.7% similar.

If we drop back to only 10 on bits, and remeasure similarity:

sa: ket-simm(pick[10] letter-ngrams[1,2,3] |measurement>, pick[10] letter-ngrams[1,2,3] |management>)

0.167|simm>

ie, they are still 16.7% similar.

Now with the background out of the way, we need to tie this all back to high order sequences.

Let’s learn these three sequences:

– count one two three four five six seven

– Fibonacci one one two three five eight thirteen

– factorial one two six twenty-four one-hundred-twenty

– define a superposition/SDR with all 64k bits on:

full |range> => range(|1>,|65536>)

– encode each of our objects into SDR’s with each having only 40 random bits on out of the full 64k:

encode |count> => pick[40] full |range>

encode |one> => pick[40] full |range>

encode |two> => pick[40] full |range>

encode |three> => pick[40] full |range>

encode |four> => pick[40] full |range>

encode |five> => pick[40] full |range>

encode |six> => pick[40] full |range>

encode |seven> => pick[40] full |range>

encode |Fibonacci> => pick[40] full |range>

encode |eight> => pick[40] full |range>

encode |thirteen> => pick[40] full |range>

encode |factorial> => pick[40] full |range>

encode |twenty-four> => pick[40] full |range>

encode |one-hundred-twenty> => pick[40] full |range>

– Here is what these guys look like under the hood, which is clearly a SDR:

(in this case a list of the indices of the on bits)

sa: encode |count>

|48476> + |46200> + |16172> + |43017> + |25301> + |41925> + |14697> + |20684> + |23309> + |45815> + |6938> + |7894> + |37263> + |34500> + |47554> + |41565> + |21080> + |29408> + |63024> + |59305> + |29824> + |11414> + |30627> + |59143> + |61059> + |52893> + |12487> + |7664> + |63961> + |37138> + |6132> + |57766> + |5397> + |41515> + |2481> + |51026> + |33491> + |64623> + |2565> + |50773>

– Next, learn sequences of these objects, using the idea of minicolumns,

– where append-column[k] appends a column to the encoded SDR with all k cells on

– and random-column[k] appends a column with only 1 out of k cells on.

– give the sequence a name:

sequence-number |node 0: *> => |sequence-0>

– start learning the sequence:

pattern |node 0: 0> => random-column[50] encode |count>

then |node 0: 0> => random-column[50] encode |one>

pattern |node 0: 1> => then |node 0: 0>

then |node 0: 1> => random-column[50] encode |two>

pattern |node 0: 2> => then |node 0: 1>

then |node 0: 2> => random-column[50] encode |three>

pattern |node 0: 3> => then |node 0: 2>

then |node 0: 3> => random-column[50] encode |four>

pattern |node 0: 4> => then |node 0: 3>

then |node 0: 4> => random-column[50] encode |five>

pattern |node 0: 5> => then |node 0: 4>

then |node 0: 5> => random-column[50] encode |six>

pattern |node 0: 6> => then |node 0: 5>

then |node 0: 6> => random-column[50] encode |seven>

– start learning the next sequence:

– Fibonacci one one two three five eight thirteen

sequence-number |node 1: *> => |sequence-1>

pattern |node 1: 0> => random-column[50] encode |Fibonacci>

then |node 1: 0> => random-column[50] encode |one>

pattern |node 1: 1> => then |node 1: 0>

then |node 1: 1> => random-column[50] encode |one>

pattern |node 1: 2> => then |node 1: 1>

then |node 1: 2> => random-column[50] encode |two>

pattern |node 1: 3> => then |node 1: 2>

then |node 1: 3> => random-column[50] encode |three>

pattern |node 1: 4> => then |node 1: 3>

then |node 1: 4> => random-column[50] encode |five>

pattern |node 1: 5> => then |node 1: 4>

then |node 1: 5> => random-column[50] encode |eight>

pattern |node 1: 6> => then |node 1: 5>

then |node 1: 6> => random-column[50] encode |thirteen>

– start learning the next sequence:

– factorial one two six twenty-four one-hundred-twenty

sequence-number |node 2: *> => |sequence-2>

pattern |node 2: 0> => random-column[50] encode |factorial>

then |node 2: 0> => random-column[50] encode |one>

pattern |node 2: 1> => then |node 2: 0>

then |node 2: 1> => random-column[50] encode |two>

pattern |node 2: 2> => then |node 2: 1>

then |node 2: 2> => random-column[50] encode |six>

pattern |node 2: 3> => then |node 2: 2>

then |node 2: 3> => random-column[50] encode |twenty-four>

pattern |node 2: 4> => then |node 2: 3>

then |node 2: 4> => random-column[50] encode |one-hundred-twenty>

Once this is all processed and loaded into the console we can ask questions.

(see: http://semantic-db.org/sw-examples/improved-high-order-sequences--saved.sw )

(where “step-k” is an operator that gives a prediction k steps from the input)

sa: step-1 |count>

1.0|one>

sa: step-2 |count>

0.976|two>

sa: step-3 |count>

0.932|three>

sa: step-1 |factorial>

1.0|one>

sa: step-2 |factorial>

1.0|two>

sa: step-3 |factorial>

0.93|six> + 0.07|three>

sa: step-4 |factorial>

0.906|twenty-four>

– without context, we don’t know which sequence “one” is in.

– so 75% chance step-1 is “two” but a 25% chance it is "one"

sa: step-1 |one>

0.75|two> + 0.25|one>

sa: step-2 |one>

0.506|three> + 0.253|six> + 0.241|two>

sa: step-3 |one>

0.259|four> + 0.259|five> + 0.247|twenty-four> + 0.235|three>

And so on. In my opinion a pretty demonstration of HTM style symbolic high order sequence learning.

Heh, not that I’m saying it is useful yet, but it is a starting point.

I hope some of this post made sense! Sorry about the length, and hope it is not too off-topic.

For completeness, this is what the step-k operators look like:

input-encode |*> #=> append-column[50] encode |_self>

step-1 |> #=> drop-below[0.05] similar-input[encode] extract-category then drop-below[0.01] similar-input[pattern] input-encode |_self>

step-2 |> #=> drop-below[0.05] similar-input[encode] extract-category then drop-below[0.01] similar-input[pattern] then drop-below[0.01] similar-input[pattern] input-encode |_self>

step-3 |*> #=> drop-below[0.05] similar-input[encode] extract-category then drop-below[0.01] similar-input[pattern] then drop-below[0.01] similar-input[pattern] then drop-below[0.01] similar-input[pattern] input-encode |_self>

links:

http://semantic-db.org/sw-examples/improved-high-order-sequences.sw

http://semantic-db.org/sw-examples/improved-high-order-sequences--saved.sw

http://write-up.semantic-db.org/201-new-operators-append-column-and-random-column.html

http://write-up.semantic-db.org/202-learning-sequences-htm-style-using-if-then-machines.html

http://write-up.semantic-db.org/203-more-htm-sequence-learning.html

http://write-up.semantic-db.org/204-random-encode-similarity-matrices.html

http://write-up.semantic-db.org/206-naming-htm-sequences.html

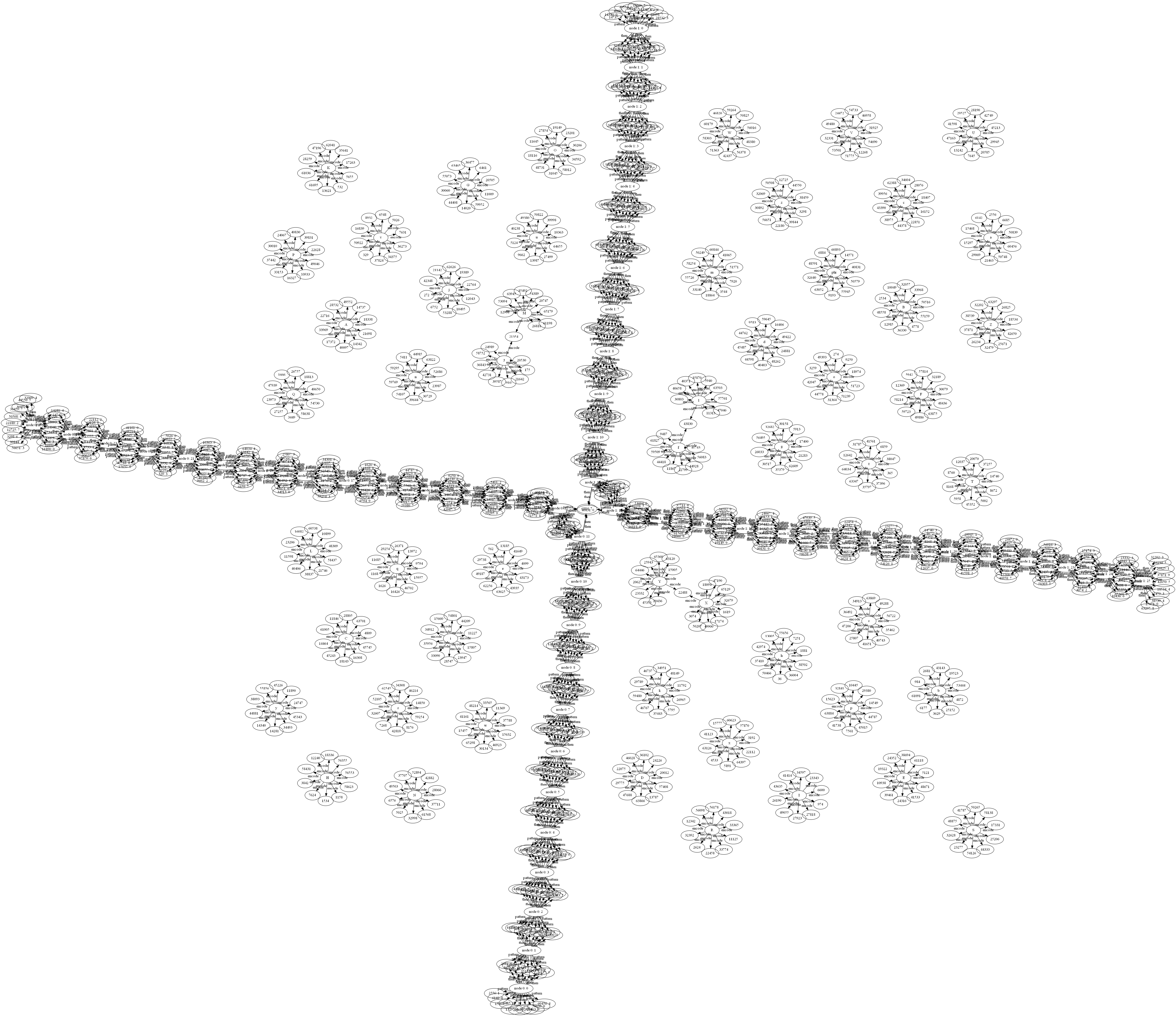

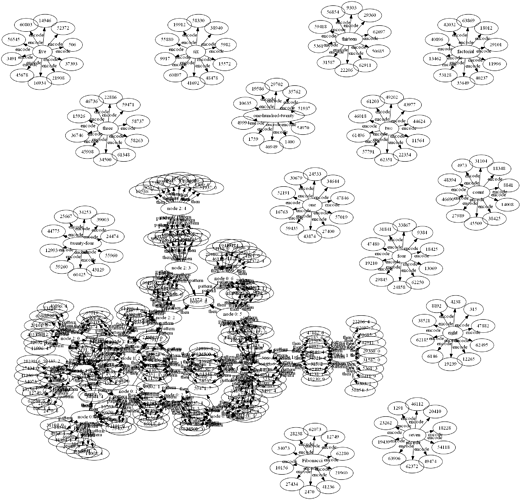

http://write-up.semantic-db.org/207-visualizing-htm-sequences.html