Yes, I have been referring to it as that (the name may not stick, however: the term has been used in HTM’s past to refer to older variations of the TM algorithm, so reusing it could cause confusion).

For this post, I’ll leave out important elements of the theory, such as how activity in this layer is driven by activity in the TM layer, as well as how the long-distance connections work for voting. I’ll be posting a more comprehensive explanation on a separate thread, but I want to stay on topic for this thread.

To keep things simple and on-topic, let’s assume an object representation has already been settled on through some external means that I will not describe for now. Let’s also not worry about any connections from the TM layer up to the output layer. Instead, we’ll focus only on the apical signal from the output layer down to the TM layer, and how that can be used to address the “repeating inputs” problem we are discussing on this thread.

The basic strategy is to introduce an output/object layer. Activity in this layer will remain stable throughout a sequence for as long as it repeats. The cells in this layer will provide an apical signal to cells in the TM layer. Thus, a cell in the TM layer may become predictive due to the distal signal (from other cells in the TM layer), or it might become predictive due to the apical signal (from cells in the output layer). A cell may also become predictive due to both distal and apical signals.
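As a minimal sketch of the two prediction sources described above: the snippet below reports, for each predictive TM cell, whether the prediction came from the distal signal, the apical signal, or both. The segment model (a pair of owning cell and presynaptic cells), the function names, and the single shared `activation_threshold` are assumptions made for illustration, not part of any existing HTM library.

```python
from typing import Dict, FrozenSet, Iterable, Set, Tuple

# Assumed model: a segment is (owning cell, presynaptic cells with connected synapses).
Segment = Tuple[str, FrozenSet[str]]


def predicted_by(segments: Iterable[Segment],
                 active_presynaptic: Set[str],
                 activation_threshold: int) -> Set[str]:
    """Cells owning at least one segment with enough active synapses."""
    return {
        cell
        for cell, presynaptic in segments
        if len(presynaptic & active_presynaptic) >= activation_threshold
    }


def predictive_sources(distal_segments: Iterable[Segment],
                       apical_segments: Iterable[Segment],
                       active_tm_cells: Set[str],
                       active_output_cells: Set[str],
                       activation_threshold: int) -> Dict[str, Dict[str, bool]]:
    """Map each predictive TM cell to the signal(s) that predicted it."""
    # Distal segments listen to other TM cells; apical segments listen to the output layer.
    distal = predicted_by(distal_segments, active_tm_cells, activation_threshold)
    apical = predicted_by(apical_segments, active_output_cells, activation_threshold)
    return {
        cell: {"distal": cell in distal, "apical": cell in apical}
        for cell in distal | apical
    }


# Toy usage with one output cell per object and a threshold of 1 (hypothetical values).
sources = predictive_sources(
    distal_segments=[("B'", frozenset({"A'"}))],
    apical_segments=[("B'", frozenset({"ABCD"})), ("C'", frozenset({"ABCD"}))],
    active_tm_cells={"A'"},
    active_output_cells={"ABCD"},
    activation_threshold=1,
)
```

Keeping the two segment lists separate is the only structural requirement here: it is what lets later steps tell a distal prediction from an apical one.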

Each timestep, the winner cells in the TM layer will grow apical connections to the active cells in the output layer, using the exact same learning algorithm as TM (except the cells are growing apical segments rather than distal ones). One could use distal segments for this rather than apical ones (if there were some reason that it was more biologically feasible) – the only requirement is to separate which layer the input is coming from.
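A rough sketch of the apical growth step, assuming an apical segment can be modeled as a plain set of output-cell IDs and that new synapses are created already connected. The function name is hypothetical, and candidates are picked in sorted order only to keep the example reproducible (TM normally samples them at random).

```python
from typing import List, Set


def grow_apical_synapses(segment: Set[str],
                         active_output_cells: Set[str],
                         max_new_synapse_count: int) -> List[str]:
    """Add up to max_new_synapse_count synapses to active output cells
    that the segment is not yet connected to. Mutates `segment` in place."""
    candidates = sorted(active_output_cells - segment)  # sorted for reproducibility only
    new_synapses = candidates[:max_new_synapse_count]
    segment.update(new_synapses)
    return new_synapses


# Hypothetical object code made of four output cells (the post's pictures use one).
object_abcd = {"o0", "o1", "o2", "o3"}
apical_segment_of_b: Set[str] = set()

first_pass = grow_apical_synapses(apical_segment_of_b, object_abcd, max_new_synapse_count=2)
second_pass = grow_apical_synapses(apical_segment_of_b, object_abcd, max_new_synapse_count=2)
```

The same function would work for distal growth if it were fed the previous winner cells instead of the active output cells, which is the sense in which the learning rule is shared.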

Any time a minicolumn is activated, any cell(s) predicted by both apical and distal signals will become the winner. If none are predicted by both signals, then any cell(s) predicted by the distal signal will become the winner. If none are predicted by the distal signal, then any cell(s) predicted by the apical signal will become the winner. And of course, if no cells are predicted, the minicolumn will burst.
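The priority order above can be sketched as a small function. This is only an illustration under assumed names: `select_winners` and its arguments are hypothetical, and choosing a winner inside a bursting minicolumn is left to the usual TM logic rather than shown here.

```python
from typing import Iterable, List, Set, Tuple


def select_winners(column_cells: Iterable[str],
                   distal_predicted: Set[str],
                   apical_predicted: Set[str]) -> Tuple[List[str], bool]:
    """Return (winner cells, bursting flag) for one activated minicolumn."""
    cells = list(column_cells)

    # 1. Cells predicted by both signals take priority.
    both = [c for c in cells if c in distal_predicted and c in apical_predicted]
    if both:
        return both, False

    # 2. Otherwise fall back to distally predicted cells.
    distal = [c for c in cells if c in distal_predicted]
    if distal:
        return distal, False

    # 3. Otherwise fall back to apically predicted cells.
    apical = [c for c in cells if c in apical_predicted]
    if apical:
        return apical, False

    # 4. Nothing was predicted: the minicolumn bursts.
    return [], True


column_b = ["B'", "B''", "B'''", "B''''"]
winners, bursting = select_winners(column_b, distal_predicted=set(), apical_predicted={"B'"})
```

In the usage line, `B'` wins on the apical signal alone, which is the case that prevents bursting when a repeating sequence wraps around.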

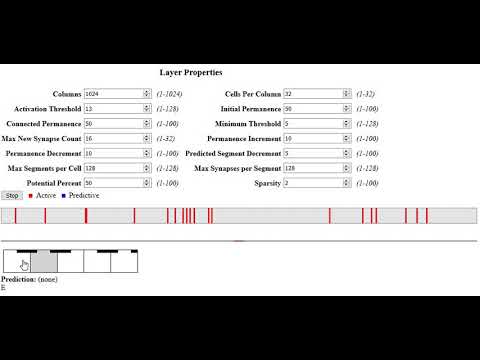

To make things easier to visualize, I’ll be using a tiny TM layer which has 4 cells per minicolumn, and one minicolumn per input. I’ll also be using a single cell in the output layer to represent each object. Obviously in practice, there would be larger dimensions involved. This is just to describe the strategy in the simplest possible manner.

For these visualizations, I am assuming the parameters are set such that the max new synapse count in the TM layer is greater than the activation threshold (one-shot learning), and for the output layer, less than the activation threshold (such that a sequence must be seen twice for it to become connected). I don’t yet know what the best general learning rate should be, but for the below example, “two shot learning” is sufficient to explain the concept without requiring me to draw out too many iterations.
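The relationship between these two parameters and the number of exposures needed can be written as simple arithmetic. The sketch below assumes every pass adds the full max new synapse count to the same segment and that new synapses are connected immediately; a real implementation may cap growth differently. The numeric values are hypothetical and not taken from any tuned configuration.

```python
import math


def passes_until_connected(activation_threshold: int, max_new_synapse_count: int) -> int:
    """Number of exposures before a segment reaches the activation threshold,
    assuming each exposure adds max_new_synapse_count connected synapses."""
    if activation_threshold <= 0 or max_new_synapse_count <= 0:
        raise ValueError("both parameters must be positive")
    return math.ceil(activation_threshold / max_new_synapse_count)


# Hypothetical values: TM grows more synapses than the threshold (one-shot),
# apical growth is below the threshold (two-shot).
tm_passes = passes_until_connected(activation_threshold=13, max_new_synapse_count=20)
apical_passes = passes_until_connected(activation_threshold=13, max_new_synapse_count=8)
```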

A quick explanation of the symbols and colors:

Let’s begin with learning the repeating sequence A-B-C-D using this strategy.

The first time through the sequence A-B-C-D, the minicolumns burst, winners are chosen, and distal connections are formed as normal. Additionally, the winner cells also grow apical connections with the active cells in the output layer representing object “ABCD”. Note that the learning rate is set low for the apical connections, so after this pass they are connected below the activation threshold.

The second time through the sequence, the first input bursts, and a second representation A’’ is chosen as winner. This one grows both a distal connection to D’, as well as an apical connection to object “ABCD”. This second time through the sequence, B’, C’, and D’ grow additional apical synapses with object “ABCD”, and are now connected above the activation threshold. Note that there are two potential representations for “A” at this point, but neither is connected to object “ABCD” above the activation threshold.

Normally, this would be the point where the “repeating inputs” problem kicks in, and the “B” minicolumns would burst this time through the sequence. However, B’ is now predictive due to the apical signal, so this bursting will not happen. Note that A’’ was predicted distally, which allowed it to become the winner and grow additional apical connections to object “ABCD”. Thus, A’ has lost the competition. You can now repeat the sequence as many times as you want, and it will cycle through the same four representations in the TM layer. Notice that TM has (distally) learned the sequence B’-C’-D’-A’’, and it is the apical connection which bridges the gap between A’’ and B’.

So what happens when we introduce a new sequence X-B-C-Y? Will this strategy lead to ambiguity like the other strategy? Let’s find out.

The first time through, you see the expected behavior of TM. The previously learned connection between B’ and C’ is activated by bursting in step 2, and a new distal connection between C’ and Y’ is formed in step 4. As in the last scenario, apical connections are formed to object “XBCY”, and they are initially below the activation threshold.

The second time through the sequence, a second representation X’’ is chosen (like we saw for A’’ in the previous example). B’ is activated again, so it grows additional apical connections with object “XBCY”, and is now above the activation threshold. Because B’ was not connected to anything in the previous iteration, this time through the sequence the C minicolumns burst, and a second representation C’’ is chosen. Because of the bursting, Y’ is predicted and becomes active, growing additional apical connections to object “XBCY”. The representation for X’’ is now predicted.

The third time through the sequence, the apical connections are reinforced like we saw in the previous example (they all now breach the activation threshold), and bursting has stopped. X’ and C’ have lost the competition to X’’ and C’’. You can now repeat the sequence as many times as you want, and it will cycle through the same four representations in the TM layer. There is no ambiguity with the four representations in sequence A-B-C-D. Interestingly, in this case TM has (distally) learned two sequences, Y’-X’’ and B’-C’’, and it is the apical connection which bridges the two gaps.

Notice also that in the process of learning X-B-C-Y, a stray distal connection between C’ and Y’ was formed. Inputting A-B-C… will now distally predict both D’ and Y’. However, D’ will be predicted both distally and apically, so this could be used by any classification logic to weigh D’ more heavily than Y’ as the next likely input.
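One way a classifier could use this, sketched under assumptions: score each predicted cell by which signals support it and rank by score. The function name and the equal default weights are hypothetical; the post does not prescribe a particular weighting.

```python
from typing import List, Set, Tuple


def rank_predictions(distal_predicted: Set[str],
                     apical_predicted: Set[str],
                     distal_weight: float = 1.0,
                     apical_weight: float = 1.0) -> List[Tuple[str, float]]:
    """Rank predicted cells so that cells supported by both signals come first."""
    scores = {}
    for cell in distal_predicted | apical_predicted:
        score = 0.0
        if cell in distal_predicted:
            score += distal_weight
        if cell in apical_predicted:
            score += apical_weight
        scores[cell] = score
    # Highest score first; ties broken by name so the order is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# After A-B-C..., both D' and Y' are distally predicted, but only D' is
# apically predicted by object "ABCD".
ranking = rank_predictions(distal_predicted={"D'", "Y'"}, apical_predicted={"D'"})
```

With these weights D’ scores 2.0 and Y’ scores 1.0, so D’ is reported as the more likely next input while Y’ remains visible as a weaker candidate.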

I’ll provide pseudo-code and a working demo in a future post (still working out some kinks), but wanted to post a basic introduction to the idea for anyone who is curious. Let me know if something isn’t clear.

I’ll do some further analysis on the “connect to all active cells” strategy to see where it breaks down. My intuition tells me it will create ambiguity in some cases (double sub-sequences, for example), but I will put together hard proof with visuals.
