Hey folks!

Jeff’s talk at the APL last month reinvigorated my interest in HTM, so I’ve spent the last month or so catching up on the current state of things. To help me with that goal, I ended up tinkering on ZHTM after work.

ZHTM is a Python 3 implementation of both a Spatial Pooler and the Temporal Memory algorithm (as defined largely by BAMI). The current implementation uses just base Python; the only dependencies (thus far) are pytest and pytest-mock for testing purposes.

As of earlier today, it is at least possible to feed inputs into the Temporal Memory algorithm repeatedly without obvious errors, so I’m celebrating that milestone by posting here.

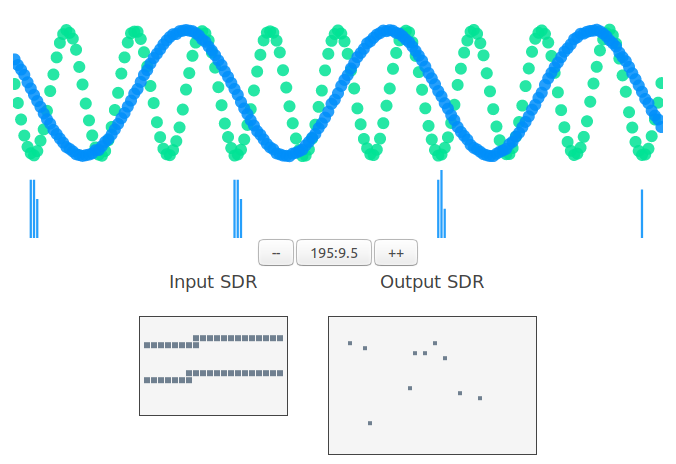

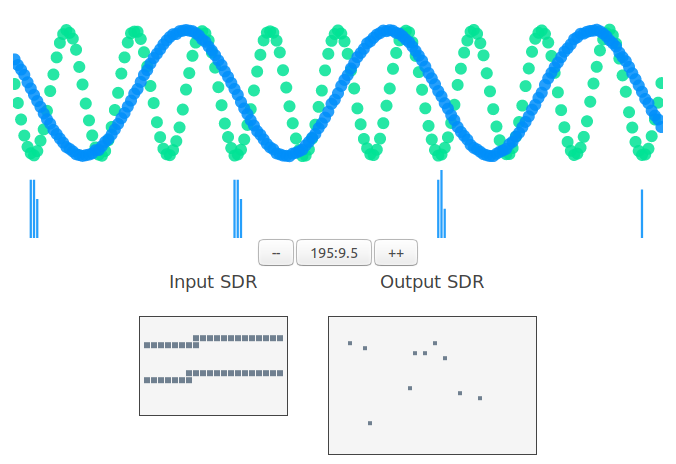

I’ve also got some super-hacky Vue.js visualizations in progress, but they are significantly less demo-worthy at the moment.

Over the next month, I am hoping to move the system from “doesn’t throw exceptions” into “might be useful” territory:

- Add integration tests to assert that Spatial Pooler and Temporal Memory operate as expected at a higher level than the unit tests currently provide

- Create example systems with known data sets (e.g. the gym or traffic data sets)

- Add 5+ of the basic encoders

- Create demos of at least 3 major components of the system via the web viewer (unpublished)

Anyway, thanks to everyone for your helpful posts and articles. I haven’t said much in the last month, but I appreciate all the knowledge you’ve been sharing.

P.S. Here’s part of the call graph from calling the Temporal Memory algorithm 20 times. I just thought it looked neat.


Thanks for sharing - yet another concrete interpretation of BAMI. Although I try to avoid repeating myself, I’m also slowly writing an HTM framework primarily for learning HTM theory. I tend to learn algorithms better by reading/writing code than text docs or videos.

I thought about using plain objects as well, since that would ease the learning process, but I think I will take a different approach to future-proof it. Just to let you know, I have also asked around informally about how much matrix/linear algebra is used in nupic py and c++. I didn’t get many responses, but at this stage I think HTM algorithms don’t have to rely on linear algebra libs as much as today’s existing ML frameworks do.


I hear you there! It’s really easy to just skim some code and think that you understand something. Nothing forces you to prove it like making working code.


Quick status update!

I’ve been plugging away at this implementation. Yesterday I managed to run a zhtm system through the anomaly benchmark. This is using raw prediction-error scoring, as I haven’t implemented the ‘anomaly likelihood’ stuff quite yet.
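For anyone unfamiliar with raw prediction-error scoring, here is a minimal sketch of the idea as I understand it: the score is the fraction of currently active columns that the temporal memory did not predict on the previous step. The function and argument names are made up for illustration and are not zhtm's actual API.

```python
def raw_anomaly_score(active_columns, predicted_columns):
    """Fraction of active columns that were NOT predicted (0.0 to 1.0).

    Hypothetical helper: both arguments are iterables of column indices.
    """
    active = set(active_columns)
    if not active:
        # Assumption: with no active columns there is nothing to be surprised by.
        return 0.0
    predicted = set(predicted_columns)
    return 1.0 - len(active & predicted) / len(active)


# Half of the active columns were predicted, so the score is 0.5.
score = raw_anomaly_score({1, 2, 3, 4}, {1, 2, 9})
```

A score of 0.0 means every active column was predicted, and 1.0 means none were.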

I did discover a pretty big conceptual misunderstanding to work through: I totally misinterpreted how data was passed from the spatial pooler into the temporal memory. Rather than send the spatial pooler’s active columns directly into the temporal memory system, I was re-sampling the spatial pooler’s output, in the same way that the spatial pooler samples the input space. Once I fixed that mistake, the system started behaving itself more like I expected.

Anomaly benchmark

Here are some super preliminary results from the final_results.json file that NAB generates:

    {
        "null": {
            "reward_low_FN_rate": 0.0,
            "reward_low_FP_rate": 0.0,
            "standard": 0.0
        },
        "numenta": {
            "reward_low_FN_rate": 74.32064726862069,
            "reward_low_FP_rate": 61.70812096732759,
            "standard": 69.67062607534481
        },
        "zhtm": {
            "reward_low_FN_rate": 73.01327530933908,
            "reward_low_FP_rate": 56.10583427353449,
            "standard": 66.84749917090518
        }
    }

Despite not having the likelihood stuff implemented, my system appears to be within the same ballpark as the Numenta detector, which is exciting for me! (You can see the ‘official’ scores for other detectors here, in the section labeled ‘Scoreboard’).

Part of me is worried I’ve done something wrong that is artificially inflating the score, so I’ll be on the lookout for that as I improve and refine the code base.

Brief system config I used:

input SDR

* basic ScalarEncoder
* num_buckets clamped to [150, 1000]
* num_active_bits = 41

spatial pooler

* num_columns = 2048
* stimulus_threshold = 0
* winning_column_count = 41
* min_overlap_duty_cycle_percent = 0.001
* boost_strength = 0.01
* percent_potential = 0.8

temporal memory

* num_columns = 2048
* TMColumn.cell_count = 32
* Cell.new_synapse_count = 15
* Cell.desired_synapses_per_segment = 32
* Cell.distal_segment_activation_threshold = 0
* Cell.distal_segment_matching_threshold = 0
* DendriteSegment.permanence_increment = 0.1
* DendriteSegment.permanence_decrement = 0.05


Hi @Balladeer,

Have you implemented an SDR encoder as well or are you reusing the existing ones in nupic? Asking to get an idea of your implementation. Cheers.

My base SDR is just a wrapper around python set objects. As for encoders, I’ve implemented:

- scalar encoder, which gets you basic number encoding

- cycle encoder, which lets you encode categories, with or without neighboring overlap

- multi encoder, which lets you combine a list of encoders into a single output SDR

With those three, I’ve been able to experiment with quite a bit. My plan is to work on other encoders as I have a need for them.
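To make the "wrapper around python set objects" idea concrete, here is a rough sketch of what a set-backed SDR and a multi-encoder-style combination could look like. Everything here (`SetSDR`, `overlap`, `concatenate`) is a hypothetical name chosen for illustration; it is not the actual zhtm code.

```python
class SetSDR:
    """Hypothetical set-backed SDR: stores only the indices of the on bits."""

    def __init__(self, size, active_bits=()):
        self.size = size
        self.active = set(active_bits)
        if any(bit < 0 or bit >= size for bit in self.active):
            raise ValueError("active bit index out of range")

    def overlap(self, other):
        """Number of on bits shared with another SDR."""
        return len(self.active & other.active)

    def to_bit_string(self):
        """Dense '0'/'1' rendering, handy for debugging."""
        return "".join("1" if i in self.active else "0" for i in range(self.size))


def concatenate(sdrs):
    """Join SDRs end to end, roughly what a multi encoder might do."""
    offset = 0
    active = set()
    for sdr in sdrs:
        # Shift each SDR's indices past the bits already used.
        active.update(bit + offset for bit in sdr.active)
        offset += sdr.size
    return SetSDR(offset, active)


combined = concatenate([SetSDR(4, {0, 1}), SetSDR(3, {2})])
```

Storing only the on-bit indices keeps overlap checks down to a set intersection, which fits sparse representations well.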


In your scalar encoder, what does a bucket mean by the way?

A bucket basically represents a range of numbers all at once. Short example:

Say we are encoding the values 1-100, and decide to use 10 buckets. If we use num_active_bits = 1, then our scalar encoder outputs an SDR with ten bits: 0000000000

Each bit represents 1/10 of the input space, so bucket 1 would be on for all values 1-10, bucket 2 would be on for all values 11-20, bucket 3 for 21-30, etc:

- input 01 --> output 1000000000

- input 05 --> output 1000000000

- input 10 --> output 1000000000

- input 11 --> output 0100000000

- input 55 --> output 0000010000

- input 99 --> output 0000000001

This is very similar to how a histogram works, or any other “range banding” generalization: convert a large number of inputs into a smaller number of outputs.
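The bucket example above can be sketched in a few lines of Python. This is a simplified illustration, not the zhtm encoder itself: the function name and defaults are made up, it assumes whole-number inputs, and the rule for more than one active bit (total bits = buckets + active bits - 1, with each bucket lighting a run of consecutive bits) follows my reading of the simple number encoder described in BAMI.

```python
def encode_scalar(value, min_value=1, max_value=100,
                  num_buckets=10, num_active_bits=1):
    """Hypothetical bucketed scalar encoder returning a '0'/'1' string."""
    if not min_value <= value <= max_value:
        raise ValueError("value outside the encoder's range")
    # Number of whole-number inputs covered (assumes integer-like inputs).
    span = max_value - min_value + 1
    # Map the value to a bucket index in [0, num_buckets - 1].
    bucket = int((value - min_value) * num_buckets // span)
    # Assumption: wider encodings slide a window of on bits across the output.
    total_bits = num_buckets + num_active_bits - 1
    bits = ["0"] * total_bits
    for i in range(bucket, bucket + num_active_bits):
        bits[i] = "1"
    return "".join(bits)


print(encode_scalar(10))  # 1000000000
print(encode_scalar(11))  # 0100000000
print(encode_scalar(55))  # 0000010000
```

With `num_active_bits` greater than 1, neighboring buckets share on bits, so nearby values produce overlapping SDRs.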

You might look at this test for some more on how the scalar encoder is expected to behave.

You can also check out the section called “A Simple Encoder for Numbers” from the encoder chapter (pdf!) of BAMI, as my encoder basically just implements the one described in that section.


Thanks for taking the time to provide an explanation and for the links. I understand now that bucket is just like a bin in a histogram.